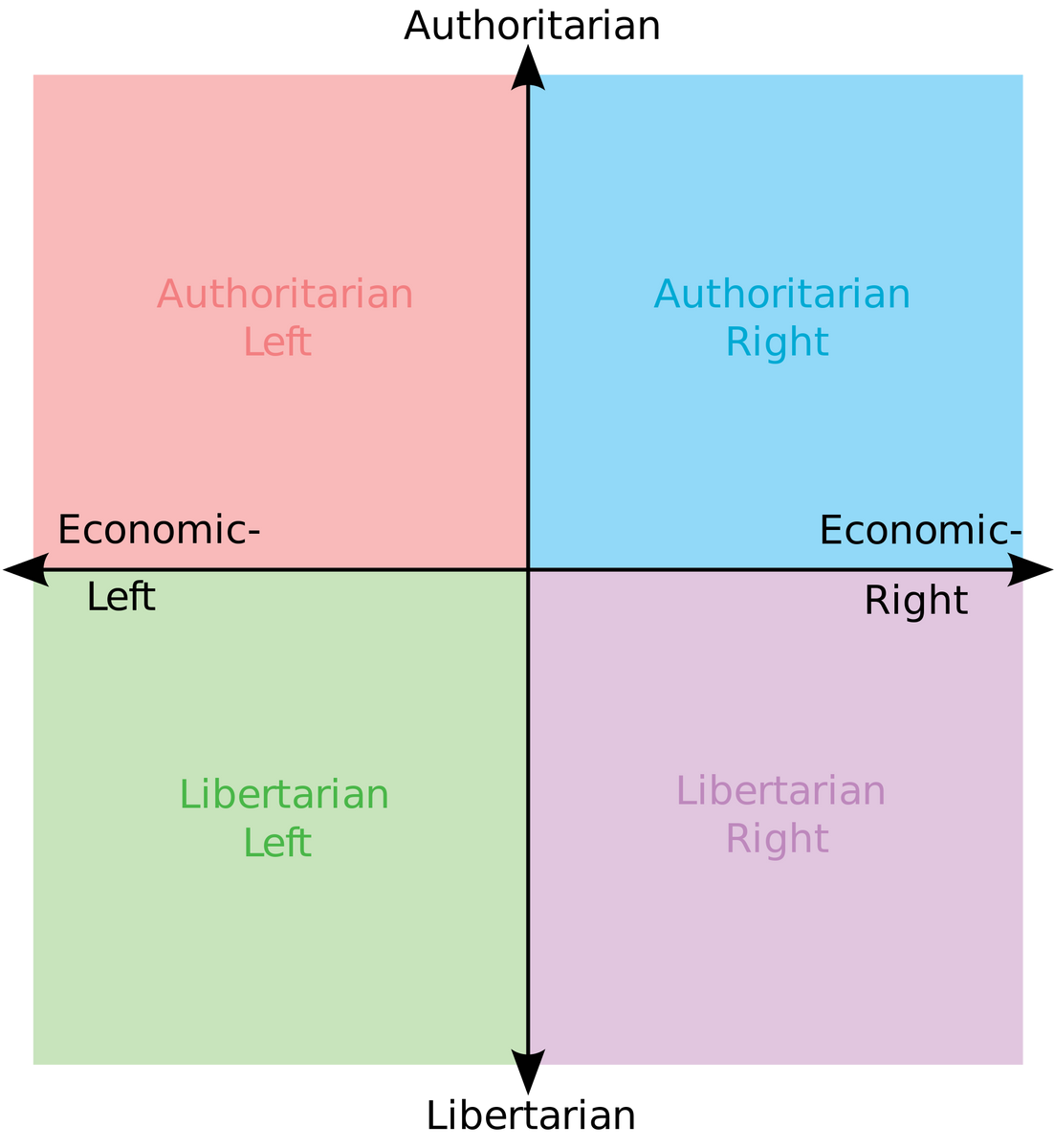

In Defense of Bitcoin Maximalism

2022 Apr 01

We've been hearing for years that the future is blockchain, not Bitcoin. The future of the world won't be one major cryptocurrency, or even a few, but many cryptocurrencies - and the winning ones will have strong leadership under one central roof to adapt rapidly to users' needs for scale. Bitcoin is a boomer coin, and Ethereum is soon to follow; it will be newer and more energetic assets that attract the new waves of mass users who don't care about weird libertarian ideology or "self-sovereign verification", are turned off by toxicity and anti-government mentality, and just want blockchain defi and games that are fast and work.

But what if this narrative is all wrong, and the ideas, habits and practices of Bitcoin maximalism are in fact pretty close to correct? What if Bitcoin is far more than an outdated pet rock tied to a network effect? What if Bitcoin maximalists actually deeply understand that they are operating in a very hostile and uncertain world where there are things that need to be fought for, and their actions, personalities and opinions on protocol design deeply reflect that fact? What if we live in a world of honest cryptocurrencies (of which there are very few) and grifter cryptocurrencies (of which there are very many), and a healthy dose of intolerance is in fact necessary to prevent the former from sliding into the latter? That is the argument that this post will make.

We live in a dangerous world, and protecting freedom is serious business

Hopefully, this is much more obvious now than it was six weeks ago, when many people still seriously thought that Vladimir Putin is a misunderstood and kindly character who is merely trying to protect Russia and save Western Civilization from the gaypocalypse. But it's still worth repeating.
We live in a dangerous world, where there are plenty of bad-faith actors who do not listen to compassion and reason.

A blockchain is at its core a security technology - a technology that is fundamentally all about protecting people and helping them survive in such an unfriendly world. It is, like the Phial of Galadriel, "a light to you in dark places, when all other lights go out". It is not a low-cost light, or a fluorescent hippie energy-efficient light, or a high-performance light. It is a light that sacrifices on all of those dimensions to optimize for one thing and one thing only: to be a light that does what it needs to do when you're facing the toughest challenge of your life and there is a friggin twenty foot spider staring at you in the face.

Source: https://www.blackgate.com/2014/12/23/frodo-baggins-lady-galadriel-and-the-games-of-the-mighty/

Blockchains are being used every day by unbanked and underbanked people, by activists, by sex workers, by refugees, and by many other groups who are either uninteresting for profit-seeking centralized financial institutions to serve, or who have enemies that don't want them to be served. They are used as a primary lifeline by many people to make their payments and store their savings.

And to that end, public blockchains sacrifice a lot for security:

- Blockchains require each transaction to be independently verified thousands of times to be accepted.
- Unlike centralized systems that confirm transactions in a few hundred milliseconds, blockchains require users to wait anywhere from 10 seconds to 10 minutes to get a confirmation.
- Blockchains require users to be fully in charge of authenticating themselves: if you lose your key, you lose your coins.
- Blockchains sacrifice privacy, requiring even crazier and more expensive technology to get that privacy back.

What are all of these sacrifices for?
To create a system that can survive in an unfriendly world, and actually do the job of being "a light in dark places, when all other lights go out".

Excelling at that task requires two key ingredients: (i) a robust and defensible technology stack and (ii) a robust and defensible culture. The key property to have in a robust and defensible technology stack is a focus on simplicity and deep mathematical purity: a 1 MB block size, a 21 million coin limit, and a simple Nakamoto consensus proof of work mechanism that even a high school student can understand. The protocol design must be easy to justify decades and centuries down the line; the technology and parameter choices must be a work of art.

The second ingredient is the culture of uncompromising, steadfast minimalism. This must be a culture that can stand unyieldingly in defending itself against corporate and government actors trying to co-opt the ecosystem from outside, as well as bad actors inside the crypto space trying to exploit it for personal profit, of which there are many.

Now, what do Bitcoin and Ethereum culture actually look like? Well, let's ask Kevin Pham:

Don't believe this is representative? Well, let's ask Kevin Pham again:

Now, you might say, this is just Ethereum people having fun, and at the end of the day they understand what they have to do and what they are dealing with. But do they? Let's look at the kinds of people that Vitalik Buterin, the founder of Ethereum, hangs out with:

- Vitalik hangs out with elite tech CEOs in Beijing, China.
- Vitalik meets Vladimir Putin in Russia.
- Vitalik meets Nir Barkat, mayor of Jerusalem.
- Vitalik shakes hands with Argentinian former president Mauricio Macri.
- Vitalik gives a friendly hello to Eric Schmidt, former CEO of Google and advisor to the US Department of Defense.
- Vitalik has his first of many meetings with Audrey Tang, digital minister of Taiwan.

And this is only a small selection.
The immediate question that anyone looking at this should ask is: what the hell is the point of publicly meeting with all these people? Some of these people are very decent entrepreneurs and politicians, but others are actively involved in serious human rights abuses that Vitalik certainly does not support. Does Vitalik not realize just how much some of these people are geopolitically at each other's throats?

Now, maybe he is just an idealistic person who believes in talking to people to help bring about world peace, and a follower of Frederick Douglass's dictum to "unite with anybody to do right and with nobody to do wrong". But there's also a simpler hypothesis: Vitalik is a hippy-happy globetrotting pleasure and status-seeker, and he deeply enjoys meeting and feeling respected by people who are important. And it's not just Vitalik; companies like Consensys are totally happy to partner with Saudi Arabia, and the ecosystem as a whole keeps trying to look to mainstream figures for validation.

Now ask yourself the question: when the time comes and actually important things are happening on the blockchain - actually important things that offend people who are powerful - which ecosystem would be more willing to put its foot down and refuse to censor them no matter how much pressure is applied to it to do so? The ecosystem with globe-trotting nomads who really really care about being everyone's friend, or the ecosystem with people who take pictures of themselves with an AR15 and an axe as a side hobby?

Currency is not "just the first app". It's by far the most successful one.

Many people of the "blockchain, not Bitcoin" persuasion argue that cryptocurrency is the first application of blockchains, but it's a very boring one, and the true potential of blockchains lies in bigger and more exciting things.
Let's go through the list of applications in the Ethereum whitepaper:

- Issuing tokens
- Financial derivatives
- Stablecoins
- Identity and reputation systems
- Decentralized file storage
- Decentralized autonomous organizations (DAOs)
- Peer-to-peer gambling
- Prediction markets

Many of these categories have applications that have launched and that have at least some users. That said, cryptocurrency people really value empowering under-banked people in the "Global South". Which of these applications actually have lots of users in the Global South?

As it turns out, by far the most successful one is storing wealth and payments. 3% of Argentinians own cryptocurrency, as do 6% of Nigerians and 12% of people in Ukraine. By far the biggest instance of a government using blockchains to accomplish something useful today is cryptocurrency donations to the government of Ukraine, which have raised more than $100 million if you include donations to non-governmental Ukraine-related efforts.

What other application has anywhere close to that level of actual, real adoption today? Perhaps the closest is ENS.
DAOs are real and growing, but today far too many of them are appealing to wealthy rich-country people whose main interest is having fun and using cartoon-character profiles to satisfy their first-world need for self-expression, and not building schools and hospitals and solving other real world problems.

Thus, we can see the two sides pretty clearly: team "blockchain", privileged people in wealthy countries who love to virtue-signal about "moving beyond money and capitalism" and can't help being excited about "decentralized governance experimentation" as a hobby, and team "Bitcoin", a highly diverse group of both rich and poor people in many countries around the world including the Global South, who are actually using the capitalist tool of free self-sovereign money to provide real value to human beings today.

Focusing exclusively on being money makes for better money

A common misconception about why Bitcoin does not support "richly stateful" smart contracts goes as follows. Bitcoin really really values being simple, and particularly having low technical complexity, to reduce the chance that something will go wrong. As a result, it doesn't want to add the more complicated features and opcodes that are necessary to be able to support more complicated smart contracts in Ethereum.

This misconception is, of course, wrong. In fact, there are plenty of ways to add rich statefulness into Bitcoin; search for the word "covenants" in Bitcoin chat archives to see many proposals being discussed. And many of these proposals are surprisingly simple. The reason why covenants have not been added is not that Bitcoin developers see the value in rich statefulness but find even a little bit more protocol complexity intolerable. Rather, it's because Bitcoin developers are worried about the risks of the systemic complexity that rich statefulness being possible would introduce into the ecosystem!

A recent paper by Bitcoin researchers describes some ways to introduce covenants to add some degree of rich statefulness to Bitcoin.

Ethereum's battle with miner-extractable value (MEV) is an excellent example of this problem appearing in practice. It's very easy in Ethereum to build applications where the next person to interact with some contract gets a substantial reward, causing transactors and miners to fight over it, and contributing greatly to network centralization risk and requiring complicated workarounds. In Bitcoin, building such systemically risky applications is hard, in large part because Bitcoin lacks rich statefulness and focuses on the simple (and MEV-free) use case of just being money.

Systemic contagion can happen in non-technical ways too. Bitcoin just being money means that Bitcoin requires relatively few developers, helping to reduce the risk that developers will start demanding to print themselves free money to build new protocol features. Bitcoin just being money reduces pressure for core developers to keep adding features to "keep up with the competition" and "serve developers' needs".

In so many ways, systemic effects are real, and it's just not possible for a currency to "enable" an ecosystem of highly complex and risky decentralized applications without that complexity biting it back somehow. Bitcoin makes the safe choice. If Ethereum continues its layer-2-centric approach, ETH-the-currency may gain some distance from the application ecosystem that it's enabling and thereby get some protection. So-called high-performance layer-1 platforms, on the other hand, stand no chance.

In general, the earliest projects in an industry are the most "genuine"

Many industries and fields follow a similar pattern. First, some new exciting technology either gets invented, or gets a big leap of improvement to the point where it's actually usable for something.
At the beginning, the technology is still clunky, it is too risky for almost anyone to touch as an investment, and there is no "social proof" that people can use it to become successful. As a result, the first people involved are going to be the idealists, tech geeks and others who are genuinely excited about the technology and its potential to improve society.

Once the technology proves itself enough, however, the normies come in - an event that in internet culture is often called Eternal September. And these are not just regular kindly normies who want to feel part of something exciting, but business normies, wearing suits, who start scouring the ecosystem wolf-eyed for ways to make money - with armies of venture capitalists just as eager to make their own money supporting them from the sidelines. In the extreme cases, outright grifters come in, creating blockchains with no redeeming social or technical value which are basically borderline scams. But the reality is that the line from "altruistic idealist" to "grifter" is really a spectrum. And the longer an ecosystem keeps going, the harder it is for any new project on the altruistic side of the spectrum to get going.

One noisy proxy for the blockchain industry's slow replacement of philosophical and idealistic values with short-term profit-seeking values is the larger and larger size of premines: the allocations that developers of a cryptocurrency give to themselves.

Source for insider allocations: Messari.

Which blockchain communities deeply value self-sovereignty, privacy and decentralization, and are making big sacrifices to get it? And which blockchain communities are just trying to pump up their market caps and make money for founders and investors?
The above chart should make it pretty clear.

Intolerance is good

The above makes it clear why Bitcoin's status as the first cryptocurrency gives it unique advantages that are extremely difficult for any cryptocurrency created within the last five years to replicate. But now we get to the biggest objection against Bitcoin maximalist culture: why is it so toxic?

The case for Bitcoin toxicity stems from Conquest's second law. In Robert Conquest's original formulation, the law says that "any organization not explicitly and constitutionally right-wing will sooner or later become left-wing". But really, this is just a special case of a much more general pattern, and one that in the modern age of relentlessly homogenizing and conformist social media is more relevant than ever:

If you want to retain an identity that is different from the mainstream, then you need a really strong culture that actively resists and fights assimilation into the mainstream every time it tries to assert its hegemony.

Blockchains are, as I mentioned above, very fundamentally and explicitly a counterculture movement that is trying to create and preserve something different from the mainstream. At a time when the world is splitting up into great power blocs that actively suppress social and economic interaction between them, blockchains are one of the very few things that can remain global. At a time when more and more people are reaching for censorship to defeat their short-term enemies, blockchains steadfastly continue to censor nothing.

The only correct way to respond to "reasonable adults" trying to tell you that to "become mainstream" you have to compromise on your "extreme" values. Because once you compromise once, you can't stop.

Blockchain communities also have to fight bad actors on the inside.
Bad actors include:

- Scammers, who make and sell projects that are ultimately valueless (or worse, actively harmful) but cling to the "crypto" and "decentralization" brand (as well as highly abstract ideas of humanism and friendship) for legitimacy.
- Collaborationists, who publicly and loudly virtue-signal about working together with governments and actively try to convince governments to use coercive force against their competitors.
- Corporatists, who try to use their resources to take over the development of blockchains, and often push for protocol changes that enable centralization.

One could stand against all of these actors with a smiling face, politely telling the world why they "disagree with their priorities". But this is unrealistic: the bad actors will try hard to embed themselves into your community, and at that point it becomes psychologically hard to criticize them with the sufficient level of scorn that they truly require: the people you're criticizing are friends of your friends. And so any culture that values agreeableness will simply fold before the challenge, and let scammers roam freely through the wallets of innocent newbies.

What kind of culture won't fold? A culture that is willing and eager to tell both scammers on the inside and powerful opponents on the outside to go the way of the Russian warship.

Weird crusades against seed oils are good

One powerful bonding tool to help a community maintain internal cohesion around its distinctive values, and avoid falling into the morass that is the mainstream, is weird beliefs and crusades that are in a similar spirit, even if not directly related, to the core mission. Ideally, these crusades should be at least partially correct, poking at a genuine blind spot or inconsistency of mainstream values.

The Bitcoin community is good at this. Their most recent crusade is a war against seed oils, oils derived from vegetable seeds high in omega-6 fatty acids that are harmful to human health.
This Bitcoiner crusade gets treated skeptically when reviewed in the media, but the media treats the topic much more favorably when "respectable" tech firms are tackling it. The crusade helps to remind Bitcoiners that the mainstream media is fundamentally tribal and hypocritical, and so the media's shrill attempts to slander cryptocurrency as being primarily for money laundering and terrorism should be treated with the same level of scorn.

Be a maximalist

Maximalism is often derided in the media as both a dangerous toxic right-wing cult, and as a paper tiger that will disappear as soon as some other cryptocurrency comes in and takes over Bitcoin's supreme network effect. But the reality is that none of the arguments for maximalism that I describe above depend at all on network effects. Network effects really are logarithmic, not quadratic: once a cryptocurrency is "big enough", it has enough liquidity to function and multi-cryptocurrency payment processors will easily add it to their collection. But the claim that Bitcoin is an outdated pet rock and its value derives entirely from a walking-zombie network effect that just needs a little push to collapse is similarly completely wrong.

Crypto-assets like Bitcoin have real cultural and structural advantages that make them powerful assets worth holding and using. Bitcoin is an excellent example of the category, though it's certainly not the only one; other honorable cryptocurrencies do exist, and maximalists have been willing to support and use them.
Maximalism is not just Bitcoin-for-the-sake-of-Bitcoin; rather, it's a very genuine realization that most other cryptoassets are scams, and a culture of intolerance is unavoidable and necessary to protect newbies and make sure at least one corner of that space continues to be a corner worth living in.

It's better to mislead ten newbies into avoiding an investment that turns out good than it is to allow a single newbie to get bankrupted by a grifter.

It's better to make your protocol too simple and fail to serve ten low-value short-attention-span gambling applications than it is to make it too complex and fail to serve the central sound money use case that underpins everything else.

And it's better to offend millions by standing aggressively for what you believe in than it is to try to keep everyone happy and end up standing for nothing.

Be brave. Fight for your values. Be a maximalist.
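
A footnote on the claim that network effects are "logarithmic, not quadratic": the sketch below is one rough way to see the difference, not a model the post itself specifies. It assumes two stylized value functions, a Metcalfe-style total value proportional to n^2 and a total value proportional to n * log(n) (each user gaining value proportional to log(n)). The function names and the user counts are hypothetical, chosen only for illustration.

```python
import math


def quadratic_value(n: int) -> float:
    """Assumed Metcalfe-style model: total network value grows like n^2."""
    return float(n) ** 2


def logarithmic_value(n: int) -> float:
    """Assumed alternative: each user gains ~log(n), so total value ~ n * log(n)."""
    return n * math.log(n)


def per_user_advantage(value_fn, big: int, small: int) -> float:
    """How much more value one user gets on the bigger network than on the smaller one."""
    return (value_fn(big) / big) / (value_fn(small) / small)


if __name__ == "__main__":
    big, small = 100_000_000, 1_000_000  # hypothetical user counts
    print("quadratic model:  ", per_user_advantage(quadratic_value, big, small))
    print("logarithmic model:", per_user_advantage(logarithmic_value, big, small))
```

Under the quadratic assumption, a network with 100 times the users is 100 times more valuable to each user, so the incumbent is nearly unassailable. Under the logarithmic assumption the per-user advantage is only about a third larger, which matches the post's point that a currency only has to be "big enough".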

In Defense of Bitcoin Maximalism In Defense of Bitcoin Maximalism2022 Apr 01 See all posts In Defense of Bitcoin Maximalism We've been hearing for years that the future is blockchain, not Bitcoin. The future of the world won't be one major cryptocurrency, or even a few, but many cryptocurrencies - and the winning ones will have strong leadership under one central roof to adapt rapidly to users' needs for scale. Bitcoin is a boomer coin, and Ethereum is soon to follow; it will be newer and more energetic assets that attract the new waves of mass users who don't care about weird libertarian ideology or "self-sovereign verification", are turned off by toxicity and anti-government mentality, and just want blockchain defi and games that are fast and work.But what if this narrative is all wrong, and the ideas, habits and practices of Bitcoin maximalism are in fact pretty close to correct? What if Bitcoin is far more than an outdated pet rock tied to a network effect? What if Bitcoin maximalists actually deeply understand that they are operating in a very hostile and uncertain world where there are things that need to be fought for, and their actions, personalities and opinions on protocol design deeply reflect that fact? What if we live in a world of honest cryptocurrencies (of which there are very few) and grifter cryptocurrencies (of which there are very many), and a healthy dose of intolerance is in fact necessary to prevent the former from sliding into the latter? That is the argument that this post will make.We live in a dangerous world, and protecting freedom is serious businessHopefully, this is much more obvious now than it was six weeks ago, when many people still seriously thought that Vladimir Putin is a misunderstood and kindly character who is merely trying to protect Russia and save Western Civilization from the gaypocalypse. But it's still worth repeating. 
We live in a dangerous world, where there are plenty of bad-faith actors who do not listen to compassion and reason.A blockchain is at its core a security technology - a technology that is fundamentally all about protecting people and helping them survive in such an unfriendly world. It is, like the Phial of Galadriel, "a light to you in dark places, when all other lights go out". It is not a low-cost light, or a fluorescent hippie energy-efficient light, or a high-performance light. It is a light that sacrifices on all of those dimensions to optimize for one thing and one thing only: to be a light that does when it needs to do when you're facing the toughest challenge of your life and there is a friggin twenty foot spider staring at you in the face. Source: https://www.blackgate.com/2014/12/23/frodo-baggins-lady-galadriel-and-the-games-of-the-mighty/ Blockchains are being used every day by unbanked and underbanked people, by activists, by sex workers, by refugees, and by many other groups either who are uninteresting for profit-seeking centralized financial institutions to serve, or who have enemies that don't want them to be served. They are used as a primary lifeline by many people to make their payments and store their savings.And to that end, public blockchains sacrifice a lot for security:Blockchains require each transaction to be independently verified thousands of times to be accepted. Unlike centralized systems that confirm transactions in a few hundred milliseconds, blockchains require users to wait anywhere from 10 seconds to 10 minutes to get a confirmation. Blockchains require users to be fully in charge of authenticating themselves: if you lose your key, you lose your coins. Blockchains sacrifice privacy, requiring even crazier and more expensive technology to get that privacy back. What are all of these sacrifices for? 
To create a system that can survive in an unfriendly world, and actually do the job of being "a light in dark places, when all other lights go out".Excellent at that task requires two key ingredients: (i) a robust and defensible technology stack and (ii) a robust and defensible culture. The key property to have in a robust and defensible technology stack is a focus on simplicity and deep mathematical purity: a 1 MB block size, a 21 million coin limit, and a simple Nakamoto consensus proof of work mechanism that even a high school student can understand. The protocol design must be easy to justify decades and centuries down the line; the technology and parameter choices must be a work of art.The second ingredient is the culture of uncompromising, steadfast minimalism. This must be a culture that can stand unyieldingly in defending itself against corporate and government actors trying to co-opt the ecosystem from outside, as well as bad actors inside the crypto space trying to exploit it for personal profit, of which there are many.Now, what do Bitcoin and Ethereum culture actually look like? Well, let's ask Kevin Pham: Don't believe this is representative? Well, let's ask Kevin Pham again: Now, you might say, this is just Ethereum people having fun, and at the end of the day they understand what they have to do and what they are dealing with. But do they? Let's look at the kinds of people that Vitalik Buterin, the founder of Ethereum, hangs out with: Vitalik hangs out with elite tech CEOs in Beijing, China. Vitalik meets Vladimir Putin in Russia. Vitalik meets Nir Bakrat, mayor of Jerusalem. Vitalik shakes hands with Argentinian former president Mauricio Macri. Vitalik gives a friendly hello to Eric Schmidt, former CEO of Google and advisor to US Department of Defense. Vitalik has his first of many meetings with Audrey Tang, digital minister of Taiwan. And this is only a small selection. 
The immediate question that anyone looking at this should ask is: what the hell is the point of publicly meeting with all these people? Some of these people are very decent entrepreneurs and politicians, but others are actively involved in serious human rights abuses that Vitalik certainly does not support. Does Vitalik not realize just how much some of these people are geopolitically at each other's throats?Now, maybe he is just an idealistic person who believes in talking to people to help bring about world peace, and a follower of Frederick Douglass's dictum to "unite with anybody to do right and with nobody to do wrong". But there's also a simpler hypothesis: Vitalik is a hippy-happy globetrotting pleasure and status-seeker, and he deeply enjoys meeting and feeling respected by people who are important. And it's not just Vitalik; companies like Consensys are totally happy to partner with Saudi Arabia, and the ecosystem as a whole keeps trying to look to mainstream figures for validation.Now ask yourself the question: when the time comes, actually important things are happening on the blockchain - actually important things that offend people who are powerful - which ecosystem would be more willing to put its foot down and refuse to censor them no matter how much pressure is applied on them to do so? The ecosystem with globe-trotting nomads who really really care about being everyone's friend, or the ecosystem with people who take pictures of themslves with an AR15 and an axe as a side hobby?Currency is not "just the first app". It's by far the most successful one.Many people of the "blockchain, not Bitcoin" persuasion argue that cryptocurrency is the first application of blockchains, but it's a very boring one, and the true potential of blockchains lies in bigger and more exciting things. 
Let's go through the list of applications in the Ethereum whitepaper:Issuing tokens Financial derivatives Stablecoins Identity and reputation systems Decentralized file storage Decentralized autonomous organizations (DAOs) Peer-to-peer gambling Prediction markets Many of these categories have applications that have launched and that have at least some users. That said, cryptocurrency people really value empowering under-banked people in the "Global South". Which of these applications actually have lots of users in the Global South?As it turns out, by far the most successful one is storing wealth and payments. 3% of Argentinians own cryptocurrency, as do 6% of Nigerians and 12% of people in Ukraine. By far the biggest instance of a government using blockchains to accomplish something useful today is cryptocurrency donations to the government of Ukraine, which have raised more than $100 million if you include donations to non-governmental Ukraine-related efforts.What other application has anywhere close to that level of actual, real adoption today? Perhaps the closest is ENS. 
DAOs are real and growing, but today far too many of them are appealing to wealthy rich-country people whose main interest is having fun and using cartoon-character profiles to satisfy their first-world need for self-expression, and not build schools and hospitals and solve other real world problems.Thus, we can see the two sides pretty clearly: team "blockchain", privileged people in wealthy countries who love to virtue-signal about "moving beyond money and capitalism" and can't help being excited about "decentralized governance experimentation" as a hobby, and team "Bitcoin", a highly diverse group of both rich and poor people in many countries around the world including the Global South, who are actually using the capitalist tool of free self-sovereign money to provide real value to human beings today.Focusing exclusively on being money makes for better moneyA common misconception about why Bitcoin does not support "richly stateful" smart contracts goes as follows. Bitcoin really really values being simple, and particularly having low technical complexity, to reduce the chance that something will go wrong. As a result, it doesn't want to add the more complicated features and opcodes that are necessary to be able to support more complicated smart contracts in Ethereum.This misconception is, of course, wrong. In fact, there are plenty of ways to add rich statefulness into Bitcoin; search for the word "covenants" in Bitcoin chat archives to see many proposals being discussed. And many of these proposals are surprisingly simple. The reason why covenants have not been added is not that Bitcoin developers see the value in rich statefulness but find even a little bit more protocol complexity intolerable. Rather, it's because Bitcoin developers are worried about the risks of the systemic complexity that rich statefulness being possible would introduce into the ecosystem! 
A recent paper by Bitcoin researchers describes some ways to introduce covenants to add some degree of rich statefulness to Bitcoin. Ethereum's battle with miner-extractable value (MEV) is an excellent example of this problem appearing in practice. It's very easy in Ethereum to build applications where the next person to interact with some contract gets a substantial reward, causing transactors and miners to fight over it, and contributing greatly to network centralization risk and requiring complicated workarounds. In Bitcoin, building such systemically risky applications is hard, in large part because Bitcoin lacks rich statefulness and focuses on the simple (and MEV-free) use case of just being money.Systemic contagion can happen in non-technical ways too. Bitcoin just being money means that Bitcoin requires relatively few developers, helping to reduce the risk that developers will start demanding to print themselves free money to build new protocol features. Bitcoin just being money reduces pressure for core developers to keep adding features to "keep up with the competition" and "serve developers' needs".In so many ways, systemic effects are real, and it's just not possible for a currency to "enable" an ecosystem of highly complex and risky decentralized applications without that complexity biting it back somehow. Bitcoin makes the safe choice. If Ethereum continues its layer-2-centric approach, ETH-the-currency may gain some distance from the application ecosystem that it's enabling and thereby get some protection. So-called high-performance layer-1 platforms, on the other hand, stand no chance.In general, the earliest projects in an industry are the most "genuine"Many industries and fields follow a similar pattern. First, some new exciting technology either gets invented, or gets a big leap of improvement to the point where it's actually usable for something. 
At the beginning, the technology is still clunky, it is too risky for almost anyone to touch as an investment, and there is no "social proof" that people can use it to become successful. As a result, the first people involved are going to be the idealists, tech geeks and others who are genuinely excited about the technology and its potential to improve society.

Once the technology proves itself enough, however, the normies come in - an event that in internet culture is often called Eternal September. And these are not just regular kindly normies who want to feel part of something exciting, but business normies, wearing suits, who start scouring the ecosystem wolf-eyed for ways to make money - with armies of venture capitalists just as eager to make their own money supporting them from the sidelines. In the extreme cases, outright grifters come in, creating blockchains with no redeeming social or technical value which are basically borderline scams. But the reality is that the line between "altruistic idealist" and "grifter" is really a spectrum. And the longer an ecosystem keeps going, the harder it is for any new project on the altruistic side of the spectrum to get going.

One noisy proxy for the blockchain industry's slow replacement of philosophical and idealistic values with short-term profit-seeking values is the larger and larger size of premines: the allocations that developers of a cryptocurrency give to themselves.

Source for insider allocations: Messari.

Which blockchain communities deeply value self-sovereignty, privacy and decentralization, and are making big sacrifices to get it? And which blockchain communities are just trying to pump up their market caps and make money for founders and investors?
The above chart should make it pretty clear.

Intolerance is good

The above makes it clear why Bitcoin's status as the first cryptocurrency gives it unique advantages that are extremely difficult for any cryptocurrency created within the last five years to replicate. But now we get to the biggest objection against Bitcoin maximalist culture: why is it so toxic?

The case for Bitcoin toxicity stems from Conquest's second law. In Robert Conquest's original formulation, the law says that "any organization not explicitly and constitutionally right-wing will sooner or later become left-wing". But really, this is just a special case of a much more general pattern, and one that in the modern age of relentlessly homogenizing and conformist social media is more relevant than ever:

If you want to retain an identity that is different from the mainstream, then you need a really strong culture that actively resists and fights assimilation into the mainstream every time it tries to assert its hegemony.

Blockchains are, as I mentioned above, very fundamentally and explicitly a counterculture movement that is trying to create and preserve something different from the mainstream. At a time when the world is splitting up into great power blocs that actively suppress social and economic interaction between them, blockchains are one of the very few things that can remain global. At a time when more and more people are reaching for censorship to defeat their short-term enemies, blockchains steadfastly continue to censor nothing.

The only correct way to respond to "reasonable adults" trying to tell you that to "become mainstream" you have to compromise on your "extreme" values. Because once you compromise once, you can't stop.

Blockchain communities also have to fight bad actors on the inside.
Bad actors include:

- Scammers, who make and sell projects that are ultimately valueless (or worse, actively harmful) but cling to the "crypto" and "decentralization" brand (as well as highly abstract ideas of humanism and friendship) for legitimacy.
- Collaborationists, who publicly and loudly virtue-signal about working together with governments and actively try to convince governments to use coercive force against their competitors.
- Corporatists, who try to use their resources to take over the development of blockchains, and often push for protocol changes that enable centralization.

One could stand against all of these actors with a smiling face, politely telling the world why they "disagree with their priorities". But this is unrealistic: the bad actors will try hard to embed themselves into your community, and at that point it becomes psychologically hard to criticize them with the sufficient level of scorn that they truly require: the people you're criticizing are friends of your friends. And so any culture that values agreeableness will simply fold before the challenge, and let scammers roam freely through the wallets of innocent newbies.

What kind of culture won't fold? A culture that is willing and eager to tell both scammers on the inside and powerful opponents on the outside to go the way of the Russian warship.

Weird crusades against seed oils are good

One powerful bonding tool to help a community maintain internal cohesion around its distinctive values, and avoid falling into the morass that is the mainstream, is weird beliefs and crusades that are in a similar spirit, even if not directly related, to the core mission. Ideally, these crusades should be at least partially correct, poking at a genuine blind spot or inconsistency of mainstream values.

The Bitcoin community is good at this. Their most recent crusade is a war against seed oils, oils derived from vegetable seeds high in omega-6 fatty acids that are harmful to human health.
This Bitcoiner crusade gets treated skeptically when reviewed in the media, but the media treats the topic much more favorably when "respectable" tech firms are tackling it. The crusade helps to remind Bitcoiners that the mainstream media is fundamentally tribal and hypocritical, and so the media's shrill attempts to slander cryptocurrency as being primarily for money laundering and terrorism should be treated with the same level of scorn.

Be a maximalist

Maximalism is often derided in the media as both a dangerous toxic right-wing cult, and as a paper tiger that will disappear as soon as some other cryptocurrency comes in and takes over Bitcoin's supreme network effect. But the reality is that none of the arguments for maximalism that I describe above depend at all on network effects. Network effects really are logarithmic, not quadratic: once a cryptocurrency is "big enough", it has enough liquidity to function and multi-cryptocurrency payment processors will easily add it to their collection. But the claim that Bitcoin is an outdated pet rock and its value derives entirely from a walking-zombie network effect that just needs a little push to collapse is similarly completely wrong.

Crypto-assets like Bitcoin have real cultural and structural advantages that make them powerful assets worth holding and using. Bitcoin is an excellent example of the category, though it's certainly not the only one; other honorable cryptocurrencies do exist, and maximalists have been willing to support and use them.
Maximalism is not just Bitcoin-for-the-sake-of-Bitcoin; rather, it's a very genuine realization that most other cryptoassets are scams, and a culture of intolerance is unavoidable and necessary to protect newbies and make sure at least one corner of that space continues to be a corner worth living in.

It's better to mislead ten newbies into avoiding an investment that turns out good than it is to allow a single newbie to get bankrupted by a grifter.

It's better to make your protocol too simple and fail to serve ten low-value short-attention-span gambling applications than it is to make it too complex and fail to serve the central sound money use case that underpins everything else.

And it's better to offend millions by standing aggressively for what you believe in than it is to try to keep everyone happy and end up standing for nothing.

Be brave. Fight for your values. Be a maximalist.

-

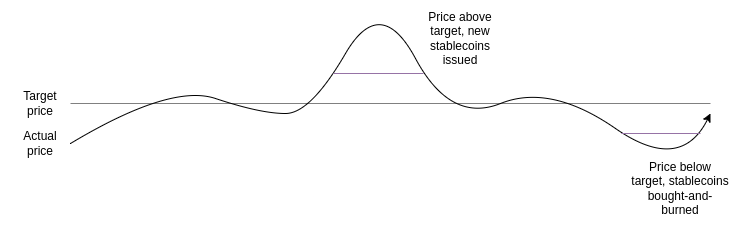

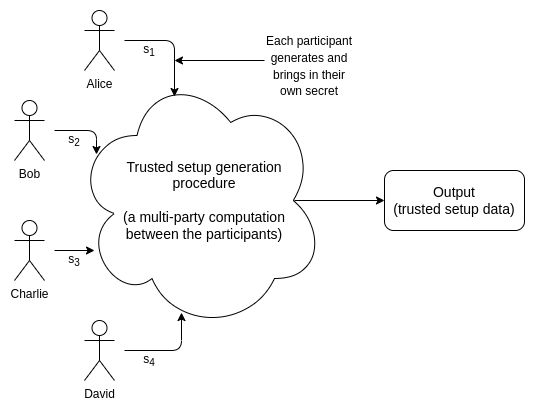

Two thought experiments to evaluate automated stablecoins

2022 May 25

Special thanks to Dan Robinson, Hayden Adams and Dankrad Feist for feedback and review.

The recent LUNA crash, which led to tens of billions of dollars of losses, has led to a storm of criticism of "algorithmic stablecoins" as a category, with many considering them to be a "fundamentally flawed product". The greater level of scrutiny on defi financial mechanisms, especially those that try very hard to optimize for "capital efficiency", is highly welcome. The greater acknowledgement that present performance is no guarantee of future returns (or even future lack-of-total-collapse) is even more welcome. Where the sentiment goes very wrong, however, is in painting all automated pure-crypto stablecoins with the same brush, and dismissing the entire category.

While there are plenty of automated stablecoin designs that are fundamentally flawed and doomed to collapse eventually, and plenty more that can survive theoretically but are highly risky, there are also many stablecoins that are highly robust in theory, and have survived extreme tests of crypto market conditions in practice. Hence, what we need is not stablecoin boosterism or stablecoin doomerism, but rather a return to principles-based thinking. So what are some good principles for evaluating whether or not a particular automated stablecoin is a truly stable one? For me, the test that I start from is asking how the stablecoin responds to two thought experiments.

Reminder: what is an automated stablecoin?

For the purposes of this post, an automated stablecoin is a system that has the following properties:

1. It issues a stablecoin, which attempts to target a particular price index. Usually, the target is 1 USD, but there are other options too.
2. There is some targeting mechanism that continuously works to push the price toward the index if it veers away in either direction. This makes ETH and BTC not stablecoins (duh).
3. The targeting mechanism is completely decentralized, and free of protocol-level dependencies on specific trusted actors. Particularly, it must not rely on asset custodians. This makes USDT and USDC not automated stablecoins.

In practice, (2) means that the targeting mechanism must be some kind of smart contract which manages some reserve of crypto-assets, and uses those crypto-assets to prop up the price if it drops.

How does Terra work?

Terra-style stablecoins (roughly the same family as seignorage shares, though many implementation details differ) work by having a pair of two coins, which we'll call a stablecoin and a volatile-coin or volcoin (in Terra, UST is the stablecoin and LUNA is the volcoin). The stablecoin retains stability using a simple mechanism:

- If the price of the stablecoin exceeds the target, the system auctions off new stablecoins (and uses the revenue to burn volcoins) until the price returns to the target.
- If the price of the stablecoin drops below the target, the system buys back and burns stablecoins (issuing new volcoins to fund the burn) until the price returns to the target.

Now what is the price of the volcoin? The volcoin's value could be purely speculative, backed by an assumption of greater stablecoin demand in the future (which would require burning volcoins to issue). Alternatively, the value could come from fees: either trading fees on stablecoin-volcoin exchange, or holding fees charged per year to stablecoin holders, or both. But in all cases, the price of the volcoin comes from the expectation of future activity in the system.

How does RAI work?

In this post I'm focusing on RAI rather than DAI because RAI better exemplifies the pure "ideal type" of a collateralized automated stablecoin, backed by ETH only.
DAI is a hybrid system backed by both centralized and decentralized collateral, which is a reasonable choice for their product but it does make analysis trickier.

In RAI, there are two main categories of participants (there's also holders of FLX, the speculative token, but they play a less important role):

- A RAI holder holds RAI, the stablecoin of the RAI system.
- A RAI lender deposits some ETH into a smart contract object called a "safe". They can then withdraw RAI up to the value of \(\frac{2}{3}\) of that ETH (eg. if 1 ETH = 100 RAI, then if you deposit 10 ETH you can withdraw up to \(10 * 100 * \frac{2}{3} \approx 667\) RAI). A lender can recover the ETH in the safe if they pay back their RAI debt.

There are two main reasons to become a RAI lender:

- To go long on ETH: if you deposit 10 ETH and withdraw 500 RAI in the above example, you end up with a position worth 500 RAI but with 10 ETH of exposure, so it goes up/down by 2% for every 1% change in the ETH price.
- Arbitrage: if you find a fiat-denominated investment that goes up faster than RAI, you can borrow RAI, put the funds into that investment, and earn a profit on the difference.

If the ETH price drops, and a safe no longer has enough collateral (meaning, the RAI debt is now more than \(\frac{2}{3}\) times the value of the ETH deposited), a liquidation event takes place. The safe gets auctioned off for anyone else to buy by putting up more collateral.

The other main mechanism to understand is redemption rate adjustment. In RAI, the target isn't a fixed quantity of USD; instead, it moves up or down, and the rate at which it moves up or down adjusts in response to market conditions:

- If the price of RAI is above the target, the redemption rate decreases, reducing the incentive to hold RAI and increasing the incentive to hold negative RAI by being a lender. This pushes the price back down.
- If the price of RAI is below the target, the redemption rate increases, increasing the incentive to hold RAI and reducing the incentive to hold negative RAI by being a lender. This pushes the price back up.

Thought experiment 1: can the stablecoin, even in theory, safely "wind down" to zero users?

In the non-crypto real world, nothing lasts forever. Companies shut down all the time, either because they never manage to find enough users in the first place, or because once-strong demand for their product is no longer there, or because they get displaced by a superior competitor. Sometimes, there are partial collapses, declines from mainstream status to niche status (eg. MySpace). Such things have to happen to make room for new products. But in the non-crypto world, when a product shuts down or declines, customers generally don't get hurt all that much. There are certainly some cases of people falling through the cracks, but on the whole shutdowns are orderly and the problem is manageable.

But what about automated stablecoins? What happens if we look at a stablecoin from the bold and radical perspective that the system's ability to avoid collapsing and losing huge amounts of user funds should not depend on a constant influx of new users? Let's see and find out!

Can Terra wind down?

In Terra, the price of the volcoin (LUNA) comes from the expectation of fees from future activity in the system. So what happens if expected future activity drops to near-zero? The market cap of the volcoin drops until it becomes quite small relative to the stablecoin.
At that point, the system becomes extremely fragile: only a small downward shock to demand for the stablecoin could lead to the targeting mechanism printing lots of volcoins, which causes the volcoin to hyperinflate, at which point the stablecoin too loses its value.

The system's collapse can even become a self-fulfilling prophecy: if it seems like a collapse is likely, this reduces the expectation of future fees that is the basis of the value of the volcoin, pushing the volcoin's market cap down, making the system even more fragile and potentially triggering that very collapse - exactly as we saw happen with Terra in May.

[Charts: LUNA price, May 8-12; UST price, May 8-12]

First, the volcoin price drops. Then, the stablecoin starts to shake. The system attempts to shore up stablecoin demand by issuing more volcoins. With confidence in the system low, there are few buyers, so the volcoin price rapidly falls. Finally, once the volcoin price is near-zero, the stablecoin too collapses.

In principle, if demand decreases extremely slowly, the volcoin's expected future fees and hence its market cap could still be large relative to the stablecoin, and so the system would continue to be stable at every step of its decline. But this kind of successful slowly-decreasing managed decline is very unlikely. What's more likely is a rapid drop in interest followed by a bang.

Safe wind-down: at every step, there's enough expected future revenue to justify enough volcoin market cap to keep the stablecoin safe at its current level.

Unsafe wind-down: at some point, there's not enough expected future revenue to justify enough volcoin market cap to keep the stablecoin safe. Collapse is likely.

Can RAI wind down?

RAI's security depends on an asset external to the RAI system (ETH), so RAI has a much easier time safely winding down. If the decline in demand is unbalanced (so, either demand for holding drops faster or demand for lending drops faster), the redemption rate will adjust to equalize the two.
The lenders are holding a leveraged position in ETH, not FLX, so there's no risk of a positive-feedback loop where reduced confidence in RAI causes demand for lending to also decrease.

If, in the extreme case, all demand for holding RAI disappears simultaneously except for one holder, the redemption rate would skyrocket until eventually every lender's safe gets liquidated. The single remaining holder would be able to buy the safe in the liquidation auction, use their RAI to immediately clear its debt, and withdraw the ETH. This gives them the opportunity to get a fair price for their RAI, paid for out of the ETH in the safe.

Another extreme case worth examining is where RAI becomes the primary application on Ethereum. In this case, a reduction in expected future demand for RAI would crater the price of ETH. In the extreme case, a cascade of liquidations is possible, leading to a messy collapse of the system. But RAI is far more robust against this possibility than a Terra-style system.

Thought experiment 2: what happens if you try to peg the stablecoin to an index that goes up 20% per year?

Currently, stablecoins tend to be pegged to the US dollar. RAI stands out as a slight exception, because its peg adjusts up or down due to the redemption rate and the peg started at 3.14 USD instead of 1 USD (the exact starting value was a concession to being normie-friendly, as a true math nerd would have chosen tau = 6.28 USD instead). But they do not have to be. You can have a stablecoin pegged to a basket of assets, a consumer price index, or some arbitrarily complex formula ("a quantity of value sufficient to buy hectares of land in the forests of Yakutia"). As long as you can find an oracle to prove the index, and people to participate on all sides of the market, you can make such a stablecoin work.

As a thought experiment to evaluate sustainability, let's imagine a stablecoin with a particular index: a quantity of US dollars that grows by 20% per year.
In math language, the index is \(1.2^{(t - t_0)}\) USD, where \(t\) is the current time in years and \(t_0\) is the time when the system launched. An even more fun alternative is \(1.04^{\frac{1}{2}*(t - t_0)^2}\) USD, so it starts off acting like a regular USD-denominated stablecoin, but the USD-denominated return rate keeps increasing by 4% every year. Obviously, there is no genuine investment that can get anywhere close to 20% returns per year, and there is definitely no genuine investment that can keep increasing its return rate by 4% per year forever. But what happens if you try?

I will claim that there's basically two ways for a stablecoin that tries to track such an index to turn out:

1. It charges some kind of negative interest rate on holders that equilibrates to basically cancel out the USD-denominated growth rate built in to the index.
2. It turns into a Ponzi, giving stablecoin holders amazing returns for some time until one day it suddenly collapses with a bang.

It should be pretty easy to understand why RAI does (1) and LUNA does (2), and so RAI is better than LUNA. But this also shows a deeper and more important fact about stablecoins: for a collateralized automated stablecoin to be sustainable, it has to somehow contain the possibility of implementing a negative interest rate. A version of RAI programmatically prevented from implementing negative interest rates (which is what the earlier single-collateral DAI basically was) would also turn into a Ponzi if tethered to a rapidly-appreciating price index.

Even outside of crazy hypotheticals where you build a stablecoin to track a Ponzi index, the stablecoin must somehow be able to respond to situations where even at a zero interest rate, demand for holding exceeds demand for borrowing.
If you don't, the price rises above the peg, and the stablecoin becomes vulnerable to price movements in both directions that are quite unpredictable.

Negative interest rates can be done in two ways:

1. RAI-style, having a floating target that can drop over time if the redemption rate is negative
2. Actually having balances decrease over time

Option (1) has the user-experience flaw that the stablecoin no longer cleanly tracks "1 USD". Option (2) has the developer-experience flaw that developers aren't used to dealing with assets where receiving N coins does not unconditionally mean that you can later send N coins. But choosing one of the two seems unavoidable - unless you go the MakerDAO route of being a hybrid stablecoin that uses both pure cryptoassets and centralized assets like USDC as collateral.

What can we learn?

In general, the crypto space needs to move away from the attitude that it's okay to achieve safety by relying on endless growth. It's certainly not acceptable to maintain that attitude by saying that "the fiat world works in the same way", because the fiat world is not attempting to offer anyone returns that go up much faster than the regular economy, outside of isolated cases that certainly should be criticized with the same ferocity.

Instead, while we certainly should hope for growth, we should evaluate how safe systems are by looking at their steady state, and even the pessimistic state of how they would fare under extreme conditions and ultimately whether or not they can safely wind down. If a system passes this test, that does not mean it's safe; it could still be fragile for other reasons (eg. insufficient collateral ratios), or have bugs or governance vulnerabilities. But steady-state and extreme-case soundness should always be one of the first things that we check for.
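For readers who want to check the arithmetic used in this post, here is a minimal Python sketch. It is not RAI's or Terra's actual contract logic: the function names are made up for illustration, and the 2/3 borrow limit is simply the figure used in the RAI example above. It reproduces the safe borrowing limit, the 2x leverage of the example lender, and the two hypothetical indices from the second thought experiment.

```python
from fractions import Fraction

# Assumption: the 2/3 borrow limit from the post's RAI example.
MAX_BORROW_FRACTION = Fraction(2, 3)


def max_rai_borrow(eth_deposited, rai_per_eth):
    """Largest RAI debt a safe can carry for a given ETH deposit (hypothetical helper)."""
    return eth_deposited * rai_per_eth * MAX_BORROW_FRACTION


def equity_change_pct(eth_deposited, rai_per_eth, rai_debt, eth_move_pct):
    """Percent change in a lender's net position for a given percent move in the ETH price."""
    collateral = eth_deposited * rai_per_eth      # collateral value in RAI
    equity = collateral - rai_debt                # net position before the move
    new_collateral = collateral * (1 + Fraction(eth_move_pct) / 100)
    new_equity = new_collateral - rai_debt        # the debt itself does not move with ETH
    return (new_equity - equity) / equity * 100


def growth_index(years):
    """Index worth 1.2^(t - t0) USD: grows by 20% per year."""
    return 1.2 ** years


def accelerating_index(years):
    """Index worth 1.04^(0.5 * (t - t0)^2) USD: the growth rate itself keeps rising."""
    return 1.04 ** (0.5 * years ** 2)


if __name__ == "__main__":
    print(float(max_rai_borrow(10, 100)))            # borrowing limit for 10 ETH at 100 RAI/ETH
    print(float(equity_change_pct(10, 100, 500, 1)))  # effect of a 1% ETH move on the lender
    for year in range(0, 6):
        print(year, growth_index(year), accelerating_index(year))
```

With 10 ETH deposited at 100 RAI per ETH, the limit comes out at roughly 667 RAI, and a lender who borrowed 500 RAI sees their net position move 2% for every 1% move in ETH, matching the figures in the text. The year-over-year ratio of the second index is \(1.04^{(t - t_0) + \frac{1}{2}}\), which is why it starts out nearly flat and then accelerates.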

Two thought experiments to evaluate automated stablecoins Two thought experiments to evaluate automated stablecoins2022 May 25 See all posts Two thought experiments to evaluate automated stablecoins Special thanks to Dan Robinson, Hayden Adams and Dankrad Feist for feedback and review.The recent LUNA crash, which led to tens of billions of dollars of losses, has led to a storm of criticism of "algorithmic stablecoins" as a category, with many considering them to be a "fundamentally flawed product". The greater level of scrutiny on defi financial mechanisms, especially those that try very hard to optimize for "capital efficiency", is highly welcome. The greater acknowledgement that present performance is no guarantee of future returns (or even future lack-of-total-collapse) is even more welcome. Where the sentiment goes very wrong, however, is in painting all automated pure-crypto stablecoins with the same brush, and dismissing the entire category.While there are plenty of automated stablecoin designs that are fundamentally flawed and doomed to collapse eventually, and plenty more that can survive theoretically but are highly risky, there are also many stablecoins that are highly robust in theory, and have survived extreme tests of crypto market conditions in practice. Hence, what we need is not stablecoin boosterism or stablecoin doomerism, but rather a return to principles-based thinking. So what are some good principles for evaluating whether or not a particular automated stablecoin is a truly stable one? For me, the test that I start from is asking how the stablecoin responds to two thought experiments.Click here to skip straight to the thought experiments.Reminder: what is an automated stablecoin?For the purposes of this post, an automated stablecoin is a system that has the following properties:It issues a stablecoin, which attempts to target a particular price index. Usually, the target is 1 USD, but there are other options too. 
There is some targeting mechanism that continuously works to push the price toward the index if it veers away in either direction. This makes ETH and BTC not stablecoins (duh). The targeting mechanism is completely decentralized, and free of protocol-level dependencies on specific trusted actors. Particularly, it must not rely on asset custodians. This makes USDT and USDC not automated stablecoins. In practice, (2) means that the targeting mechanism must be some kind of smart contract which manages some reserve of crypto-assets, and uses those crypto-assets to prop up the price if it drops.How does Terra work?Terra-style stablecoins (roughly the same family as seignorage shares, though many implementation details differ) work by having a pair of two coins, which we'll call a stablecoin and a volatile-coin or volcoin (in Terra, UST is the stablecoin and LUNA is the volcoin). The stablecoin retains stability using a simple mechanism:If the price of the stablecoin exceeds the target, the system auctions off new stablecoins (and uses the revenue to burn volcoins) until the price returns to the target If the price of the stablecoin drops below the target, the system buys back and burns stablecoins (issuing new volcoins to fund the burn) until the price returns to the target Now what is the price of the volcoin? The volcoin's value could be purely speculative, backed by an assumption of greater stablecoin demand in the future (which would require burning volcoins to issue). Alternatively, the value could come from fees: either trading fees on stablecoin volcoin exchange, or holding fees charged per year to stablecoin holders, or both. But in all cases, the price of the volcoin comes from the expectation of future activity in the system.How does RAI work?In this post I'm focusing on RAI rather than DAI because RAI better exemplifies the pure "ideal type" of a collateralized automated stablecoin, backed by ETH only. 
DAI is a hybrid system backed by both centralized and decentralized collateral, which is a reasonable choice for their product but it does make analysis trickier.In RAI, there are two main categories of participants (there's also holders of FLX, the speculative token, but they play a less important role):A RAI holder holds RAI, the stablecoin of the RAI system. A RAI lender deposits some ETH into a smart contract object called a "safe". They can then withdraw RAI up to the value of \(\frac\) of that ETH (eg. if 1 ETH = 100 RAI, then if you deposit 10 ETH you can withdraw up to \(10 * 100 * \frac \approx 667\) RAI). A lender can recover the ETH in the same if they pay back their RAI debt. There are two main reasons to become a RAI lender:To go long on ETH: if you deposit 10 ETH and withdraw 500 RAI in the above example, you end up with a position worth 500 RAI but with 10 ETH of exposure, so it goes up/down by 2% for every 1% change in the ETH price. Arbitrage if you find a fiat-denominated investment that goes up faster than RAI, you can borrow RAI, put the funds into that investment, and earn a profit on the difference. If the ETH price drops, and a safe no longer has enough collateral (meaning, the RAI debt is now more than \(\frac\) times the value of the ETH deposited), a liquidation event takes place. The safe gets auctioned off for anyone else to buy by putting up more collateral.The other main mechanism to understand is redemption rate adjustment. In RAI, the target isn't a fixed quantity of USD; instead, it moves up or down, and the rate at which it moves up or down adjusts in response to market conditions:If the price of RAI is above the target, the redemption rate decreases, reducing the incentive to hold RAI and increasing the incentive to hold negative RAI by being a lender. This pushes the price back down. 
If the price of RAI is below the target, the redemption rate increases, increasing the incentive to hold RAI and reducing the incentive to hold negative RAI by being a lender. This pushes the price back up. Thought experiment 1: can the stablecoin, even in theory, safely "wind down" to zero users?In the non-crypto real world, nothing lasts forever. Companies shut down all the time, either because they never manage to find enough users in the first place, or because once-strong demand for their product is no longer there, or because they get displaced by a superior competitor. Sometimes, there are partial collapses, declines from mainstream status to niche status (eg. MySpace). Such things have to happen to make room for new products. But in the non-crypto world, when a product shuts down or declines, customers generally don't get hurt all that much. There are certainly some cases of people falling through the cracks, but on the whole shutdowns are orderly and the problem is manageable.But what about automated stablecoins? What happens if we look at a stablecoin from the bold and radical perspective that the system's ability to avoid collapsing and losing huge amounts of user funds should not depend on a constant influx of new users? Let's see and find out!Can Terra wind down?In Terra, the price of the volcoin (LUNA) comes from the expectation of fees from future activity in the system. So what happens if expected future activity drops to near-zero? The market cap of the volcoin drops until it becomes quite small relative to the stablecoin. 
At that point, the system becomes extremely fragile: only a small downward shock to demand for the stablecoin could lead to the targeting mechanism printing lots of volcoins, which causes the volcoin to hyperinflate, at which point the stablecoin too loses its value.The system's collapse can even become a self-fulfilling prophecy: if it seems like a collapse is likely, this reduces the expectation of future fees that is the basis of the value of the volcoin, pushing the volcoin's market cap down, making the system even more fragile and potentially triggering that very collapse - exactly as we saw happen with Terra in May.LUNA price, May 8-12 UST price, May 8-12 First, the volcoin price drops. Then, the stablecoin starts to shake. The system attempts to shore up stablecoin demand by issuing more volcoins. With confidence in the system low, there are few buyers, so the volcoin price rapidly falls. Finally, once the volcoin price is near-zero, the stablecoin too collapses. In principle, if demand decreases extremely slowly, the volcoin's expected future fees and hence its market cap could still be large relative to the stablecoin, and so the system would continue to be stable at every step of its decline. But this kind of successful slowly-decreasing managed decline is very unlikely. What's more likely is a rapid drop in interest followed by a bang. Safe wind-down: at every step, there's enough expected future revenue to justify enough volcoin market cap to keep the stablecoin safe at its current level.Unsafe wind-down: at some point, there's not enough expected future revenue to justify enough volcoin market cap to keep the stablecoin safe. Collapse is likely. Can RAI wind down?RAI's security depends on an asset external to the RAI system (ETH), so RAI has a much easier time safely winding down. If the decline in demand is unbalanced (so, either demand for holding drops faster or demand for lending drops faster), the redemption rate will adjust to equalize the two. 
The lenders are holding a leveraged position in ETH, not FLX, so there's no risk of a positive-feedback loop where reduced confidence in RAI causes demand for lending to also decrease.

If, in the extreme case, all demand for holding RAI disappears simultaneously except for one holder, the redemption rate would skyrocket until eventually every lender's safe gets liquidated. The single remaining holder would be able to buy the safe in the liquidation auction, use their RAI to immediately clear its debt, and withdraw the ETH. This gives them the opportunity to get a fair price for their RAI, paid for out of the ETH in the safe.

Another extreme case worth examining is where RAI becomes the primary application on Ethereum. In this case, a reduction in expected future demand for RAI would crater the price of ETH. In the extreme case, a cascade of liquidations is possible, leading to a messy collapse of the system. But RAI is far more robust against this possibility than a Terra-style system.

Thought experiment 2: what happens if you try to peg the stablecoin to an index that goes up 20% per year?

Currently, stablecoins tend to be pegged to the US dollar. RAI stands out as a slight exception, because its peg adjusts up or down due to the redemption rate and the peg started at 3.14 USD instead of 1 USD (the exact starting value was a concession to being normie-friendly, as a true math nerd would have chosen tau = 6.28 USD instead). But stablecoins do not have to be pegged to the dollar. You can have a stablecoin pegged to a basket of assets, a consumer price index, or some arbitrarily complex formula ("a quantity of value sufficient to buy hectares of land in the forests of Yakutia"). As long as you can find an oracle to prove the index, and people to participate on all sides of the market, you can make such a stablecoin work.

As a thought experiment to evaluate sustainability, let's imagine a stablecoin with a particular index: a quantity of US dollars that grows by 20% per year.
In math language, the index is \(1.2^{(t - t_0)}\) USD, where \(t\) is the current time in years and \(t_0\) is the time when the system launched. An even more fun alternative is \(1.04^{\frac{1}{2}*(t - t_0)^2}\) USD, so it starts off acting like a regular USD-denominated stablecoin, but the USD-denominated return rate keeps increasing by 4% every year. Obviously, there is no genuine investment that can get anywhere close to 20% returns per year, and there is definitely no genuine investment that can keep increasing its return rate by 4% per year forever. But what happens if you try?

I will claim that there are basically two ways for a stablecoin that tries to track such an index to turn out:

1. It charges some kind of negative interest rate on holders that equilibrates to basically cancel out the USD-denominated growth rate built into the index.
2. It turns into a Ponzi, giving stablecoin holders amazing returns for some time until one day it suddenly collapses with a bang.

It should be pretty easy to understand why RAI does (1) and LUNA does (2), and so RAI is better than LUNA. But this also shows a deeper and more important fact about stablecoins: for a collateralized automated stablecoin to be sustainable, it has to somehow contain the possibility of implementing a negative interest rate. A version of RAI programmatically prevented from implementing negative interest rates (which is what the earlier single-collateral DAI basically was) would also turn into a Ponzi if tethered to a rapidly-appreciating price index.

Even outside of crazy hypotheticals where you build a stablecoin to track a Ponzi index, the stablecoin must somehow be able to respond to situations where even at a zero interest rate, demand for holding exceeds demand for borrowing.
If it cannot, the price rises above the peg, and the stablecoin becomes vulnerable to price movements in both directions that are quite unpredictable.

Negative interest rates can be done in two ways:

1. RAI-style, having a floating target that can drop over time if the redemption rate is negative
2. Actually having balances decrease over time

Option (1) has the user-experience flaw that the stablecoin no longer cleanly tracks "1 USD". Option (2) has the developer-experience flaw that developers aren't used to dealing with assets where receiving N coins does not unconditionally mean that you can later send N coins. But choosing one of the two seems unavoidable - unless you go the MakerDAO route of being a hybrid stablecoin that uses both pure cryptoassets and centralized assets like USDC as collateral.

What can we learn?

In general, the crypto space needs to move away from the attitude that it's okay to achieve safety by relying on endless growth. It's certainly not acceptable to maintain that attitude by saying that "the fiat world works in the same way", because the fiat world is not attempting to offer anyone returns that go up much faster than the regular economy, outside of isolated cases that certainly should be criticized with the same ferocity.

Instead, while we certainly should hope for growth, we should evaluate how safe systems are by looking at their steady state, and even the pessimistic state of how they would fare under extreme conditions and ultimately whether or not they can safely wind down. If a system passes this test, that does not mean it's safe; it could still be fragile for other reasons (eg. insufficient collateral ratios), or have bugs or governance vulnerabilities. But steady-state and extreme-case soundness should always be one of the first things that we check for.

-
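As a small illustration of the two ways of implementing negative interest rates discussed in the stablecoin post above, the following sketch compares a floating-target coin with a coin whose balances shrink. It is a toy model under stated assumptions: the class names, the fixed -2% per-period rate, and the starting target of 1 USD are all hypothetical, and RAI's actual redemption-rate controller is not modeled.

```python
from dataclasses import dataclass


@dataclass
class FloatingTargetCoin:
    """Option (1): balances never change; the target price drifts instead."""
    target_usd: float = 1.0  # hypothetical starting target

    def step(self, rate_per_period: float) -> None:
        # A negative rate lowers the target, so each coin is worth less USD.
        self.target_usd *= 1.0 + rate_per_period

    def value_usd(self, balance: float) -> float:
        return balance * self.target_usd


@dataclass
class ShrinkingBalanceCoin:
    """Option (2): the target stays at 1 USD; every balance is rescaled."""
    scale: float = 1.0  # global multiplier applied to all recorded balances

    def step(self, rate_per_period: float) -> None:
        # A negative rate shrinks every holder's balance proportionally.
        self.scale *= 1.0 + rate_per_period

    def balance_of(self, recorded_balance: float) -> float:
        return recorded_balance * self.scale

    def value_usd(self, recorded_balance: float) -> float:
        return self.balance_of(recorded_balance) * 1.0  # target fixed at 1 USD


def holder_value_after(coin, periods: int, rate: float, balance: float) -> float:
    """Apply the same rate for a number of periods, then value the holding."""
    for _ in range(periods):
        coin.step(rate)
    return coin.value_usd(balance)


if __name__ == "__main__":
    rate = -0.02  # assumed negative interest rate per period
    a = holder_value_after(FloatingTargetCoin(), 3, rate, 100.0)
    b = holder_value_after(ShrinkingBalanceCoin(), 3, rate, 100.0)
    print(f"floating target: {a:.4f} USD, shrinking balance: {b:.4f} USD")
```

In this toy model the holder ends up with the same USD value either way; the difference is only in where the change shows up (the price of one coin versus the number of coins), which is the user-experience versus developer-experience tradeoff described in the post.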

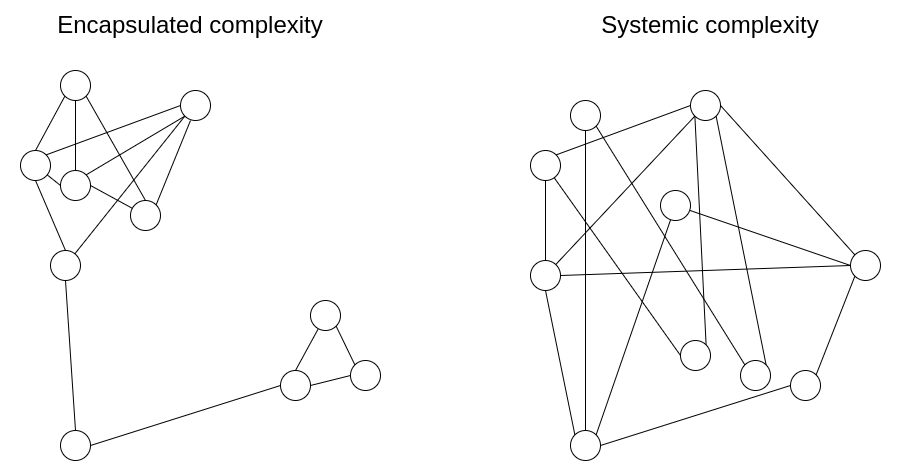

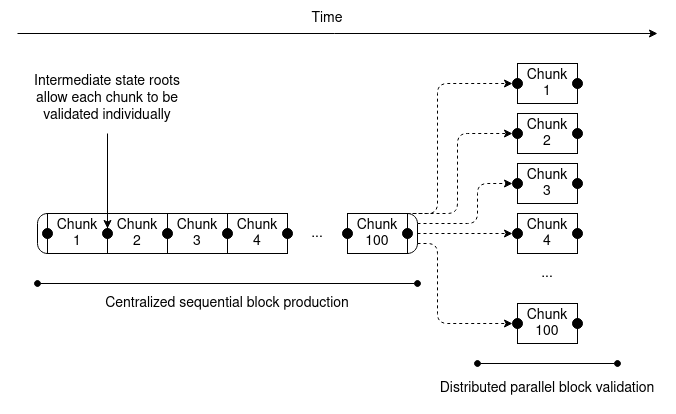

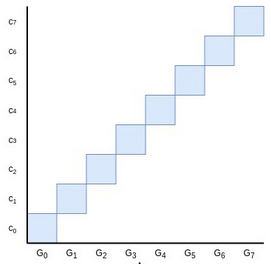

Encapsulated vs systemic complexity in protocol design

2022 Feb 28

One of the main goals of Ethereum protocol design is to minimize complexity: make the protocol as simple as possible, while still making a blockchain that can do what an effective blockchain needs to do. The Ethereum protocol is far from perfect at this, especially since much of it was designed in 2014-16 when we understood much less, but we nevertheless make an active effort to reduce complexity whenever possible.

One of the challenges of this goal, however, is that complexity is difficult to define, and sometimes, you have to trade off between two choices that introduce different kinds of complexity and have different costs. How do we compare?

One powerful intellectual tool that allows for more nuanced thinking about complexity is to draw a distinction between what we will call encapsulated complexity and systemic complexity. Encapsulated complexity occurs when there is a system with sub-systems that are internally complex, but that present a simple "interface" to the outside. Systemic complexity occurs when the different parts of a system can't even be cleanly separated, and have complex interactions with each other.

Here are a few examples.

BLS signatures vs Schnorr signatures

BLS signatures and Schnorr signatures are two popular types of cryptographic signature schemes that can be made with elliptic curves.

BLS signatures appear mathematically very simple:

Signing: \(\sigma = H(m) * k\)

Verifying: \(e([1], \sigma) \stackrel{?}{=} e(H(m), K)\)

\(H\) is a hash function, \(m\) is the message, and \(k\) and \(K\) are the private and public keys. So far, so simple. However, the true complexity is hidden inside the definition of the \(e\) function: elliptic curve pairings, one of the most devilishly hard-to-understand pieces of math in all of cryptography.

Now, consider Schnorr signatures.
Schnorr signatures rely only on basic elliptic curves. But the signing and verification logic is somewhat more complex.

So... which type of signature is "simpler"? It depends what you care about! BLS signatures have a huge amount of technical complexity, but the complexity is all buried within the definition of the \(e\) function. If you treat the \(e\) function as a black box, BLS signatures are actually really easy. Schnorr signatures, on the other hand, have less total complexity, but they have more pieces that could interact with the outside world in tricky ways.

For example:

- Doing a BLS multi-signature (a combined signature from two keys \(k_1\) and \(k_2\)) is easy: just take \(\sigma_1 + \sigma_2\). But a Schnorr multi-signature requires two rounds of interaction, and there are tricky key cancellation attacks that need to be dealt with.
- Schnorr signatures require random number generation, BLS signatures do not.

Elliptic curve pairings in general are a powerful "complexity sponge" in that they contain large amounts of encapsulated complexity, but enable solutions with much less systemic complexity. This is also true in the area of polynomial commitments: compare the simplicity of KZG commitments (which require pairings) to the much more complicated internal logic of inner product arguments (which do not).

Cryptography vs cryptoeconomics

One important design choice that appears in many blockchain designs is that of cryptography versus cryptoeconomics. Often (eg. in rollups) this comes in the form of a choice between validity proofs (aka. ZK-SNARKs) and fraud proofs.

ZK-SNARKs are complex technology. While the basic ideas behind how they work can be explained in a single post, actually implementing a ZK-SNARK to verify some computation involves many times more complexity than the computation itself (hence why ZK-SNARKs for the EVM are still under development while fraud proofs for the EVM are already in the testing stage).
Implementing a ZK-SNARK effectively involves circuit design with special-purpose optimization, working with unfamiliar programming languages, and many other challenges. Fraud proofs, on the other hand, are inherently simple: if someone makes a challenge, you just directly run the computation on-chain. For efficiency, a binary-search scheme is sometimes added, but even that doesn't add too much complexity.

But while ZK-SNARKs are complex, their complexity is encapsulated complexity. The relatively light complexity of fraud proofs, on the other hand, is systemic. Here are some examples of systemic complexity that fraud proofs introduce:

- They require careful incentive engineering to avoid the verifier's dilemma.
- If done in-consensus, they require extra transaction types for the fraud proofs, along with reasoning about what happens if many actors compete to submit a fraud proof at the same time.
- They depend on a synchronous network.
- They allow censorship attacks to be also used to commit theft.
- Rollups based on fraud proofs require liquidity providers to support instant withdrawals.

For these reasons, even from a complexity perspective, purely cryptographic solutions based on ZK-SNARKs are likely to be long-run safer: ZK-SNARKs have more complicated parts that some people have to think about, but they have fewer dangling caveats that everyone has to think about.

Miscellaneous examples

- Proof of work (Nakamoto consensus) - low encapsulated complexity, as the mechanism is extremely simple and easy to understand, but higher systemic complexity (eg. selfish mining attacks).
- Hash functions - high encapsulated complexity, but very easy-to-understand properties so low systemic complexity.
- Random shuffling algorithms - shuffling algorithms can either be internally complicated (as in Whisk) but lead to easy-to-understand guarantees of strong randomness, or internally simpler but lead to randomness properties that are weaker and more difficult to analyze (systemic complexity).
- Miner extractable value (MEV) - a protocol that is powerful enough to support complex transactions can be fairly simple internally, but those complex transactions can have complex systemic effects on the protocol's incentives by contributing to the incentive to propose blocks in very irregular ways.
- Verkle trees - Verkle trees do have some encapsulated complexity, in fact quite a bit more than plain Merkle hash trees. Systemically, however, Verkle trees present the exact same relatively clean-and-simple interface of a key-value map. The main systemic complexity "leak" is the possibility of an attacker manipulating the tree to make a particular value have a very long branch; but this risk is the same for both Verkle trees and Merkle trees.

How do we make the tradeoff?

Often, the choice with less encapsulated complexity is also the choice with less systemic complexity, and so there is one choice that is obviously simpler. But at other times, you have to make a hard choice between one type of complexity and the other. What should be clear at this point is that complexity is less dangerous if it is encapsulated. The risks from complexity of a system are not a simple function of how long the specification is; a small 10-line piece of the specification that interacts with every other piece adds more complexity than a 100-line function that is otherwise treated as a black box.

However, there are limits to this approach of preferring encapsulated complexity. Software bugs can occur in any piece of code, and as it gets bigger the probability of a bug approaches 1. Sometimes, when you need to interact with a sub-system in an unexpected and new way, complexity that was originally encapsulated can become systemic.

One example of the latter is Ethereum's current two-level state tree, which features a tree of account objects, where each account object in turn has its own storage tree.
This tree structure is complex, but at the beginning the complexity seemed to be well-encapsulated: the rest of the protocol interacts with the tree as a key/value store that you can read and write to, so we don't have to worry about how the tree is structured.

Later, however, the complexity turned out to have systemic effects: the ability of accounts to have arbitrarily large storage trees meant that there was no way to reliably expect a particular slice of the state (eg. "all accounts starting with 0x1234") to have a predictable size. This makes it harder to split up the state into pieces, complicating the design of syncing protocols and attempts to distribute the storage process. Why did encapsulated complexity become systemic? Because the interface changed. The fix? The current proposal to move to Verkle trees also includes a move to a well-balanced single-layer design for the tree.

Ultimately, which type of complexity to favor in any given situation is a question with no easy answers. The best that we can do is to have an attitude of moderately favoring encapsulated complexity, but not too much, and exercise our judgement in each specific case. Sometimes, a sacrifice of a little bit of systemic complexity to allow a great reduction of encapsulated complexity really is the best thing to do. And other times, you can even misjudge what is encapsulated and what isn't. Each situation is different.
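The state-tree example above can be made concrete with a rough sketch. The code below counts how many entries fall into each slice of the state (sliced by the first hex digit of the key) under two layouts: a two-level layout where an account's whole storage hangs off its address, and a single-layer layout where every entry gets its own hashed key. Everything here is an illustrative assumption: the `h` helper, the account counts, and the key derivation are hypothetical and are not the actual Ethereum or Verkle tree key scheme.

```python
import hashlib
from collections import Counter


def h(*parts: str) -> str:
    """Hex SHA-256 of the joined parts (a stand-in for real key derivation)."""
    return hashlib.sha256("|".join(parts).encode()).hexdigest()


def build_accounts(n_small: int = 200, big_slots: int = 10_000) -> dict[str, int]:
    """Map address -> number of storage slots; one account is very large."""
    accounts = {h("account", str(i)): 1 for i in range(n_small)}
    accounts[h("account", "big")] = big_slots
    return accounts


def two_level_slice_sizes(accounts: dict[str, int]) -> Counter:
    """Entries per slice when each account carries its own storage tree."""
    sizes: Counter = Counter()
    for address, n_slots in accounts.items():
        # The account object and all of its storage land in the same slice.
        sizes[address[0]] += 1 + n_slots
    return sizes


def single_layer_slice_sizes(accounts: dict[str, int]) -> Counter:
    """Entries per slice when every entry has its own key in one flat tree."""
    sizes: Counter = Counter()
    for address, n_slots in accounts.items():
        sizes[h(address, "header")[0]] += 1  # the account object itself
        for slot in range(n_slots):
            sizes[h(address, str(slot))[0]] += 1  # each slot is spread out
    return sizes


if __name__ == "__main__":
    accounts = build_accounts()
    for name, sizes in [("two-level", two_level_slice_sizes(accounts)),
                        ("single-layer", single_layer_slice_sizes(accounts))]:
        print(f"{name}: smallest slice {min(sizes.values())}, "
              f"largest slice {max(sizes.values())}")
```

With the two-level layout, whichever slice happens to contain the large account dwarfs all the others, so a syncing node cannot predict how much data a given slice holds. With the flat layout the slices come out roughly equal, which is the property the post attributes to a well-balanced single-layer design.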