Found 1087 results related to heyuan

-

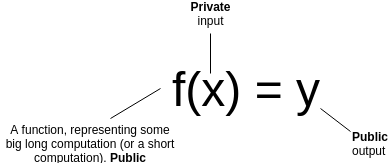

STARKs, Part I: Proofs with Polynomials

2017 Nov 09

Special thanks to Eli Ben-Sasson for ongoing help, explanations and review, coming up with some of the examples used in this post, and most crucially of all inventing a lot of this stuff; thanks to Hsiao-wei Wang for reviewing.

Hopefully many people by now have heard of ZK-SNARKs, the general-purpose succinct zero knowledge proof technology that can be used for all sorts of use cases ranging from verifiable computation to privacy-preserving cryptocurrency. What you might not know is that ZK-SNARKs have a newer, shinier cousin: ZK-STARKs. With the T standing for "transparent", ZK-STARKs resolve one of the primary weaknesses of ZK-SNARKs, their reliance on a "trusted setup". They also come with much simpler cryptographic assumptions, avoiding the need for elliptic curves, pairings and the knowledge-of-exponent assumption and instead relying purely on hashes and information theory; this also means that they are secure even against attackers with quantum computers.

However, this comes at a cost: the size of a proof goes up from 288 bytes to a few hundred kilobytes. Sometimes the cost will not be worth it, but at other times, particularly in the context of public blockchain applications where the need for trust minimization is high, it may well be. And if elliptic curves break or quantum computers do come around, it definitely will be.

So how does this other kind of zero knowledge proof work? First of all, let us review what a general-purpose succinct ZKP does. Suppose that you have a (public) function \(f\), a (private) input \(x\) and a (public) output \(y\). You want to prove that you know an \(x\) such that \(f(x) = y\), without revealing what \(x\) is. Furthermore, for the proof to be succinct, you want it to be verifiable much more quickly than computing \(f\) itself.
Let's go through a few examples:

- \(f\) is a computation that takes two weeks to run on a regular computer, but two hours in a data center. You send the data center the computation (i.e. the code to run \(f\)), the data center runs it, and gives back the answer \(y\) with a proof. You verify the proof in a few milliseconds, and are convinced that \(y\) actually is the answer.
- You have an encrypted transaction, of the form "\(X_1\) was my old balance. \(X_2\) was your old balance. \(X_3\) is my new balance. \(X_4\) is your new balance". You want to create a proof that this transaction is valid (specifically, old and new balances are non-negative, and the decrease in my balance cancels out the increase in your balance). \(x\) can be the pair of encryption keys, and \(f\) can be a function which contains as a built-in public input the transaction, takes as input the keys, decrypts the transaction, performs the check, and returns 1 if it passes and 0 if it does not. \(y\) would of course be 1.
- You have a blockchain like Ethereum, and you download the most recent block. You want a proof that this block is valid, and that this block is at the tip of a chain where every block in the chain is valid. You ask an existing full node to provide such a proof. \(x\) is the entire blockchain (yes, all ?? gigabytes of it), \(f\) is a function that processes it block by block, verifies the validity and outputs the hash of the last block, and \(y\) is the hash of the block you just downloaded.

So what's so hard about all this? As it turns out, the zero knowledge (i.e. privacy) guarantee is (relatively!) easy to provide; there are a bunch of ways to convert any computation into an instance of something like the three color graph problem, where a three-coloring of the graph corresponds to a solution of the original problem, and then use a traditional zero knowledge proof protocol to prove that you have a valid graph coloring without revealing what it is.
This excellent post by Matthew Green from 2014 describes this in some detail.

The much harder thing to provide is succinctness. Intuitively speaking, proving things about computation succinctly is hard because computation is incredibly fragile. If you have a long and complex computation, and you as an evil genie have the ability to flip a 0 to a 1 anywhere in the middle of the computation, then in many cases even one flipped bit will be enough to make the computation give a completely different result. Hence, it's hard to see how you can do something like randomly sampling a computation trace in order to gauge its correctness, as it's just too easy to miss that "one evil bit". However, with some fancy math, it turns out that you can.

The general very high level intuition is that the protocols that accomplish this use similar math to what is used in erasure coding, which is frequently used to make data fault-tolerant. If you have a piece of data, and you encode the data as a line, then you can pick out four points on the line. Any two of those four points are enough to reconstruct the original line, and therefore also give you the other two points. Furthermore, if you make even the slightest change to the data, then it is guaranteed to change at least three of those four points, because two different lines can share at most one point. You can also encode the data as a degree-1,000,000 polynomial, and pick out 2,000,000 points on the polynomial; any 1,000,001 of those points will recover the original data and therefore the other points, and any deviation in the original data will change at least 1,000,000 points. The algorithms shown here will make heavy use of polynomials in this way for error amplification.

Changing even one point in the original data will lead to large changes in a polynomial's trajectory

A Somewhat Simple Example

Suppose that you want to prove that you have a polynomial \(P\) such that \(P(x)\) is an integer with \(0 \leq P(x) \leq 9\) for all \(x\) from 1 to 1 million.
This is a simple instance of the fairly common task of "range checking"; you might imagine this kind of check being used to verify, for example, that a set of account balances is still positive after applying some set of transactions. If it were \(1 \leq P(x) \leq 9\), this could be part of checking that the values form a correct Sudoku solution.

The "traditional" way to prove this would be to just show all 1,000,000 points, and verify it by checking the values. However, we want to see if we can make a proof that can be verified in fewer than 1,000,000 steps. Simply randomly checking evaluations of \(P\) won't do; there's always the possibility that a malicious prover came up with a \(P\) which satisfies the constraint in 999,999 places but does not satisfy it in the last one, and randomly sampling only a few values will almost always miss that value. So what can we do?

Let's mathematically transform the problem somewhat. Let \(C(x)\) be a constraint checking polynomial; \(C(x) = 0\) if \(0 \leq x \leq 9\) and is nonzero otherwise. There's a simple way to construct \(C(x)\): \(x \cdot (x-1) \cdot (x-2) \cdot \ldots \cdot (x-9)\) (we'll assume all of our polynomials and other values use exclusively integers, so we don't need to worry about numbers in between). Now, the problem becomes: prove that you know \(P\) such that \(C(P(x)) = 0\) for all \(x\) from 1 to 1,000,000.

Let \(Z(x) = (x-1) \cdot (x-2) \cdot \ldots \cdot (x-1000000)\). It's a known mathematical fact that any polynomial which equals zero at all \(x\) from 1 to 1,000,000 is a multiple of \(Z(x)\). Hence, the problem can now be transformed again: prove that you know \(P\) and \(D\) such that \(C(P(x)) = Z(x) \cdot D(x)\) for all \(x\) (note that if you know a suitable \(C(P(x))\) then dividing it by \(Z(x)\) to compute \(D(x)\) is not too difficult; you can use long polynomial division or more realistically a faster algorithm based on FFTs).
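The transformation above can be tried out at toy scale. The following is a minimal sketch, not the construction used in any real STARK: it assumes a trace of only four points, an allowed range of 0 to 2 instead of 0 to 9, and exact rational arithmetic via `fractions.Fraction`. All function names (`interpolate`, `constraint_of`, `prove`, and so on) are hypothetical helpers introduced for illustration. It interpolates \(P\), forms \(C(P(x))\), and uses plain long division to obtain \(D(x)\), reporting failure when \(Z(x)\) does not divide evenly.

```python
from fractions import Fraction

# Polynomials are coefficient lists, lowest degree first.

def poly_add(a, b):
    n = max(len(a), len(b))
    return [(a[i] if i < len(a) else 0) + (b[i] if i < len(b) else 0)
            for i in range(n)]

def poly_mul(a, b):
    out = [Fraction(0)] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out

def poly_eval(p, x):
    acc = Fraction(0)
    for c in reversed(p):          # Horner's rule
        acc = acc * x + c
    return acc

def poly_divmod(num, den):
    """Long division; returns (quotient, remainder)."""
    num = list(num)
    quot = [Fraction(0)] * max(len(num) - len(den) + 1, 1)
    for i in range(len(num) - len(den), -1, -1):
        q = num[i + len(den) - 1] / den[-1]
        quot[i] = q
        for j, d in enumerate(den):
            num[i + j] -= q * d
    return quot, num[:len(den) - 1]

def interpolate(xs, ys):
    """Lagrange interpolation through the points (xs[i], ys[i])."""
    result = [Fraction(0)]
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = [Fraction(yi)]
        for j, xj in enumerate(xs):
            if j != i:
                term = poly_mul(term, [Fraction(-xj, xi - xj), Fraction(1, xi - xj)])
        result = poly_add(result, term)
    return result

def vanishing(roots):
    """Z(x) = product of (x - r) over all roots r."""
    z = [Fraction(1)]
    for r in roots:
        z = poly_mul(z, [Fraction(-r), Fraction(1)])
    return z

def constraint_of(p, allowed):
    """C(P(x)) = product of (P(x) - v) over every allowed value v."""
    out = [Fraction(1)]
    for v in allowed:
        out = poly_mul(out, poly_add(p, [Fraction(-v)]))
    return out

def prove(values, allowed):
    """Return (P, D) with C(P(x)) = Z(x) * D(x), or None if Z does not divide."""
    xs = list(range(1, len(values) + 1))
    p = interpolate(xs, values)
    d, rem = poly_divmod(constraint_of(p, allowed), vanishing(xs))
    return (p, d) if all(r == 0 for r in rem) else None
```

With these toy parameters, `prove([0, 1, 2, 1], [0, 1, 2])` yields a quotient \(D\), while `prove([0, 1, 3, 1], [0, 1, 2])` returns `None` because the out-of-range value leaves a nonzero remainder.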
Now, we've converted our original statement into something that looks mathematically clean and possibly quite provable.

So how does one prove this claim? We can imagine the proof process as a three-step communication between a prover and a verifier: the prover sends some information, then the verifier sends some requests, then the prover sends some more information. First, the prover commits to (i.e. makes a Merkle tree and sends the verifier the root hash of) the evaluations of \(P(x)\) and \(D(x)\) for all \(x\) from 1 to 1 billion (yes, billion). This includes the 1 million points where \(0 \leq P(x) \leq 9\) as well as the 999 million points where that (probably) is not the case.

We assume the verifier already knows the evaluation of \(Z(x)\) at all of these points; the \(Z(x)\) is like a "public verification key" for this scheme that everyone must know ahead of time (clients that do not have the space to store \(Z(x)\) in its entirety can simply store the Merkle root of \(Z(x)\) and require the prover to also provide branches for every \(Z(x)\) value that the verifier needs to query; alternatively, there are some number fields over which \(Z(x)\) for certain \(x\) is very easy to calculate). After receiving the commitment (i.e. Merkle root) the verifier then selects 16 random \(x\) values between 1 and 1 billion, and asks the prover to provide the Merkle branches for \(P(x)\) and \(D(x)\) there. The prover provides these values, and the verifier checks that (i) the branches match the Merkle root that was provided earlier, and (ii) \(C(P(x))\) actually equals \(Z(x) \cdot D(x)\) in all 16 cases.

We know that this proof has perfect completeness - if you actually know a suitable \(P(x)\), then if you calculate \(D(x)\) and construct the proof correctly it will always pass all 16 checks. But what about soundness - that is, if a malicious prover provides a bad \(P(x)\), what is the minimum probability that they will get caught? We can analyze as follows.
Because \(C(P(x))\) is a degree-10 polynomial composed with a degree-1,000,000 polynomial, its degree will be at most 10,000,000. In general, we know that two different degree-\(N\) polynomials agree on at most \(N\) points; hence, a degree-10,000,000 polynomial which is not equal to any polynomial which always equals \(Z(x) \cdot D(x)\) for some \(x\) will necessarily disagree with them all in at least 990,000,000 of the 1 billion points. Hence, the probability that a bad \(P(x)\) will get caught in even one round is already 99%; with 16 checks, the probability of escaping every one of them is at most \(0.01^{16} = 10^{-32}\), so the probability of getting caught goes up to \(1 - 10^{-32}\); that is to say, the scheme is about as hard to spoof as it is to compute a hash collision.

So... what did we just do? We used polynomials to "boost" the error in any bad solution, so that any incorrect solution to the original problem, which would have required a million checks to find directly, turns into a solution to the verification protocol that can get flagged as erroneous 99% of the time with even a single check.

We can convert this three-step mechanism into a non-interactive proof, which can be broadcast by a single prover once and then verified by anyone, using the Fiat-Shamir heuristic. The prover first builds up a Merkle tree of the \(P(x)\) and \(D(x)\) values, and computes the root hash of the tree. The root itself is then used as the source of entropy that determines what branches of the tree the prover needs to provide. The prover then broadcasts the Merkle root and the branches together as the proof.
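The Fiat-Shamir step described above can be illustrated with a small sketch. It is an assumption-laden toy rather than a real proof format: it uses SHA-256, requires the number of leaves to be a power of two, and derives each audited index by hashing the root together with a counter. The helper names (`merkle_layers`, `merkle_branch`, `verify_branch`, `fiat_shamir_indices`) are hypothetical and chosen only for this example.

```python
import hashlib

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_layers(leaves):
    """Build every layer of a Merkle tree; len(leaves) must be a power of two."""
    layer = [h(leaf) for leaf in leaves]
    layers = [layer]
    while len(layer) > 1:
        layer = [h(layer[i] + layer[i + 1]) for i in range(0, len(layer), 2)]
        layers.append(layer)
    return layers

def merkle_branch(layers, index):
    """Collect the sibling hashes on the path from leaf `index` to the root."""
    branch = []
    for layer in layers[:-1]:
        branch.append(layer[index ^ 1])   # sibling sits at the neighbouring position
        index //= 2
    return branch

def verify_branch(root, index, leaf, branch):
    """Recompute the root from a leaf and its branch, then compare."""
    node = h(leaf)
    for sibling in branch:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root

def fiat_shamir_indices(root, count, domain_size):
    """Derive `count` audit positions from the root alone (sampling with replacement)."""
    indices = []
    for counter in range(count):
        digest = h(root + counter.to_bytes(4, "big"))
        indices.append(int.from_bytes(digest, "big") % domain_size)
    return indices

def make_proof(leaves, count=16):
    """Prover side: commit, derive the audited indices, attach the branches."""
    layers = merkle_layers(leaves)
    root = layers[-1][0]
    audited = fiat_shamir_indices(root, count, len(leaves))
    return root, [(i, leaves[i], merkle_branch(layers, i)) for i in audited]

def check_proof(root, openings, domain_size):
    """Verifier side: re-derive the indices and check each branch against the root."""
    expected = fiat_shamir_indices(root, len(openings), domain_size)
    return all(i == e and verify_branch(root, i, leaf, branch)
               for (i, leaf, branch), e in zip(openings, expected))
```

Because the indices depend only on the root, a verifier can recompute them and reject any proof that opens different positions. A full verifier would additionally check \(C(P(x)) = Z(x) \cdot D(x)\) at each opened position, which this sketch leaves out.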
The computation is all done on the prover side; the process of computing the Merkle root from the data, and then using that to select the branches that get audited, effectively substitutes for the need for an interactive verifier.

The only thing a malicious prover without a valid \(P(x)\) can do is try to make a valid proof over and over again until eventually they get extremely lucky with the branches that a Merkle root that they compute selects, but with a soundness of \(1 - 10^{-32}\) (i.e. probability of at least \(1 - 10^{-32}\) that a given attempted fake proof will fail the check) it would take a malicious prover billions of years to make a passable proof.

Going Further

To illustrate the power of this technique, let's use it to do something a little less trivial: prove that you know the millionth Fibonacci number. To accomplish this, we'll prove that you have knowledge of a polynomial which represents a computation tape, with \(P(x)\) representing the \(x\)th Fibonacci number. The constraint checking polynomial will now hop across three x-coordinates: \(C(x_1, x_2, x_3) = x_3-x_2-x_1\) (notice how if \(C(P(x), P(x+1), P(x+2)) = 0\) for all \(x\) then \(P(x)\) represents a Fibonacci sequence).

The translated problem becomes: prove that you know \(P\) and \(D\) such that \(C(P(x), P(x+1), P(x+2)) = Z(x) \cdot D(x)\). For each of the 16 indices that the proof audits, the prover will need to provide Merkle branches for \(P(x)\), \(P(x+1)\), \(P(x+2)\) and \(D(x)\). The prover will additionally need to provide Merkle branches to show that \(P(0) = P(1) = 1\). Otherwise, the entire process is the same.

Now, to accomplish this in reality there are two problems that need to be resolved. The first problem is that if we actually try to work with regular numbers the solution would not be efficient in practice, because the numbers themselves very easily get extremely large. The millionth Fibonacci number, for example, has 208988 digits.
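As a rough illustration of the three-point Fibonacci constraint, the sketch below checks \(C(x_1, x_2, x_3) = x_3 - x_2 - x_1\) directly on a short computation tape. It works at the level of raw trace values only, with no polynomials or Merkle commitments, and it reduces values modulo \(2^{31} - 1\) to keep them small; that modulus and the names `fib_trace`, `violated_steps` and `boundary_ok` are assumptions made for this example.

```python
MODULUS = 2**31 - 1  # an arbitrary prime, assumed here only to keep values small

def fib_trace(length):
    """Computation tape with trace[0] = trace[1] = 1, reduced modulo MODULUS."""
    trace = [1, 1]
    while len(trace) < length:
        trace.append((trace[-1] + trace[-2]) % MODULUS)
    return trace[:length]

def constraint(x1, x2, x3):
    """C(x1, x2, x3) = x3 - x2 - x1; zero exactly when the step is valid."""
    return (x3 - x2 - x1) % MODULUS

def violated_steps(trace):
    """Indices i where C(trace[i], trace[i+1], trace[i+2]) is nonzero."""
    return [i for i in range(len(trace) - 2)
            if constraint(trace[i], trace[i + 1], trace[i + 2]) != 0]

def boundary_ok(trace):
    """Boundary constraint P(0) = P(1) = 1."""
    return trace[0] == 1 and trace[1] == 1

honest = fib_trace(20)
corrupted = list(honest)
corrupted[10] = (corrupted[10] + 1) % MODULUS  # flip a single value mid-trace
```

Here `violated_steps(honest)` is empty, while `violated_steps(corrupted)` contains only the three steps that touch position 10. That is the fragility discussed earlier: at the level of the raw trace a single bad value is easy to miss by sampling, which is why the protocol checks the polynomial identity over a much larger domain instead.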
If we actually want to achieve succinctness in practice, instead of doing these polynomials with regular numbers, we need to use finite fields - number systems that still follow the same arithmetic laws we know and love, like \(a \cdot (b+c) = (a\cdot b) + (a\cdot c)\) and \((a^2 - b^2) = (a-b) \cdot (a+b)\), but where each number is guaranteed to take up a constant amount of space. Proving claims about the millionth Fibonacci number would then require a more complicated design that implements big-number arithmetic on top of this finite field math.

The simplest possible finite field is modular arithmetic; that is, replace every instance of \(a + b\) with \(a + b \mod N\) for some prime \(N\), do the same for subtraction and multiplication, and for division use modular inverses (e.g. if \(N = 7\), then \(3 + 4 = 0\), \(2 + 6 = 1\), \(3 \cdot 4 = 5\), \(4 / 2 = 2\) and \(5 / 2 = 6\)). You can learn more about these kinds of number systems from my description on prime fields here (search "prime field" in the page) or this Wikipedia article on modular arithmetic (the articles that you'll find by searching directly for "finite fields" and "prime fields" unfortunately tend to be very complicated and go straight into abstract algebra, don't bother with those).

Second, you might have noticed that in my above proof sketch for soundness I neglected to cover one kind of attack: what if, instead of a plausible degree-1,000,000 \(P(x)\) and degree-9,000,000 \(D(x)\), the attacker commits to some values that are not on any such relatively-low-degree polynomial? Then, the argument that an invalid \(C(P(x))\) must differ from any valid \(C(P(x))\) on at least 990 million points does not apply, and so different and much more effective kinds of attacks are possible. For example, an attacker could generate a random value \(p\) for every \(x\), then compute \(d = C(p) / Z(x)\) and commit to these values in place of \(P(x)\) and \(D(x)\).
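The attack just described can be sketched in a few lines over a prime field. This is an illustration under stated assumptions, not a real attack tool: the modulus \(2^{31} - 1\), the ten-point trace and the 100-point evaluation domain are scaled-down stand-ins, and the names `field_div`, `forge` and `spot_check` are hypothetical. Division uses Python's built-in modular inverse `pow(b, -1, n)` (available from Python 3.8). At the few \(x\) where \(Z(x) = 0\) the division is undefined, so this sketch simply picks a \(p\) with \(C(p) = 0\) there; that detail is an assumption added for the example.

```python
import random

N = 2**31 - 1            # prime modulus (assumed for this sketch)
TRACE_LEN = 10           # Z(x) vanishes on x = 1..10
DOMAIN = range(1, 101)   # scaled-down evaluation domain

def field_div(a, b, n=N):
    """a / b in the prime field of size n, via the modular inverse of b."""
    return a * pow(b, -1, n) % n

def C(v):
    """Range constraint v * (v-1) * ... * (v-9), reduced mod N."""
    out = 1
    for k in range(10):
        out = out * (v - k) % N
    return out

def Z(x):
    """Vanishing polynomial (x-1) * ... * (x-TRACE_LEN), reduced mod N."""
    out = 1
    for k in range(1, TRACE_LEN + 1):
        out = out * (x - k) % N
    return out

def forge(rng):
    """Pick p independently at every x, then solve for d = C(p) / Z(x)."""
    p_vals, d_vals = {}, {}
    for x in DOMAIN:
        z = Z(x)
        if z == 0:
            # Division is undefined here; choose p with C(p) = 0 instead.
            p_vals[x] = rng.randrange(10)
            d_vals[x] = rng.randrange(N)
        else:
            p_vals[x] = rng.randrange(N)
            d_vals[x] = field_div(C(p_vals[x]), z)
    return p_vals, d_vals

def spot_check(p_vals, d_vals, x):
    """The verifier's per-index test: C(P(x)) == Z(x) * D(x)."""
    return C(p_vals[x]) == Z(x) * d_vals[x] % N

forged_p, forged_d = forge(random.Random(0))
```

Every index in `DOMAIN` passes `spot_check` for the forged values, even though they were chosen independently at each point. The same `field_div` helper reproduces the \(N = 7\) examples: `field_div(4, 2, 7)` is 2 and `field_div(5, 2, 7)` is 6.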
These values would not be on any kind of low-degree polynomial, but they would pass the test.

It turns out that this possibility can be effectively defended against, though the tools for doing so are fairly complex, and so you can quite legitimately say that they make up the bulk of the mathematical innovation in STARKs. Also, the solution has a limitation: you can weed out commitments to data that are very far from any degree-1,000,000 polynomial (e.g. you would need to change 20% of all the values to make it a degree-1,000,000 polynomial), but you cannot weed out commitments to data that differ from a polynomial in only one or two coordinates. Hence, what these tools will provide is proof of proximity - proof that most of the points on \(P\) and \(D\) correspond to the right kind of polynomial.

As it turns out, this is sufficient to make a proof, though there are two "catches". First, the verifier needs to check a few more indices to make up for the additional room for error that this limitation introduces. Second, if we are doing "boundary constraint checking" (e.g. verifying \(P(0) = P(1) = 1\) in the Fibonacci example above), then we need to extend the proof of proximity to not only prove that most points are on the same polynomial, but also prove that those two specific points (or whatever other number of specific points you want to check) are on that polynomial.

In the next part of this series, I will describe the solution to proximity checking in much more detail, and in the third part I will describe how more complex constraint functions can be constructed to check not just Fibonacci numbers and ranges, but also arbitrary computation.

STARKs, Part I: Proofs with Polynomials STARKs, Part I: Proofs with Polynomials2017 Nov 09 See all posts STARKs, Part I: Proofs with Polynomials Special thanks to Eli Ben-Sasson for ongoing help, explanations and review, coming up with some of the examples used in this post, and most crucially of all inventing a lot of this stuff; thanks to Hsiao-wei Wang for reviewingHopefully many people by now have heard of ZK-SNARKs, the general-purpose succinct zero knowledge proof technology that can be used for all sorts of usecases ranging from verifiable computation to privacy-preserving cryptocurrency. What you might not know is that ZK-SNARKs have a newer, shinier cousin: ZK-STARKs. With the T standing for "transparent", ZK-STARKs resolve one of the primary weaknesses of ZK-SNARKs, its reliance on a "trusted setup". They also come with much simpler cryptographic assumptions, avoiding the need for elliptic curves, pairings and the knowledge-of-exponent assumption and instead relying purely on hashes and information theory; this also means that they are secure even against attackers with quantum computers.However, this comes at a cost: the size of a proof goes up from 288 bytes to a few hundred kilobytes. Sometimes the cost will not be worth it, but at other times, particularly in the context of public blockchain applications where the need for trust minimization is high, it may well be. And if elliptic curves break or quantum computers do come around, it definitely will be.So how does this other kind of zero knowledge proof work? First of all, let us review what a general-purpose succinct ZKP does. Suppose that you have a (public) function \(f\), a (private) input \(x\) and a (public) output \(y\). You want to prove that you know an \(x\) such that \(f(x) = y\), without revealing what \(x\) is. Furthermore, for the proof to be succinct, you want it to be verifiable much more quickly than computing \(f\) itself. 
Let's go through a few examples:\(f\) is a computation that takes two weeks to run on a regular computer, but two hours on a data center. You send the data center the computation (ie. the code to run \(f\) ), the data center runs it, and gives back the answer \(y\) with a proof. You verify the proof in a few milliseconds, and are convinced that \(y\) actually is the answer. You have an encrypted transaction, of the form "\(X_1\) was my old balance. \(X_2\) was your old balance. \(X_3\) is my new balance. \(X_4\) is your new balance". You want to create a proof that this transaction is valid (specifically, old and new balances are non-negative, and the decrease in my balance cancels out the increase in your balance). \(x\) can be the pair of encryption keys, and \(f\) can be a function which contains as a built-in public input the transaction, takes as input the keys, decrypts the transaction, performs the check, and returns 1 if it passes and 0 if it does not. \(y\) would of course be 1. You have a blockchain like Ethereum, and you download the most recent block. You want a proof that this block is valid, and that this block is at the tip of a chain where every block in the chain is valid. You ask an existing full node to provide such a proof. \(x\) is the entire blockchain (yes, all ?? gigabytes of it), \(f\) is a function that processes it block by block, verifies the validity and outputs the hash of the last block, and \(y\) is the hash of the block you just downloaded. So what's so hard about all this? As it turns out, the zero knowledge (ie. privacy) guarantee is (relatively!) easy to provide; there are a bunch of ways to convert any computation into an instance of something like the three color graph problem, where a three-coloring of the graph corresponds to a solution of the original problem, and then use a traditional zero knowledge proof protocol to prove that you have a valid graph coloring without revealing what it is. 
This excellent post by Matthew Green from 2014 describes this in some detail.The much harder thing to provide is succinctness. Intuitively speaking, proving things about computation succinctly is hard because computation is incredibly fragile. If you have a long and complex computation, and you as an evil genie have the ability to flip a 0 to a 1 anywhere in the middle of the computation, then in many cases even one flipped bit will be enough to make the computation give a completely different result. Hence, it's hard to see how you can do something like randomly sampling a computation trace in order to gauge its correctness, as it's just too easy to miss that "one evil bit". However, with some fancy math, it turns out that you can.The general very high level intuition is that the protocols that accomplish this use similar math to what is used in erasure coding, which is frequently used to make data fault-tolerant. If you have a piece of data, and you encode the data as a line, then you can pick out four points on the line. Any two of those four points are enough to reconstruct the original line, and therefore also give you the other two points. Furthermore, if you make even the slightest change to the data, then it is guaranteed at least three of those four points. You can also encode the data as a degree-1,000,000 polynomial, and pick out 2,000,000 points on the polynomial; any 1,000,001 of those points will recover the original data and therefore the other points, and any deviation in the original data will change at least 1,000,000 points. The algorithms shown here will make heavy use of polynomials in this way for error amplification. Changing even one point in the original data will lead to large changes in a polynomial's trajectoryA Somewhat Simple ExampleSuppose that you want to prove that you have a polynomial \(P\) such that \(P(x)\) is an integer with \(0 \leq P(x) \leq 9\) for all \(x\) from 1 to 1 million. 
This is a simple instance of the fairly common task of "range checking"; you might imagine this kind of check being used to verify, for example, that a set of account balances is still positive after applying some set of transactions. If it were \(1 \leq P(x) \leq 9\), this could be part of checking that the values form a correct Sudoku solution.The "traditional" way to prove this would be to just show all 1,000,000 points, and verify it by checking the values. However, we want to see if we can make a proof that can be verified in less than 1,000,000 steps. Simply randomly checking evaluations of \(P\) won't do; there's always the possibility that a malicious prover came up with a \(P\) which satisfies the constraint in 999,999 places but does not satisfy it in the last one, and random sampling only a few values will almost always miss that value. So what can we do? Let's mathematically transform the problem somewhat. Let \(C(x)\) be a constraint checking polynomial; \(C(x) = 0\) if \(0 \leq x \leq 9\) and is nonzero otherwise. There's a simple way to construct \(C(x)\): \(x \cdot (x-1) \cdot (x-2) \cdot \ldots(x-9)\) (we'll assume all of our polynomials and other values use exclusively integers, so we don't need to worry about numbers in between). Now, the problem becomes: prove that you know \(P\) such that \(C(P(x)) = 0\) for all \(x\) from 1 to 1,000,000. Let \(Z(x) = (x-1) \cdot (x-2) \cdot \ldots (x-1000000)\). It's a known mathematical fact that any polynomial which equals zero at all \(x\) from 1 to 1,000,000 is a multiple of \(Z(x)\). Hence, the problem can now be transformed again: prove that you know \(P\) and \(D\) such that \(C(P(x)) = Z(x) \cdot D(x)\) for all \(x\) (note that if you know a suitable \(C(P(x))\) then dividing it by \(Z(x)\) to compute \(D(x)\) is not too difficult; you can use long polynomial division or more realistically a faster algorithm based on FFTs). 
Now, we've converted our original statement into something that looks mathematically clean and possibly quite provable.So how does one prove this claim? We can imagine the proof process as a three-step communication between a prover and a verifier: the prover sends some information, then the verifier sends some requests, then the prover sends some more information. First, the prover commits to (ie. makes a Merkle tree and sends the verifier the root hash of) the evaluations of \(P(x)\) and \(D(x)\) for all \(x\) from 1 to 1 billion (yes, billion). This includes the 1 million points where \(0 \leq P(x) \leq 9\) as well as the 999 million points where that (probably) is not the case. We assume the verifier already knows the evaluation of \(Z(x)\) at all of these points; the \(Z(x)\) is like a "public verification key" for this scheme that everyone must know ahead of time (clients that do not have the space to store \(Z(x)\) in its entirety can simply store the Merkle root of \(Z(x)\) and require the prover to also provide branches for every \(Z(x)\) value that the verifier needs to query; alternatively, there are some number fields over which \(Z(x)\) for certain \(x\) is very easy to calculate). After receiving the commitment (ie. Merkle root) the verifier then selects a random 16 \(x\) values between 1 and 1 billion, and asks the prover to provide the Merkle branches for \(P(x)\) and \(D(x)\) there. The prover provides these values, and the verifier checks that (i) the branches match the Merkle root that was provided earlier, and (ii) \(C(P(x))\) actually equals \(Z(x) \cdot D(x)\) in all 16 cases. We know that this proof perfect completeness - if you actually know a suitable \(P(x)\), then if you calculate \(D(x)\) and construct the proof correctly it will always pass all 16 checks. But what about soundness - that is, if a malicious prover provides a bad \(P(x)\), what is the minimum probability that they will get caught? We can analyze as follows. 
Because \(C(P(x))\) is a degree-10 polynomial composed with a degree-1,000,000 polynomial, its degree will be at most 10,000,000. In general, we know that two different degree-\(N\) polynomials agree on at most \(N\) points; hence, a degree-10,000,000 polynomial which is not equal to any polynomial which always equals \(Z(x) \cdot D(x)\) for some \(x\) will necessarily disagree with them all at at least 990,000,000 points. Hence, the probability that a bad \(P(x)\) will get caught in even one round is already 99%; with 16 checks, the probability of getting caught goes up to \(1 - 10^\); that is to say, the scheme is about as hard to spoof as it is to compute a hash collision.So... what did we just do? We used polynomials to "boost" the error in any bad solution, so that any incorrect solution to the original problem, which would have required a million checks to find directly, turns into a solution to the verification protocol that can get flagged as erroneous at 99% of the time with even a single check.We can convert this three-step mechanism into a non-interactive proof, which can be broadcasted by a single prover once and then verified by anyone, using the Fiat-Shamir heuristic. The prover first builds up a Merkle tree of the \(P(x)\) and \(D(x)\) values, and computes the root hash of the tree. The root itself is then used as the source of entropy that determines what branches of the tree the prover needs to provide. The prover then broadcasts the Merkle root and the branches together as the proof. 
The computation is all done on the prover side; the process of computing the Merkle root from the data, and then using that to select the branches that get audited, effectively substitutes the need for an interactive verifier.The only thing a malicious prover without a valid \(P(x)\) can do is try to make a valid proof over and over again until eventually they get extremely lucky with the branches that a Merkle root that they compute selects, but with a soundness of \(1 - 10^\) (ie. probability of at least \(1 - 10^\) that a given attempted fake proof will fail the check) it would take a malicious prover billions of years to make a passable proof. Going FurtherTo illustrate the power of this technique, let's use it to do something a little less trivial: prove that you know the millionth Fibonacci number. To accomplish this, we'll prove that you have knowledge of a polynomial which represents a computation tape, with \(P(x)\) representing the \(x\)th Fibonacci number. The constraint checking polynomial will now hop across three x-coordinates: \(C(x_1, x_2, x_3) = x_3-x_2-x_1\) (notice how if \(C(P(x), P(x+1), P(x+2)) = 0\) for all \(x\) then \(P(x)\) represents a Fibonacci sequence). The translated problem becomes: prove that you know \(P\) and \(D\) such that \(C(P(x), P(x+1), P(x+2)) = Z(x) \cdot D(x)\). For each of the 16 indices that the proof audits, the prover will need to provide Merkle branches for \(P(x)\), \(P(x+1)\), \(P(x+2)\) and \(D(x)\). The prover will additionally need to provide Merkle branches to show that \(P(0) = P(1) = 1\). Otherwise, the entire process is the same.Now, to accomplish this in reality there are two problems that need to be resolved. The first problem is that if we actually try to work with regular numbers the solution would not be efficient in practice, because the numbers themselves very easily get extremely large. The millionth Fibonacci number, for example, has 208988 digits. 
If we actually want to achieve succinctness in practice, instead of doing these polynomials with regular numbers, we need to use finite fields - number systems that still follow the same arithmetic laws we know and love, like \(a \cdot (b+c) = (a\cdot b) + (a\cdot c)\) and \((a^2 - b^2) = (a-b) \cdot (a+b)\), but where each number is guaranteed to take up a constant amount of space. Proving claims about the millionth Fibonacci number would then require a more complicated design that implements big-number arithmetic on top of this finite field math.The simplest possible finite field is modular arithmetic; that is, replace every instance of \(a + b\) with \(a + b \mod\) for some prime \(N\), do the same for subtraction and multiplication, and for division use modular inverses (eg. if \(N = 7\), then \(3 + 4 = 0\), \(2 + 6 = 1\), \(3 \cdot 4 = 5\), \(4 / 2 = 2\) and \(5 / 2 = 6\)). You can learn more about these kinds of number systems from my description on prime fields here (search "prime field" in the page) or this Wikipedia article on modular arithmetic (the articles that you'll find by searching directly for "finite fields" and "prime fields" unfortunately tend to be very complicated and go straight into abstract algebra, don't bother with those).Second, you might have noticed that in my above proof sketch for soundness I neglected to cover one kind of attack: what if, instead of a plausible degree-1,000,000 \(P(x)\) and degree-9,000,000 \(D(x)\), the attacker commits to some values that are not on any such relatively-low-degree polynomial? Then, the argument that an invalid \(C(P(x))\) must differ from any valid \(C(P(x))\) on at least 990 million points does not apply, and so different and much more effective kinds of attacks are possible. For example, an attacker could generate a random value \(p\) for every \(x\), then compute \(d = C(p) / Z(x)\) and commit to these values in place of \(P(x)\) and \(D(x)\). 
These values would not be on any kind of low-degree polynomial, but they would pass the test.

It turns out that this possibility can be effectively defended against, though the tools for doing so are fairly complex, and so you can quite legitimately say that they make up the bulk of the mathematical innovation in STARKs. Also, the solution has a limitation: you can weed out commitments to data that are very far from any degree-1,000,000 polynomial (eg. you would need to change 20% of all the values to make it a degree-1,000,000 polynomial), but you cannot weed out commitments to data that differ from a polynomial in only one or two coordinates. Hence, what these tools will provide is proof of proximity - proof that most of the points on \(P\) and \(D\) correspond to the right kind of polynomial.

As it turns out, this is sufficient to make a proof, though there are two "catches". First, the verifier needs to check a few more indices to make up for the additional room for error that this limitation introduces. Second, if we are doing "boundary constraint checking" (eg. verifying \(P(0) = P(1) = 1\) in the Fibonacci example above), then we need to extend the proof of proximity to not only prove that most points are on the same polynomial, but also prove that those two specific points (or whatever other number of specific points you want to check) are on that polynomial.

In the next part of this series, I will describe the solution to proximity checking in much more detail, and in the third part I will describe how more complex constraint functions can be constructed to check not just Fibonacci numbers and ranges, but also arbitrary computation.
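As a rough illustration of the finite field arithmetic and the Fibonacci constraint \(C(x_1, x_2, x_3) = x_3 - x_2 - x_1\) discussed in this post, here is a minimal Python sketch. It checks the computation tape directly rather than through polynomials and Merkle branches, so it is not a STARK. The helper names (`add`, `mul`, `div`, `fib_trace`, `trace_is_valid`) and the modulus `1000003` are assumptions chosen for the example, not anything taken from a real implementation.

```python
def add(a, b, n):
    """Addition in the prime field of integers modulo n."""
    return (a + b) % n


def mul(a, b, n):
    """Multiplication in the prime field of integers modulo n."""
    return (a * b) % n


def div(a, b, n):
    """Division: multiply by the modular inverse of b.

    Uses Fermat's little theorem, so n must be prime and b nonzero mod n.
    """
    return (a * pow(b, n - 2, n)) % n


def fib_trace(steps, n):
    """Computation tape: trace[x] is the x-th Fibonacci number modulo n."""
    trace = [1, 1]
    for _ in range(steps - 2):
        trace.append((trace[-1] + trace[-2]) % n)
    return trace


def constraint(x1, x2, x3, n):
    """C(x1, x2, x3) = x3 - x2 - x1, evaluated modulo n."""
    return (x3 - x2 - x1) % n


def trace_is_valid(trace, n):
    """Check the boundary constraint P(0) = P(1) = 1 and C = 0 at every step."""
    boundary_ok = trace[0] == 1 and trace[1] == 1
    steps_ok = all(
        constraint(trace[i], trace[i + 1], trace[i + 2], n) == 0
        for i in range(len(trace) - 2)
    )
    return boundary_ok and steps_ok


if __name__ == "__main__":
    # The N = 7 examples from the text.
    print(add(3, 4, 7), add(2, 6, 7), mul(3, 4, 7), div(4, 2, 7), div(5, 2, 7))
    # Every entry of the tape stays below the modulus, however long it gets.
    tape = fib_trace(1000, 1000003)
    print(trace_is_valid(tape, 1000003), max(tape) < 1000003)
```

Because every value is reduced modulo the field size, each tape entry takes a constant amount of space. The price is that the tape now holds Fibonacci numbers modulo that prime rather than the true 208988-digit value.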

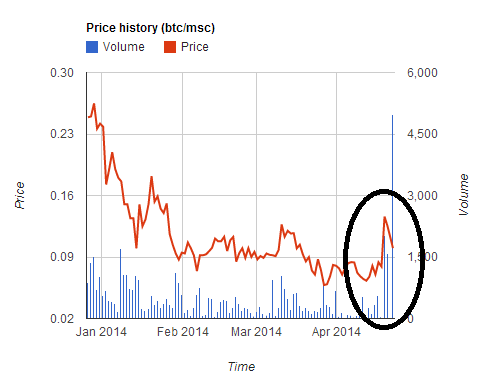

On Medium-of-Exchange Token Valuations

2017 Oct 17

One kind of token model that has become popular among many recent token sale projects is the "network medium of exchange token". The general pitch for this kind of token goes as follows. We, the developers, build a network, and this network allows you to do new cool stuff. This network is a sharing-economy-style system: it consists purely of a set of sellers that provide resources within some protocol, and buyers that purchase the services, where both buyers and sellers come from the community. But the purchase and sale of things within this network must be done with the new token that we're selling, and this is why the token will have value.

If it were the developers themselves that were acting as the seller, then this would be a very reasonable and normal arrangement, very similar in nature to a Kickstarter-style product sale. The token actually would, in a meaningful economic sense, be backed by the services that are provided by the developers.

We can see this in more detail by describing what is going on in a simple economic model. Suppose that \(N\) people value a product that a developer wants to release at \(\$x\), and believe the developer will give them the product. The developer does a sale, and sells \(N\) units for \(\$w < x\) each, thus raising a total revenue of \(\$Nw\). The developer builds the product, and gives it to each of the buyers. At the end of the day, the buyers are happy, and the developer is happy. Nobody feels like they made an avoidable mistake in participating, and everyone's expectations have been met. This kind of economic model is clearly stable.

Now, let's look at the story with a "medium of exchange" token. \(N\) people value a product that will exist in a decentralized network at \(\$x\); the product will be sold at a price of \(\$w < x\). They each buy \(\$w\) of tokens in the sale.
The developer builds the network. Some sellers come in, and offer the product inside the network for \(\$w\). The buyers use their tokens to purchase this product, spending \(\$w\) of tokens and getting \(\$x\) of value. The sellers spend \(\$v < w\) of resources and effort producing this product, and they now have \(\$w\) worth of tokens.

Notice that here, the cycle is not complete, and in fact it never will be; there needs to be an ongoing stream of buyers and sellers for the token to continue having its value. The stream does not strictly speaking have to be endless; if in every round there is a chance of at least \(\frac{v}{w}\) that there will be a next round, then the model still works, as even though someone will eventually be cheated, the risk of any individual participant becoming that person is lower than the benefit that they get from participating. It's also totally possible that the token would depreciate in each round, with its value multiplying by some factor \(f\) where \(\frac{v}{w} < f < 1\), until it eventually reaches a price of zero, and it would still be on net in everyone's interest to participate. Hence, the model is theoretically feasible, but you can see how this model is more complex and more tenuous than the simple "developers as seller" model.

Traditional macroeconomics has a simple equation to try to value a medium of exchange:

\(MV = PT\)

Here:

- \(M\) is the total money supply; that is, the total number of coins
- \(V\) is the "velocity of money"; that is, the number of times that an average coin changes hands every day
- \(P\) is the "price level". This is the price of goods and services in terms of the token; so it is actually the inverse of the currency's price
- \(T\) is the transaction volume: the economic value of transactions per day

The proof for this is a trivial equality: if there are \(M\) coins, and each changes hands \(V\) times per day, then this is \(M \cdot V\) coins' worth of economic value transacted per day.
If this represents \(\$T\) worth of economic value, then the price of each coin is \(\frac{T}{MV}\), so the "price level" is the inverse of this, \(\frac{MV}{T}\).

For easier analysis, we can recast two variables:

- We refer to \(\frac{1}{V}\) with \(H\), the time that a user holds a coin before using it to make a transaction
- We refer to \(\frac{1}{P}\) with \(C\), the price of the currency (think \(C = cost\))

Now, we have:

\(\frac{M}{H} = \frac{T}{C}\)

\(MC = TH\)

The left term is quite simply the market cap. The right term is the economic value transacted per day, multiplied by the amount of time that a user holds a coin before using it to transact.

This is a steady-state model, assuming that the same quantity of users will also be there. In reality, however, the quantity of users may change, and so the price may change. The time that users hold a coin may change, and this may cause the price to change as well.

Let us now look once again at the economic effect on the users. What do users lose by using an application with a built-in appcoin rather than plain old ether (or bitcoin, or USD)? The simplest way to express this is as follows: the "implicit cost" imposed by such a system on users is the cost to the user of holding those coins for that period of time, instead of holding that value in the currency that they would otherwise have preferred to hold.

There are many factors involved in this cost: cognitive costs, exchange costs and spreads, transaction fees, and many smaller items. One particularly significant factor of this implicit cost is expected return. If a user expects the appcoin to only grow in value by 1% per year, while their other available alternatives grow 3% per year, and they hold $20 of the currency for five days, then that is an expected loss of roughly \(\$20 \cdot 2\% \cdot 5 / 365 = \$0.0055\).

One immediate conclusion from this particular insight is that appcoins are very much a multi-equilibrium game.
If the appcoin grows at 2% per year, then the fee drops to $0.0027, and this essentially makes the "de-facto fee" of the application (or at least a large component of it) 2x cheaper, attracting more users and growing its value more. If the appcoin starts falling at 10% per year, however, then the "de-facto fee" grows to roughly $0.036, driving many users away and accelerating its decline.

This leads to increased opportunities for market manipulation, as a manipulator would not just be wasting their money fighting against a single equilibrium, but may in fact successfully nudge a given currency from one equilibrium into another, and profit from successfully "predicting" (ie. causing) this shift. It also means there is a large amount of path dependency, and established brands matter a lot; witness the epic battles over which fork of the bitcoin blockchain can be called Bitcoin for one particular high-profile example.

Another, and perhaps even more important, conclusion is that the market cap of an appcoin depends crucially on the holding time \(H\). If someone creates a very efficient exchange, which allows users to purchase an appcoin in real time and then immediately use it in the application, while allowing sellers to immediately cash out, then the market cap would drop precipitously. If a currency is stable or prospects are looking optimistic, then this may not matter because users actually see no disadvantage from holding the token instead of holding something else (ie. zero "de-facto fee"), but if prospects start to turn sour then such a well-functioning exchange can accelerate its demise.

You might think that exchanges are inherently inefficient, requiring users to create an account, log in, deposit coins, wait for 36 confirmations, trade and log out, but in fact hyper-efficient exchanges are around the corner.
Here is a thread discussing designs for fully autonomous synchronous on-chain transactions, which can convert token A into token B, and possibly even then use token B to do something, within a single transaction. Many other platforms are being developed as well.

What this all serves to show is that relying purely on the medium-of-exchange argument to support a token value, while attractive because of its seeming ability to print money out of thin air, is ultimately quite brittle. Protocol tokens using this model may well be sustained for some time due to irrationality and temporary equilibria where the implicit cost of holding the token is zero, but it is a kind of model which always has an unavoidable risk of collapsing at any time.

So what is the alternative? One simple alternative is the EtherDelta approach, where an application simply collects fees in the interface. One common criticism is: but can't someone fork the interface to take out the fees? A counter-retort is: someone can also fork the interface to replace your protocol token with ETH, BTC, DOGE or whatever else users would prefer to use. One can make a more sophisticated argument that this is hard because the "pirate" version would have to compete with the "official" version for network effect, but one can just as easily create an official fee-paying client that refuses to interact with non-fee-paying clients as well; this kind of network effect-based enforcement is similar to how value-added taxes are typically enforced in Europe and other places. Official-client buyers would not interact with non-official-client sellers, and official-client sellers would not interact with non-official-client buyers, so a large group of users would need to switch to the "pirate" client at the same time to successfully dodge fees.
This is not perfectly robust, but it is certainly as good as the approach of creating a new protocol token.

If developers want to front-load revenue to fund initial development, then they can sell a token, with the property that all fees paid are used to buy back some of the token and burn it; this would make the token backed by the future expected value of upcoming fees spent inside the system. One can transform this design into a more direct utility token by requiring users to use the utility token to pay fees, and having the interface use an exchange to automatically purchase tokens if the user does not have tokens already.

The important thing is that for the token to have a stable value, it is highly beneficial for the token supply to have sinks - places where tokens actually disappear and so the total token quantity decreases over time. This way, there is a more transparent and explicit fee paid by users, instead of the highly variable and difficult to calculate "de-facto fee", and there is also a more transparent and explicit way to figure out what the value of protocol tokens should be.
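To make the two formulas in this post concrete, here is a small Python sketch of the steady-state relation \(MC = TH\) and of the expected-return component of the "de-facto fee". The function names and the daily-volume figures are hypothetical, chosen only for illustration; the $20, five-day and percentage figures are the ones used in the text.

```python
def market_cap(daily_volume_usd, holding_time_days):
    """Steady-state market cap from MC = TH.

    T is the economic value transacted per day and H is the average
    time a user holds a coin before spending it.
    """
    return daily_volume_usd * holding_time_days


def implicit_holding_cost(amount_usd, alt_return, token_return, days_held):
    """Expected loss from holding the token instead of a preferred asset.

    Returns are annual rates written as fractions (0.03 means 3% per year).
    """
    return amount_usd * (alt_return - token_return) * days_held / 365


if __name__ == "__main__":
    # Hypothetical network with $1,000,000 of daily volume.
    print(market_cap(1_000_000, 5))     # users hold coins for five days
    print(market_cap(1_000_000, 0.01))  # near-instant exchange: about 15 minutes

    # The de-facto fee for holding $20 for five days, alternatives at 3%/year.
    for token_return in (0.01, 0.02, -0.10):
        print(token_return, round(implicit_holding_cost(20, 0.03, token_return, 5), 4))
```

With transaction volume held fixed, shrinking the holding time from five days to about fifteen minutes cuts the implied market cap by a factor of 500. That is the sense in which a very efficient exchange makes the valuation brittle.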


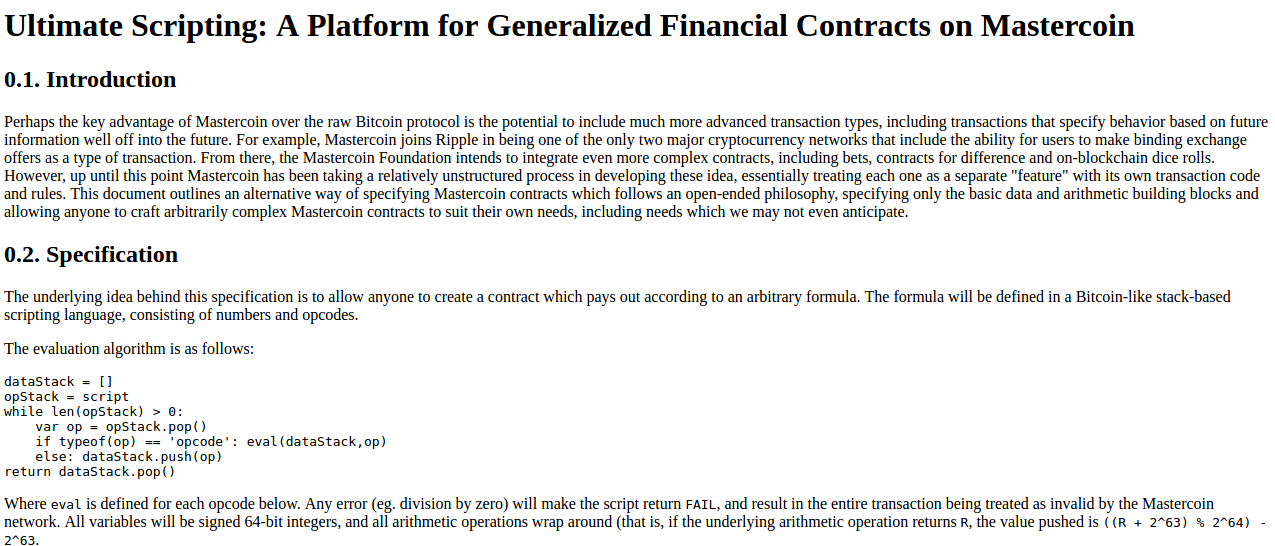

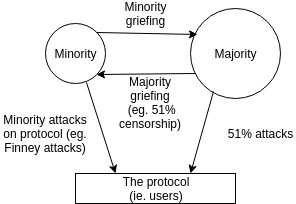

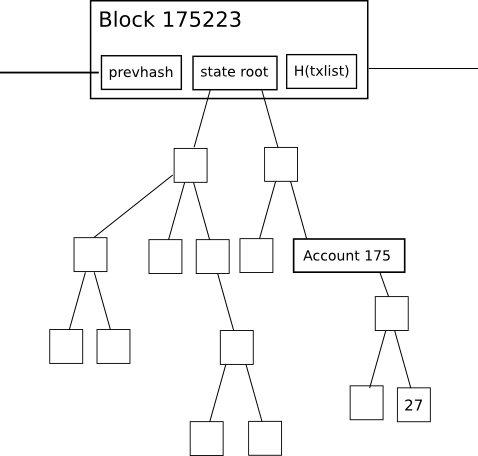

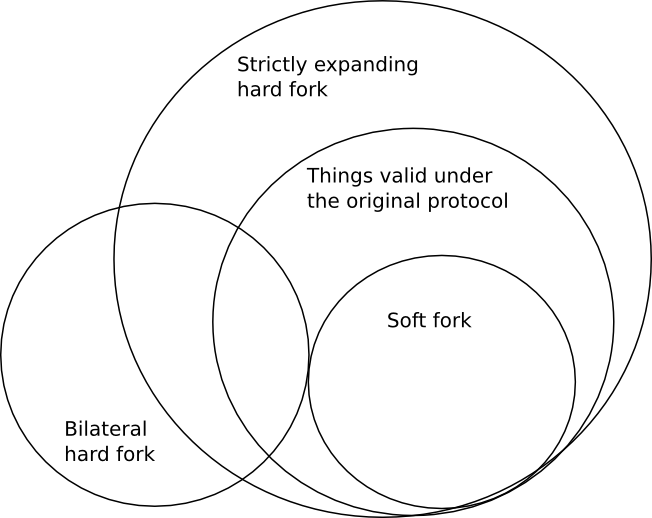

A Prehistory of the Ethereum Protocol

2017 Sep 14

Although the ideas behind the current Ethereum protocol have largely been stable for two years, Ethereum did not emerge all at once, in its current conception and fully formed. Before the blockchain launched, the protocol went through a number of significant evolutions and design decisions. The purpose of this article will be to go through the various evolutions that the protocol went through from start to launch; the enormous amount of work that was done on the implementations of the protocol such as Geth, cppethereum, pyethereum, and EthereumJ, as well as the history of applications and businesses in the Ethereum ecosystem, is deliberately out of scope.

Also out of scope is the history of Casper and sharding research. While we can certainly make more blog posts talking about all of the various ideas Vlad, Gavin, myself and others came up with, and discarded, including "proof of proof of work", hub-and-spoke chains, "hypercubes", shadow chains (arguably a precursor to Plasma), chain fibers, and various iterations of Casper, as well as Vlad's rapidly evolving thoughts on reasoning about incentives of actors in consensus protocols and properties thereof, this would also be far too complex a story to go through in one post, so we will leave it out for now.

Let us first begin with the very earliest version of what would eventually become Ethereum, back when it was not even called Ethereum. When I was visiting Israel in October 2013, I spent quite a bit of time with the Mastercoin team, and even suggested a few features for them.
After spending some time thinking about what they were doing, I sent the team a proposal to make their protocol more generalized and support more types of contracts without adding an equally large and complex set of features:

https://web.archive.org/web/20150627031414/http://vbuterin.com/ultimatescripting.html

Notice that this is very far from the later and more expansive vision of Ethereum: it specialized purely in what Mastercoin was trying to specialize in already, namely two-party contracts where parties A and B would both put in money, and then they would later get money out according to some formula specified in the contract (eg. a bet would say "if X happens then give all the money to A, otherwise give all the money to B"). The scripting language was not Turing-complete.

The Mastercoin team was impressed, but they were not interested in dropping everything they were doing to go in this direction, which I was increasingly convinced was the correct choice. So here comes version 2, circa December:

https://web.archive.org/web/20131219030753/http://vitalik.ca/ethereum.html

Here you can see the results of a substantial rearchitecting, largely a result of a long walk through San Francisco I took in November once I realized that smart contracts could potentially be fully generalized. Instead of the scripting language being simply a way of describing the terms of relations between two parties, contracts were themselves fully-fledged accounts, and had the ability to hold, send and receive assets, and even maintain a permanent storage (back then, the permanent storage was called "memory", and the only temporary "memory" was the 256 registers).
The language switched from being a stack-based machine to being a register-based one of my own volition; I had little argument for this other than that it seemed more sophisticated.

Additionally, notice that there is now a built-in fee mechanism.

At this point, ether literally was gas; after every single computational step, the balance of the contract that a transaction was calling would drop a little bit, and if the contract ran out of money execution would halt. Note that this "receiver pays" mechanism meant that the contract itself had to require the sender to pay the contract a fee, and immediately exit if this fee was not present; the protocol allocated an allowance of 16 free execution steps to allow contracts to reject non-fee-paying transactions.

This was the time when the Ethereum protocol was entirely my own creation. From here on, however, new participants started to join the fold. By far the most prominent on the protocol side was Gavin Wood, who reached out to me in an about.me message in December 2013.

Jeffrey Wilcke, lead developer of the Go client (back then called "ethereal") also reached out and started coding around the same time, though his contributions were much more on the side of client development rather than protocol research.

"Hey Jeremy, glad to see you're interested in Ethereum..."

Gavin's initial contributions were two-fold. First, you might notice that the contract calling model in the initial design was an asynchronous one: although contract A could create an "internal transaction" to contract B ("internal transaction" is Etherscan's lingo; initially they were just called "transactions" and then later "message calls" or "calls"), the internal transaction's execution would not start until the execution of the first transaction completely finished.
This meant that transactions could not use internal transactions as a way of getting information from other contracts; the only way to do that was the EXTRO opcode (kind of like an SLOAD that you could use to read other contracts' storage), and this too was later removed with the support of Gavin and others.

When implementing my initial spec, Gavin naturally implemented internal transactions synchronously without even realizing that the intent was different - that is to say, in Gavin's implementation, when a contract calls another contract, the internal transaction gets executed immediately, and once that execution finishes, the VM returns to the contract that created the internal transaction and proceeds to the next opcode. This approach seemed to both of us to be superior, so we decided to make it part of the spec.

Second, a discussion between him and myself (during a walk in San Francisco, so the exact details will be forever lost to the winds of history and possibly a copy or two in the deep archives of the NSA) led to a re-factoring of the transaction fee model, moving away from the "contract pays" approach to a "sender pays" approach, and also switching to the "gas" architecture. Instead of each individual transaction step immediately taking away a bit of ether, the transaction sender pays for and is allocated some "gas" (roughly, a counter of computational steps), and computational steps draw from this allowance of gas. If a transaction runs out of gas, the gas is still forfeit, but the entire execution is reverted; this seemed like the safest thing to do, as it removed an entire class of "partial execution" attacks that contracts previously had to worry about.
When a transaction execution finishes, the fee for any unused gas is refunded.

Gavin can also be largely credited for the subtle change in vision from viewing Ethereum as a platform for building programmable money, with blockchain-based contracts that can hold digital assets and transfer them according to pre-set rules, to a general-purpose computing platform. This started with subtle changes in emphasis and terminology, and later this influence became stronger with the increasing emphasis on the "Web 3" ensemble, which saw Ethereum as being one piece of a suite of decentralized technologies, the other two being Whisper and Swarm.

There were also changes made around the start of 2014 that were suggested by others. We ended up moving back to a stack-based architecture after the idea was suggested by Andrew Miller and others.

Charles Hoskinson suggested the switch from Bitcoin's SHA256 to the newer SHA3 (or, more accurately, keccak256). Although there was some controversy for a while, discussions with Gavin, Andrew and others led to establishing that the size of values on the stack should be limited to 32 bytes; the other alternative being considered, unlimited-size integers, had the problem that it was too difficult to figure out how much gas to charge for additions, multiplications and other operations.

The initial mining algorithm that we had in mind, back in January 2014, was a contraption called Dagger:

https://github.com/ethereum/wiki/blob/master/Dagger.md

Dagger was named after the "directed acyclic graph" (DAG), the mathematical structure that is used in the algorithm. The idea is that every N blocks, a new DAG would be pseudorandomly generated from a seed, and the bottom layer of the DAG would be a collection of nodes that takes several gigabytes to store. However, generating any individual value in the DAG would require calculating only a few thousand entries.
A "Dagger computation" involved getting some number of values in random positions in this bottom-level dataset and hashing them together. This meant that there was a fast way to make a Dagger calculation - already having the data in memory, and a slow, but not memory intensive way - regenerating each value from the DAG that you need to get from scratch.

The intention of this algorithm was to have the same "memory-hardness" properties as algorithms that were popular at the time, like Scrypt, but still be light-client friendly. Miners would use the fast way, and so their mining would be constrained by memory bandwidth (the theory is that consumer-grade RAM is already very heavily optimized, and so it would be hard to further optimize it with ASICs), but light clients could use the memory-free but slower version for verification. The fast way might take a few microseconds and the slow but memory-free way a few milliseconds, so it would still be very viable for light clients.

From here, the algorithm would change several times over the course of Ethereum development. The next idea that we went through is "adaptive proof of work"; here, the proof of work would involve executing randomly selected Ethereum contracts, and there is a clever reason why this is expected to be ASIC-resistant: if an ASIC was developed, competing miners would have the incentive to create and publish many contracts that that ASIC was not good at executing. There is no such thing as an ASIC for general computation, the story goes, as that is just a CPU, so we could instead use this kind of adversarial incentive mechanism to make a proof of work that essentially was executing general computation.

This fell apart for one simple reason: long-range attacks. An attacker could start a chain from block 1, fill it up with only simple contracts that they can create specialized hardware for, and rapidly overtake the main chain. So...
back to the drawing board.

The next algorithm was something called Random Circuit, described in a Google Doc, proposed by myself and Vlad Zamfir, and analyzed by Matthew Wampler-Doty and others. The idea here was also to simulate general-purpose computation inside a mining algorithm, this time by executing randomly generated circuits. There's no hard proof that something based on these principles could not work, but the computer hardware experts that we reached out to in 2014 tended to be fairly pessimistic about it. Matthew Wampler-Doty himself suggested a proof of work based on SAT solving, but this too was ultimately rejected.

Finally, we came full circle with an algorithm called "Dagger Hashimoto". "Dashimoto", as it was sometimes called for short, borrowed many ideas from Hashimoto, a proof of work algorithm by Thaddeus Dryja that pioneered the notion of "I/O bound proof of work", where the dominant limiting factor in mining speed was not hashes per second, but rather megabytes per second of RAM access. However, it combined this with Dagger's notion of light-client-friendly DAG-generated datasets. After many rounds of tweaking by myself, Matthew, Tim and others, the ideas finally converged into the algorithm we now call Ethash.

By the summer of 2014, the protocol had considerably stabilized, with the major exception of the proof of work algorithm, which would not reach the Ethash phase until around the beginning of 2015, and a semi-formal specification existed in the form of Gavin's yellow paper.

In August 2014, I developed and introduced the uncle mechanism, which allows Ethereum's blockchain to have a shorter block time and higher capacity while mitigating centralization risks.
This was introduced as part of PoC6.

Discussions with the Bitshares team led us to consider adding heaps as a first-class data structure, though we ended up not doing this due to lack of time, and later security audits and DoS attacks would show that it is actually much harder than we had thought at the time to do this safely.

In September, Gavin and I planned out the next two major changes to the protocol design. First, alongside the state tree and transaction tree, every block would also contain a "receipt tree". The receipt tree would include hashes of the logs created by a transaction, along with intermediate state roots. Logs would allow transactions to create "outputs" that are saved in the blockchain, and are accessible to light clients, but that are not accessible to future state calculations. This could be used to allow decentralized applications to easily query for events, such as token transfers, purchases, exchange orders being created and filled, auctions being started, and so forth.

There were other ideas that were considered, like making a Merkle tree out of the entire execution trace of a transaction to allow anything to be proven; logs were chosen because they were a compromise between simplicity and completeness.

The second was the idea of "precompiles", solving the problem of allowing complex cryptographic computations to be usable in the EVM without having to deal with EVM overhead. We had also gone through many more ambitious ideas about "native contracts", where if miners have an optimized implementation of some contracts they could "vote" the gasprice of those contracts down, so contracts that most miners could execute much more quickly would naturally have a lower gas price; however, all of these ideas were rejected because we could not come up with a cryptoeconomically safe way to implement such a thing.
An attacker could always create a contract which executes some trapdoored cryptographic operation, distribute the trapdoor to themselves and their friends to allow them to execute this contract much faster, then vote the gasprice down and use this to DoS the network. Instead we opted for the much less ambitious approach of having a smaller number of precompiles that are simply specified in the protocol, for common operations such as hashes and signature schemes.

Gavin was also a key initial voice in developing the idea of "protocol abstraction" - moving as many parts of the protocol as possible, such as ether balances, transaction signing algorithms, nonces, etc, into the protocol itself as contracts, with a theoretical final goal of reaching a situation where the entire Ethereum protocol could be described as making a function call into a virtual machine that has some pre-initialized state. There was not enough time for these ideas to get into the initial Frontier release, but the principles are expected to start slowly getting integrated through some of the Constantinople changes, the Casper contract and the sharding specification.

This was all implemented in PoC7; after PoC7, the protocol did not really change much, with the exception of minor, though in some cases important, details that would come out through security audits...

In early 2015 came the pre-launch security audits organized by Jutta Steiner and others, which included both software code audits and academic audits. The software audits were primarily on the C++ and Go implementations, which were led by Gavin Wood and Jeffrey Wilcke, respectively, though there was also a smaller audit on my pyethereum implementation. Of the two academic audits, one was performed by Ittay Eyal (of "selfish mining" fame), and the other by Andrew Miller and others from Least Authority. The Eyal audit led to a minor protocol change: the total difficulty of a chain would not include uncles.
The Least Authority audit was more focused on smart contract and gas economics, as well as the Patricia tree. This audit led to several protocol changes. One small one is the use of sha3(addr) and sha3(key) as trie keys instead of the address and key directly; this would make it harder to perform a worst-case attack on the trie.

And a warning that was perhaps a bit too far ahead of its time...

Another significant thing that we discussed was the gas limit voting mechanism. At the time, we were already concerned by perceived lack of progress in the Bitcoin block size debate, and wanted to have a more flexible design in Ethereum that could adjust over time as needed. But the challenge is: what is the optimal limit? My initial thought had been to make a dynamic limit, targeting \(1.5 \cdot\) the long-term exponential moving average of the actual gas usage, so that in the long run on average blocks would be \(\frac{2}{3}\) full. However, Andrew showed that this was exploitable in some ways - specifically, miners who wanted to raise the limit would simply include transactions in their own blocks that consume a very large amount of gas, but take very little time to process, and thereby always create full blocks at no cost to themselves. The security model was thus, at least in the upward direction, equivalent to simply having miners vote on the gas limit.

We did not manage to come up with a gas limit strategy that was less likely to break, and so Andrew's recommended solution was to simply have miners vote on the gas limit explicitly, and have the default strategy for voting be the \(1.5\cdot\) EMA rule. The reasoning was that we were still very far from knowing the right approach for setting maximum gas limits, and the risk of any specific approach failing seemed greater than the risk of miners abusing their voting power.
Hence, we might as well simply let miners vote on the gas limit, and accept the risk that the limit will go too high or too low, in exchange for the benefit of flexibility, and the ability for miners to work together to very quickly adjust the limit upwards or downwards as needed.

After a mini-hackathon between Gavin, Jeff and myself, PoC9 was launched in March, and was intended to be the final proof of concept release. A testnet, Olympic, ran for four months, using the protocol that was intended to be used in the livenet, and Ethereum's long-term plan was established. Vinay Gupta wrote a blog post, "The Ethereum Launch Process", that described the four expected stages of Ethereum livenet development, and gave them their current names: Frontier, Homestead, Metropolis and Serenity.

Olympic ran for four months. In the first two months, many bugs were found in the various implementations, consensus failures happened, among other issues, but around June the network noticeably stabilized. In July a decision was made to make a code-freeze, followed by a release, and on July 30 the release took place.
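
To make the earlier gas limit discussion concrete, here is a minimal Python sketch of the "1.5 times the moving average" rule. It is only an illustration: the smoothing factor `alpha`, the starting average and the function names are assumptions made up for this example, and they are not the parameters or code of any Ethereum client.

```python
MULTIPLIER = 1.5  # the limit targets 1.5x the moving average of gas used


def next_ema(ema: float, gas_used: float, alpha: float) -> float:
    """One exponential-moving-average step (alpha is a hypothetical smoothing factor)."""
    return ema + alpha * (gas_used - ema)


def simulate(fill_fraction: float, blocks: int,
             start_ema: float = 1_000_000.0, alpha: float = 1 / 1024) -> float:
    """Return the gas limit after `blocks` blocks, where every block uses
    `fill_fraction` of whatever the limit is at that moment."""
    ema = start_ema
    for _ in range(blocks):
        limit = MULTIPLIER * ema
        ema = next_ema(ema, fill_fraction * limit, alpha)
    return MULTIPLIER * ema


if __name__ == "__main__":
    # Compare half-full, two-thirds-full and always-full blocks.
    for fill in (0.5, 2 / 3, 1.0):
        print(f"fill={fill:.3f} -> limit after 5000 blocks: {simulate(fill, 5000):,.0f}")
```

Under these assumptions, blocks that are two thirds full leave the limit where it is, because \(\frac{2}{3} \cdot 1.5 = 1\), while blocks that are always full multiply the limit by \(1 + \frac{\alpha}{2}\) every block. A miner who can cheaply fill their own blocks can therefore push the limit up indefinitely, which is why the rule is effectively equivalent to a vote in the upward direction.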