Found 1087 results related to heyuan

-

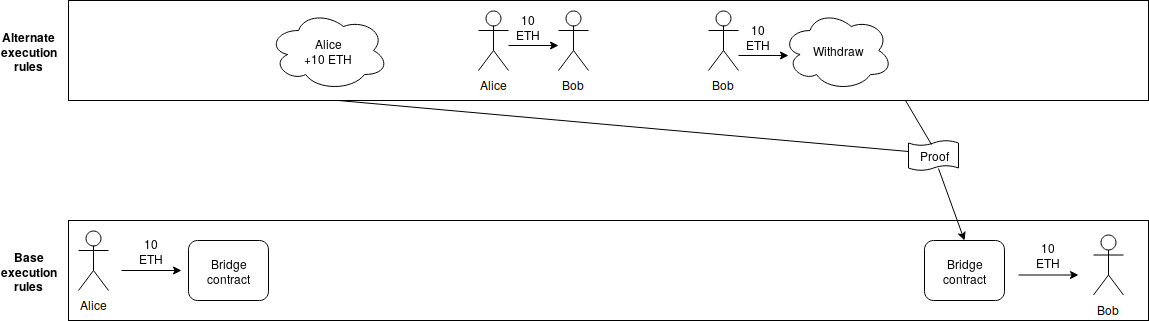

Layer 1 Should Be Innovative in the Short Term but Less in the Long Term

2018 Aug 26

See update 2018-08-29

One of the key tradeoffs in blockchain design is whether to build more functionality into base-layer blockchains themselves ("layer 1"), or to build it into protocols that live on top of the blockchain, and can be created and modified without changing the blockchain itself ("layer 2"). The tradeoff has so far shown itself most in the scaling debates, with block size increases (and sharding) on one side and layer-2 solutions like Plasma and channels on the other, and to some extent blockchain governance, with loss and theft recovery being solvable by either the DAO fork or generalizations thereof such as EIP 867, or by layer-2 solutions such as Reversible Ether (RETH). So which approach is ultimately better? Those who know me well, or have seen me out myself as a dirty centrist, know that I will inevitably say "some of both". However, in the longer term, I do think that as blockchains become more and more mature, layer 1 will necessarily stabilize, and layer 2 will take on more and more of the burden of ongoing innovation and change.

There are several reasons why. The first is that layer 1 solutions require ongoing protocol change to happen at the base protocol layer, base layer protocol change requires governance, and it has still not been shown that, in the long term, highly "activist" blockchain governance can continue without causing ongoing political uncertainty or collapsing into centralization.

To take an example from another sphere, consider Moxie Marlinspike's defense of Signal's centralized and non-federated nature.
A document by a company defending its right to maintain control over an ecosystem it depends on for its key business should of course be viewed with massive grains of salt, but one can still benefit from the arguments. Quoting:

> One of the controversial things we did with Signal early on was to build it as an unfederated service. Nothing about any of the protocols we've developed requires centralization; it's entirely possible to build a federated Signal Protocol-based messenger, but I no longer believe that it is possible to build a competitive federated messenger at all.

And:

> Their retort was "that's dumb, how far would the internet have gotten without interoperable protocols defined by 3rd parties?" I thought about it. We got to the first production version of IP, and have been trying for the past 20 years to switch to a second production version of IP with limited success. We got to HTTP version 1.1 in 1997, and have been stuck there until now. Likewise, SMTP, IRC, DNS, XMPP, are all similarly frozen in time circa the late 1990s. To answer his question, that's how far the internet got. It got to the late 90s. That has taken us pretty far, but it's undeniable that once you federate your protocol, it becomes very difficult to make changes. And right now, at the application level, things that stand still don't fare very well in a world where the ecosystem is moving ... So long as federation means stasis while centralization means movement, federated protocols are going to have trouble existing in a software climate that demands movement as it does today.

At this point in time, and in the medium term going forward, it seems clear that decentralized application platforms, cryptocurrency payments, identity systems, reputation systems, decentralized exchange mechanisms, auctions, privacy solutions, programming languages that support privacy solutions, and most other interesting things that can be done on blockchains are spheres where there will continue to be significant and ongoing innovation. Decentralized application platforms often need continued reductions in confirmation time; payments need fast confirmations, low transaction costs, privacy, and many other built-in features; exchanges are appearing in many shapes and sizes including on-chain automated market makers, frequent batch auctions, combinatorial auctions and more. Hence, "building in" any of these into a base layer blockchain would be a bad idea, as it would create a high level of governance overhead as the platform would have to continually discuss, implement and coordinate newly discovered technical improvements. For the same reason federated messengers have a hard time getting off the ground without re-centralizing, blockchains would also need to choose between adopting activist governance, with the perils that entails, and falling behind newly appearing alternatives.

Even Ethereum's limited level of application-specific functionality, precompiles, has seen some of this effect. Less than a year ago, Ethereum adopted the Byzantium hard fork, including operations to facilitate elliptic curve operations needed for ring signatures, ZK-SNARKs and other applications, using the alt-bn128 curve. Now, Zcash and other blockchains are moving toward BLS-12-381, and Ethereum would need to fork again to catch up.
In part to avoid having similar problems in the future, the Ethereum community is looking to upgrade the EVM to E-WASM, a virtual machine that is sufficiently more efficient that there is far less need to incorporate application-specific precompiles.

But there is also a second argument in favor of layer 2 solutions, one that does not depend on speed of anticipated technical development: sometimes there are inevitable tradeoffs, with no single globally optimal solution. This is less easily visible in Ethereum 1.0-style blockchains, where there are certain models that are reasonably universal (eg. Ethereum's account-based model is one). In sharded blockchains, however, one type of question that does not exist in Ethereum today crops up: how to do cross-shard transactions? That is, suppose that the blockchain state has regions A and B, where few or no nodes are processing both A and B. How does the system handle transactions that affect both A and B?

The current answer involves asynchronous cross-shard communication, which is sufficient for transferring assets and some other applications, but insufficient for many others. Synchronous operations (eg. to solve the train and hotel problem) can be bolted on top with cross-shard yanking, but this requires multiple rounds of cross-shard interaction, leading to significant delays. We can solve these problems with a synchronous execution scheme, but this comes with several tradeoffs:

- The system cannot process more than one transaction for the same account per block
- Transactions must declare in advance what shards and addresses they affect
- There is a high risk of any given transaction failing (and still being required to pay fees!) if the transaction is only accepted in some of the shards that it affects but not others

It seems very likely that a better scheme can be developed, but it would be more complex, and may well have limitations that this scheme does not.
There are known results preventing perfection; at the very least, Amdahl's law puts a hard limit on the ability of some applications and some types of interaction to process more transactions per second through parallelization.

So how do we create an environment where better schemes can be tested and deployed? The answer is an idea that can be credited to Justin Drake: layer 2 execution engines. Users would be able to send assets into a "bridge contract", which would calculate (using some indirect technique such as interactive verification or ZK-SNARKs) state roots using some alternative set of rules for processing the blockchain (think of this as equivalent to layer-two "meta-protocols" like Mastercoin/OMNI and Counterparty on top of Bitcoin, except because of the bridge contract these protocols would be able to handle assets whose "base ledger" is defined on the underlying protocol), and which would process withdrawals if and only if the alternative ruleset generates a withdrawal request. Note that anyone can create a layer 2 execution engine at any time, different users can use different execution engines, and one can switch from one execution engine to any other, or to the base protocol, fairly quickly. The base blockchain no longer has to worry about being an optimal smart contract processing engine; it need only be a data availability layer with execution rules that are quasi-Turing-complete so that any layer 2 bridge contract can be built on top, and that allow basic operations to carry state between shards (in fact, only ETH transfers being fungible across shards is sufficient, but it takes very little effort to also allow cross-shard calls, so we may as well support them), but does not require complexity beyond that. Note also that layer 2 execution engines can have different state management rules than layer 1, eg.
not having storage rent; anything goes, as it's the responsibility of the users of that specific execution engine to make sure that it is sustainable, and if they fail to do so the consequences are contained to within the users of that particular execution engine.

In the long run, layer 1 would not be actively competing on all of these improvements; it would simply provide a stable platform for the layer 2 innovation to happen on top. Does this mean that, say, sharding is a bad idea, and we should keep the blockchain size and state small so that even 10 year old computers can process everyone's transactions? Absolutely not. Even if execution engines are something that gets partially or fully moved to layer 2, consensus on data ordering and availability is still a highly generalizable and necessary function; to see how difficult layer 2 execution engines are without layer 1 scalable data availability consensus, see the difficulties in Plasma research, and its difficulty of naturally extending to fully general purpose blockchains, for an example. And if people want to throw a hundred megabytes per second of data into a system where they need consensus on availability, then we need a hundred megabytes per second of data availability consensus.

Additionally, layer 1 can still improve on reducing latency; if layer 1 is slow, the only strategy for achieving very low latency is state channels, which often have high capital requirements and can be difficult to generalize.
State channels will always beat layer 1 blockchains in latency as state channels require only a single network message, but in those cases where state channels do not work well, layer 1 blockchains can still come closer than they do today.

Hence, the other extreme position, that blockchain base layers can be truly absolutely minimal, and not bother with either a quasi-Turing-complete execution engine or scalability to beyond the capacity of a single node, is also clearly false; there is a certain minimal level of complexity that is required for base layers to be powerful enough for applications to build on top of them, and we have not yet reached that level. Additional complexity is needed, though it should be chosen very carefully to make sure that it is maximally general purpose, and not targeted toward specific applications or technologies that will go out of fashion in two years due to loss of interest or better alternatives.

And even in the future base layers will need to continue to make some upgrades, especially if new technologies (eg. STARKs reaching higher levels of maturity) allow them to achieve stronger properties than they could before, though developers today can take care to make base layer platforms maximally forward-compatible with such potential improvements. So it will continue to be true that a balance between layer 1 and layer 2 improvements is needed to continue improving scalability, privacy and versatility, though layer 2 will continue to take up a larger and larger share of the innovation over time.

Update 2018.08.29: Justin Drake pointed out to me another good reason why some features may be best implemented on layer 1: those features are public goods, and so could not be efficiently or reliably funded with feature-specific use fees, and hence are best paid for by subsidies paid out of issuance or burned transaction fees.
One possible example of this is secure random number generation, and another is generation of zero knowledge proofs for more efficient client validation of correctness of various claims about blockchain contents or state.
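
The Amdahl's law limit mentioned in the discussion of cross-shard tradeoffs can be written out explicitly. As an illustrative reading (the mapping onto shards is an assumption made here, not something the post spells out), let \(p\) be the fraction of an application's work that can run in parallel and \(n\) the number of shards it is spread across. The achievable speedup \(S(n)\) is then

\[
S(n) = \frac{1}{(1 - p) + \frac{p}{n}}, \qquad \lim_{n \to \infty} S(n) = \frac{1}{1 - p}
\]

For example, if \(p = 0.9\), no number of shards can speed that application up by more than \(\frac{1}{1 - 0.9} = 10\) times, because the remaining 10% of the work is inherently sequential.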

-

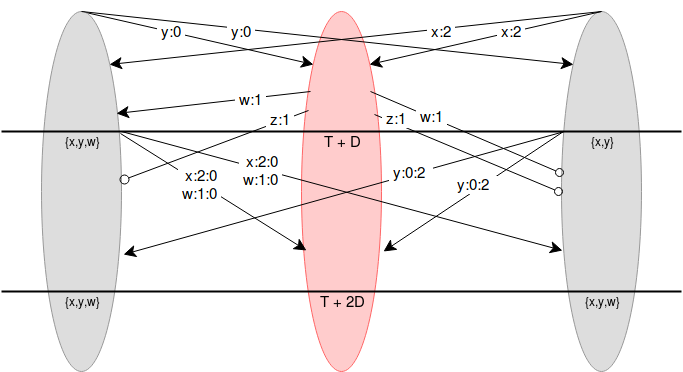

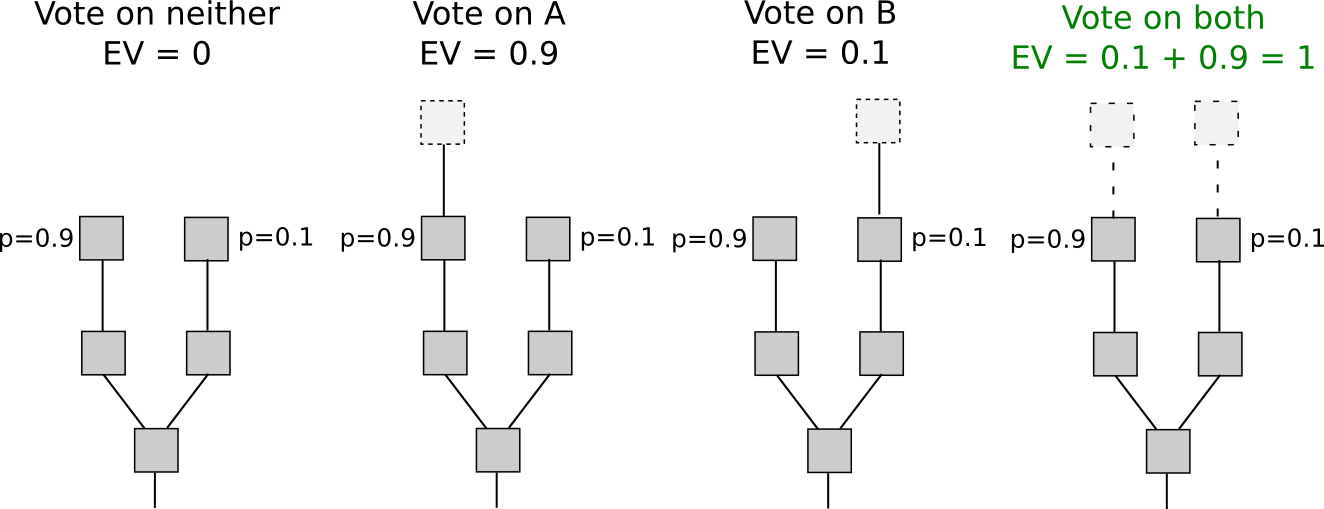

A Guide to 99% Fault Tolerant Consensus

2018 Aug 07

Special thanks to Emin Gun Sirer for review

We've heard for a long time that it's possible to achieve consensus with 50% fault tolerance in a synchronous network where messages broadcasted by any honest node are guaranteed to be received by all other honest nodes within some known time period (if an attacker has more than 50%, they can perform a "51% attack", and there's an analogue of this for any algorithm of this type). We've also heard for a long time that if you want to relax the synchrony assumption, and have an algorithm that's "safe under asynchrony", the maximum achievable fault tolerance drops to 33% (PBFT, Casper FFG, etc all fall into this category). But did you know that if you add even more assumptions (specifically, you require observers, ie. users that are not actively participating in the consensus but care about its output, to also be actively watching the consensus, and not just downloading its output after the fact), you can increase fault tolerance all the way to 99%?

This has in fact been known for a long time; Leslie Lamport's famous 1982 paper "The Byzantine Generals Problem" contains a description of the algorithm. The following will be my attempt to describe and reformulate the algorithm in a simplified form.

Suppose that there are \(N\) consensus-participating nodes, and everyone agrees who these nodes are ahead of time (depending on context, they could have been selected by a trusted party or, if stronger decentralization is desired, by some proof of work or proof of stake scheme). We label these nodes \(0 ... N-1\). Suppose also that there is a known bound \(D\) on network latency plus clock disparity (eg. \(D\) = 8 seconds). Each node has the ability to publish a value at time \(T\) (a malicious node can of course propose values earlier or later than \(T\)).
All nodes wait \((N-1) \cdot D\) seconds, running the following process. Define \(x : i\) as "the value \(x\) signed by node \(i\)", \(x : i : j\) as "the value \(x\) signed by \(i\), and that value and signature together signed by \(j\)", etc. The proposals published in the first stage will be of the form \(v: i\) for some \(v\) and \(i\), containing the signature of the node that proposed it.

If a validator \(i\) receives some message \(v : i[1] : ... : i[k]\), where \(i[1] ... i[k]\) is a list of indices that have (sequentially) signed the message already (just \(v\) by itself would count as \(k=0\), and \(v:i\) as \(k=1\)), then the validator checks that (i) the time is less than \(T + k \cdot D\), and (ii) they have not yet seen a valid message containing \(v\); if both checks pass, they publish \(v : i[1] : ... : i[k] : i\).

At time \(T + (N-1) \cdot D\), nodes stop listening. At this point, there is a guarantee that honest nodes have all "validly seen" the same set of values.

Node 1 (red) is malicious, and nodes 0 and 2 (grey) are honest. At the start, the two honest nodes make their proposals \(y\) and \(x\), and the attacker proposes both \(w\) and \(z\) late. \(w\) reaches node 0 on time but not node 2, and \(z\) reaches neither node on time. At time \(T + D\), nodes 0 and 2 rebroadcast all values they've seen that they have not yet broadcasted, but add their signatures on (\(x\) and \(w\) for node 0, \(y\) for node 2). Both honest nodes saw \(\{x, y, w\}\).

If the problem demands choosing one value, they can use some "choice" function to pick a single value out of the values they have seen (eg. they take the one with the lowest hash). The nodes can then agree on this value.

Now, let's explore why this works.
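
Before the argument itself, here is a minimal sketch of the relay rule just described, which may help make the timing concrete. It is an illustration under simplifying assumptions, not a reference implementation: signatures are modelled as a plain tuple of node indices with no cryptography, and the `Node` class, its `receive` method and the concrete times are hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Optional, Set, Tuple


@dataclass
class Node:
    """An honest consensus participant (hypothetical model, no real crypto)."""
    index: int
    seen: Set[str] = field(default_factory=set)  # values validly seen so far

    def receive(self, value: str, signers: Tuple[int, ...],
                now: float, T: float, D: float) -> Optional[Tuple[int, ...]]:
        """Apply the relay rule to a message v : i[1] : ... : i[k].

        Returns the signer chain to rebroadcast (with this node appended),
        or None if the message is late or the value was already seen.
        """
        k = len(signers)
        on_time = now < T + k * D          # check (i): before T + k*D
        is_new = value not in self.seen    # check (ii): value not yet seen
        if on_time and is_new:
            self.seen.add(value)
            return signers + (self.index,)
        return None


T, D = 0, 8  # D = 8 seconds, as in the text; T = 0 is an arbitrary start time
node0, node2 = Node(0), Node(2)

# w : 1 reaches node 0 at time 5, before T + 1*D = 8, so node 0 relays w : 1 : 0.
relayed = node0.receive("w", (1,), now=5, T=T, D=D)
# The relayed copy has k = 2, so node 2 accepts it any time before T + 2*D = 16.
accepted = node2.receive("w", relayed, now=12, T=T, D=D)
# z : 1 arrives at time 9, after its deadline of 8, and is dropped.
late = node0.receive("z", (1,), now=9, T=T, D=D)
```

Here `relayed` is `(1, 0)`, `accepted` is `(1, 0, 2)` and `late` is `None`: each added signature pushes the deadline back by one more \(D\), which is the property the proof relies on.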
What we need to prove is that if one honest node has seen a particular value (validly), then every other honest node has also seen that value (and if we prove this, then we know that all honest nodes have seen the same set of values, and so if all honest nodes are running the same choice function, they will choose the same value). Suppose that any honest node receives a message \(v : i[1] : ... : i[k]\) that they perceive to be valid (ie. it arrives before time \(T + k \cdot D\)). Suppose \(x\) is the index of a single other honest node. Either \(x\) is part of \(\{i[1] ... i[k]\}\) or it is not.

In the first case (say \(x = i[j]\) for this message), we know that the honest node \(x\) had already broadcasted that message, and they did so in response to a message with \(j-1\) signatures that they received before time \(T + (j-1) \cdot D\), so they broadcast their message at that time, and so the message must have been received by all honest nodes before time \(T + j \cdot D\). In the second case, since the honest node sees the message before time \(T + k \cdot D\), then they will broadcast the message with their signature and guarantee that everyone, including \(x\), will see it before time \(T + (k+1) \cdot D\). Notice that the algorithm uses the act of adding one's own signature as a kind of "bump" on the timeout of a message, and it's this ability that guarantees that if one honest node saw a message on time, they can ensure that everyone else sees the message on time as well, as the definition of "on time" increments by more than network latency with every added signature.

In the case where one node is honest, can we guarantee that passive observers (ie. non-consensus-participating nodes that care about knowing the outcome) can also see the outcome, even if we require them to be watching the process the whole time? With the scheme as written, there's a problem. Suppose that a commander and some subset of \(k\) (malicious) validators produce a message \(v : i[1] : ... : i[k]\), and broadcast it directly to some "victims" just before time \(T + k \cdot D\). The victims see the message as being "on time", but when they rebroadcast it, it only reaches all honest consensus-participating nodes after \(T + k \cdot D\), and so all honest consensus-participating nodes reject it. But we can plug this hole. We require \(D\) to be a bound on two times network latency plus clock disparity. We then put a different timeout on observers: an observer accepts \(v : i[1] : ... : i[k]\) before time \(T + (k - 0.5) \cdot D\). Now, suppose an observer sees a message and accepts it. They will be able to broadcast it to an honest node before time \(T + k \cdot D\), and the honest node will issue the message with their signature attached, which will reach all other observers before time \(T + (k + 0.5) \cdot D\), the timeout for messages with \(k+1\) signatures.

Retrofitting onto other consensus algorithms

The above could theoretically be used as a standalone consensus algorithm, and could even be used to run a proof-of-stake blockchain. The validator set of round \(N+1\) of the consensus could itself be decided during round \(N\) of the consensus (eg. each round of a consensus could also accept "deposit" and "withdraw" transactions, which if accepted and correctly signed would add or remove validators into the next round). The main additional ingredient that would need to be added is a mechanism for deciding who is allowed to propose blocks (eg. each round could have one designated proposer). It could also be modified to be usable as a proof-of-work blockchain, by allowing consensus-participating nodes to "declare themselves" in real time by publishing a proof of work solution on top of their public key at the same time as signing a message with it.

However, the synchrony assumption is very strong, and so we would like to be able to work without it in the case where we don't need more than 33% or 50% fault tolerance. There is a way to accomplish this.
Suppose that we have some other consensus algorithm (eg. PBFT, Casper FFG, chain-based PoS) whose output can be seen by occasionally-online observers (we'll call this the threshold-dependent consensus algorithm, as opposed to the algorithm above, which we'll call the latency-dependent consensus algorithm). Suppose that the threshold-dependent consensus algorithm runs continuously, in a mode where it is constantly "finalizing" new blocks onto a chain (ie. each finalized value points to some previous finalized value as a "parent"; if there's a sequence of pointers \(A \rightarrow ... \rightarrow B\), we'll call \(A\) a descendant of \(B\)).We can retrofit the latency-dependent algorithm onto this structure, giving always-online observers access to a kind of "strong finality" on checkpoints, with fault tolerance ~95% (you can push this arbitrarily close to 100% by adding more validators and requiring the process to take longer).Every time the time reaches some multiple of 4096 seconds, we run the latency-dependent algorithm, choosing 512 random nodes to participate in the algorithm. A valid proposal is any valid chain of values that were finalized by the threshold-dependent algorithm. 
If a node sees some finalized value before time \(T + k \cdot D\) (\(D\) = 8 seconds) with \(k\) signatures, it accepts the chain into its set of known chains and rebroadcasts it with its own signature added; observers use a threshold of \(T + (k - 0.5) \cdot D\) as before.The "choice" function used at the end is simple:Finalized values that are not descendants of what was already agreed to be a finalized value in the previous round are ignored Finalized values that are invalid are ignored To choose between two valid finalized values, pick the one with the lower hash If 5% of validators are honest, there is only a roughly 1 in 1 trillion chance that none of the 512 randomly selected nodes will be honest, and so as long as the network latency plus clock disparity is less than \(\frac\) the above algorithm will work, correctly coordinating nodes on some single finalized value, even if multiple conflicting finalized values are presented because the fault tolerance of the threshold-dependent algorithm is broken.If the fault tolerance of the threshold-dependent consensus algorithm is met (usually 50% or 67% honest), then the threshold-dependent consensus algorithm will either not finalize any new checkpoints, or it will finalize new checkpoints that are compatible with each other (eg. a series of checkpoints where each points to the previous as a parent), so even if network latency exceeds \(\frac\) (or even \(D\)), and as a result nodes participating in the latency-dependent algorithm disagree on which value they accept, the values they accept are still guaranteed to be part of the same chain and so there is no actual disagreement. Once latency recovers back to normal in some future round, the latency-dependent consensus will get back "in sync".If the assumptions of both the threshold-dependent and latency-dependent consensus algorithms are broken at the same time (or in consecutive rounds), then the algorithm can break down. 
For example, suppose in one round, the threshold-dependent consensus finalizes \(Z \rightarrow Y \rightarrow X\) and the latency-dependent consensus disagrees between \(Y\) and \(X\), and in the next round the threshold-dependent consensus finalizes a descendant \(W\) of \(X\) which is not a descendant of \(Y\); in the latency-dependent consensus, the nodes who agreed \(Y\) will not accept \(W\), but the nodes that agreed \(X\) will. However, this is unavoidable; the impossibility of safe-under-asynchrony consensus with more than \(\frac\) fault tolerance is a well known result in Byzantine fault tolerance theory, as is the impossibility of more than \(\frac\) fault tolerance even allowing synchrony assumptions but assuming offline observers.

A Guide to 99% Fault Tolerant Consensus

2018 Aug 07

Special thanks to Emin Gun Sirer for review

We've heard for a long time that it's possible to achieve consensus with 50% fault tolerance in a synchronous network where messages broadcasted by any honest node are guaranteed to be received by all other honest nodes within some known time period (if an attacker has more than 50%, they can perform a "51% attack", and there's an analogue of this for any algorithm of this type). We've also heard for a long time that if you want to relax the synchrony assumption, and have an algorithm that's "safe under asynchrony", the maximum achievable fault tolerance drops to 33% (PBFT, Casper FFG, etc all fall into this category). But did you know that if you add even more assumptions (specifically, you require observers, ie. users that are not actively participating in the consensus but care about its output, to also be actively watching the consensus, and not just downloading its output after the fact), you can increase fault tolerance all the way to 99%?

This has in fact been known for a long time; Leslie Lamport's famous 1982 paper "The Byzantine Generals Problem" contains a description of the algorithm. The following will be my attempt to describe and reformulate the algorithm in a simplified form.

Suppose that there are \(N\) consensus-participating nodes, and everyone agrees who these nodes are ahead of time (depending on context, they could have been selected by a trusted party or, if stronger decentralization is desired, by some proof of work or proof of stake scheme). We label these nodes \(0 \ldots N-1\). Suppose also that there is a known bound \(D\) on network latency plus clock disparity (eg. \(D\) = 8 seconds). Each node has the ability to publish a value at time \(T\) (a malicious node can of course propose values earlier or later than \(T\)).
All nodes wait \((N-1) \cdot D\) seconds, running the following process. Define \(x : i\) as "the value \(x\) signed by node \(i\)", \(x : i : j\) as "the value \(x\) signed by \(i\), and that value and signature together signed by \(j\)", etc. The proposals published in the first stage will be of the form \(v : i\) for some \(v\) and \(i\), containing the signature of the node that proposed it.

If a validator \(i\) receives some message \(v : i[1] : \ldots : i[k]\), where \(i[1] \ldots i[k]\) is a list of indices that have (sequentially) signed the message already (just \(v\) by itself would count as \(k=0\), and \(v : i\) as \(k=1\)), then the validator checks that (i) the time is less than \(T + k \cdot D\), and (ii) they have not yet seen a valid message containing \(v\); if both checks pass, they publish \(v : i[1] : \ldots : i[k] : i\).

At time \(T + (N-1) \cdot D\), nodes stop listening. At this point, there is a guarantee that honest nodes have all "validly seen" the same set of values.

Node 1 (red) is malicious, and nodes 0 and 2 (grey) are honest. At the start, the two honest nodes make their proposals \(y\) and \(x\), and the attacker proposes both \(w\) and \(z\) late. \(w\) reaches node 0 on time but not node 2, and \(z\) reaches neither node on time. At time \(T + D\), nodes 0 and 2 rebroadcast all values they've seen that they have not yet broadcasted, but add their signatures on (\(x\) and \(w\) for node 0, \(y\) for node 2). Both honest nodes saw \(\{x, y, w\}\).

If the problem demands choosing one value, they can use some "choice" function to pick a single value out of the values they have seen (eg. they take the one with the lowest hash). The nodes can then agree on this value.

Now, let's explore why this works.
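Before going through the proof, it may help to see the relay rule as code. The following is a minimal sketch, not the author's implementation: signatures are modelled as a plain list of node indices, `T = 0` and `D = 8` are assumed values, the class name `HonestNode` and its methods are hypothetical, and honest nodes relay immediately on receipt rather than in a batch at \(T + D\). The scenario replays the three-node example above.

```python
T, D = 0, 8  # assumed start time and latency bound, in seconds

class HonestNode:
    """Hypothetical model of an honest validator; signatures are just index lists."""

    def __init__(self, index):
        self.index = index
        self.seen = set()  # values this node has validly seen

    def propose(self, value):
        # A first-stage proposal has the form v : i (k = 1)
        self.seen.add(value)
        return (value, [self.index])

    def on_message(self, value, signers, now):
        k = len(signers)
        on_time = now < T + k * D        # check (i): arrives before T + k*D
        is_new = value not in self.seen  # check (ii): value not yet validly seen
        if not (on_time and is_new):
            return None
        self.seen.add(value)
        # Relay with our own signature appended, which "bumps" the deadline by D
        return (value, signers + [self.index])

node0, node2 = HonestNode(0), HonestNode(2)
msg_y = node0.propose("y")
msg_x = node2.propose("x")

# The honest proposals reach the other honest node well before T + D
node0.on_message(*msg_x, now=3)
node2.on_message(*msg_y, now=3)

# Malicious node 1 sends w to node 0 just in time, but too late to node 2
relay_w = node0.on_message("w", [1], now=7)
rejected = node2.on_message("w", [1], now=9)

# Node 0's relay carries k = 2 signatures, so its deadline is T + 2*D = 16
node2.on_message(*relay_w, now=15)

# z reaches both honest nodes after T + D and is dropped by both
node0.on_message("z", [1], now=9)
node2.on_message("z", [1], now=9)

print(sorted(node0.seen), sorted(node2.seen))
chosen = min(node0.seen)  # stand-in for "take the value with the lowest hash"
```

Both honest nodes end with the same set of values even though the attacker delivered \(w\) to only one of them on time, because the extra signature added by node 0 extends the deadline by one full \(D\).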
What we need to prove is that if one honest node has seen a particular value (validly), then every other honest node has also seen that value (and if we prove this, then we know that all honest nodes have seen the same set of values, and so if all honest nodes are running the same choice function, they will choose the same value). Suppose that any honest node receives a message \(v : i[1] : \ldots : i[k]\) that they perceive to be valid (ie. it arrives before time \(T + k \cdot D\)). Suppose \(x\) is the index of a single other honest node. Either \(x\) is part of \(\{i[1] \ldots i[k]\}\) or it is not.

In the first case (say \(x = i[j]\) for this message), we know that the honest node \(x\) had already broadcasted that message, and they did so in response to a message with \(j-1\) signatures that they received before time \(T + (j-1) \cdot D\), so they broadcast their message at that time, and so the message must have been received by all honest nodes before time \(T + j \cdot D\).

In the second case, since the honest node sees the message before time \(T + k \cdot D\), they will broadcast the message with their signature and guarantee that everyone, including \(x\), will see it before time \(T + (k+1) \cdot D\).

Notice that the algorithm uses the act of adding one's own signature as a kind of "bump" on the timeout of a message, and it's this ability that guarantees that if one honest node saw a message on time, they can ensure that everyone else sees the message on time as well, as the definition of "on time" increments by more than network latency with every added signature.

In the case where one node is honest, can we guarantee that passive observers (ie. non-consensus-participating nodes that care about knowing the outcome) can also see the outcome, even if we require them to be watching the process the whole time? With the scheme as written, there's a problem. Suppose that a commander and some subset of \(k\) (malicious) validators produce a message \(v : i[1] : \ldots : i[k]\), and broadcast it directly to some "victims" just before time \(T + k \cdot D\). The victims see the message as being "on time", but when they rebroadcast it, it only reaches all honest consensus-participating nodes after \(T + k \cdot D\), and so all honest consensus-participating nodes reject it.

But we can plug this hole. We require \(D\) to be a bound on two times network latency plus clock disparity. We then put a different timeout on observers: an observer accepts \(v : i[1] : \ldots : i[k]\) before time \(T + (k - 0.5) \cdot D\). Now, suppose an observer sees a message and accepts it. They will be able to broadcast it to an honest node before time \(T + k \cdot D\), and the honest node will issue the message with their signature attached, which will reach all other observers before time \(T + (k + 0.5) \cdot D\), the timeout for messages with \(k+1\) signatures.

Retrofitting onto other consensus algorithms

The above could theoretically be used as a standalone consensus algorithm, and could even be used to run a proof-of-stake blockchain. The validator set of round \(N+1\) of the consensus could itself be decided during round \(N\) of the consensus (eg. each round of a consensus could also accept "deposit" and "withdraw" transactions, which if accepted and correctly signed would add or remove validators into the next round). The main additional ingredient that would need to be added is a mechanism for deciding who is allowed to propose blocks (eg. each round could have one designated proposer). It could also be modified to be usable as a proof-of-work blockchain, by allowing consensus-participating nodes to "declare themselves" in real time by publishing a proof of work solution on top of their public key at the same time as signing a message with it.

However, the synchrony assumption is very strong, and so we would like to be able to work without it in the case where we don't need more than 33% or 50% fault tolerance. There is a way to accomplish this.
Suppose that we have some other consensus algorithm (eg. PBFT, Casper FFG, chain-based PoS) whose output can be seen by occasionally-online observers (we'll call this the threshold-dependent consensus algorithm, as opposed to the algorithm above, which we'll call the latency-dependent consensus algorithm). Suppose that the threshold-dependent consensus algorithm runs continuously, in a mode where it is constantly "finalizing" new blocks onto a chain (ie. each finalized value points to some previous finalized value as a "parent"; if there's a sequence of pointers \(A \rightarrow \ldots \rightarrow B\), we'll call \(A\) a descendant of \(B\)).

We can retrofit the latency-dependent algorithm onto this structure, giving always-online observers access to a kind of "strong finality" on checkpoints, with fault tolerance ~95% (you can push this arbitrarily close to 100% by adding more validators and requiring the process to take longer).

Whenever the time reaches some multiple of 4096 seconds, we run the latency-dependent algorithm, choosing 512 random nodes to participate in the algorithm. A valid proposal is any valid chain of values that were finalized by the threshold-dependent algorithm.
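As a quick sanity check on these parameters, the sketch below estimates how likely it is that a sample of 512 nodes contains no honest node at all. It assumes that exactly 5% of the validator pool is honest (matching the ~95% fault tolerance figure) and that the 512 nodes are drawn independently; both are simplifying assumptions made for illustration. It also compares the \((N-1) \cdot D\) waiting time of the latency-dependent algorithm, with \(D\) = 8 seconds, against the 4096-second round interval.

```python
honest_fraction = 0.05   # assumption: 5% of validators are honest
sample_size = 512        # nodes sampled per round, as in the text
D = 8                    # latency bound in seconds, as used elsewhere in the article
round_interval = 4096    # seconds between runs, as in the text

# Probability that every one of the sampled nodes is dishonest,
# treating the draws as independent
p_no_honest = (1 - honest_fraction) ** sample_size

# The latency-dependent algorithm listens for (N - 1) * D seconds
listening_time = (sample_size - 1) * D

print(f"P(no honest node in sample) = {p_no_honest:.3e}")
print(f"listening time = {listening_time}s of a {round_interval}s round")
```

Under these assumptions the failure probability is a few parts in a trillion, and the listening period fits just inside one 4096-second round.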
If a node sees some finalized value before time \(T + k \cdot D\) (\(D\) = 8 seconds) with \(k\) signatures, it accepts the chain into its set of known chains and rebroadcasts it with its own signature added; observers use a threshold of \(T + (k - 0.5) \cdot D\) as before.

The "choice" function used at the end is simple:

- Finalized values that are not descendants of what was already agreed to be a finalized value in the previous round are ignored
- Finalized values that are invalid are ignored
- To choose between two valid finalized values, pick the one with the lower hash

If 5% of validators are honest, there is only about a 4 in 1 trillion chance (\(0.95^{512} \approx 3.9 \times 10^{-12}\)) that none of the 512 randomly selected nodes will be honest, and so as long as the network latency plus clock disparity is less than \(\frac{D}{2}\) the above algorithm will work, correctly coordinating nodes on some single finalized value, even if multiple conflicting finalized values are presented because the fault tolerance of the threshold-dependent algorithm is broken.

If the fault tolerance of the threshold-dependent consensus algorithm is met (usually 50% or 67% honest), then the threshold-dependent consensus algorithm will either not finalize any new checkpoints, or it will finalize new checkpoints that are compatible with each other (eg. a series of checkpoints where each points to the previous as a parent), so even if network latency exceeds \(\frac{D}{2}\) (or even \(D\)), and as a result nodes participating in the latency-dependent algorithm disagree on which value they accept, the values they accept are still guaranteed to be part of the same chain and so there is no actual disagreement. Once latency recovers back to normal in some future round, the latency-dependent consensus will get back "in sync".

If the assumptions of both the threshold-dependent and latency-dependent consensus algorithms are broken at the same time (or in consecutive rounds), then the algorithm can break down. For example, suppose in one round, the threshold-dependent consensus finalizes \(Z \rightarrow Y \rightarrow X\) and the latency-dependent consensus disagrees between \(Y\) and \(X\), and in the next round the threshold-dependent consensus finalizes a descendant \(W\) of \(X\) which is not a descendant of \(Y\); in the latency-dependent consensus, the nodes who agreed \(Y\) will not accept \(W\), but the nodes that agreed \(X\) will. However, this is unavoidable; the impossibility of safe-under-asynchrony consensus with more than \(\frac{1}{3}\) fault tolerance is a well known result in Byzantine fault tolerance theory, as is the impossibility of more than \(\frac{1}{2}\) fault tolerance even allowing synchrony assumptions but assuming offline observers.

-

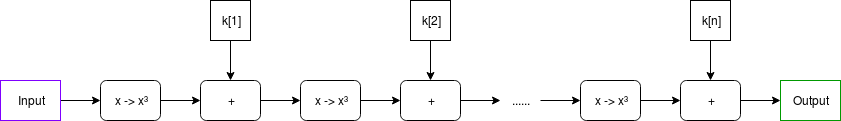

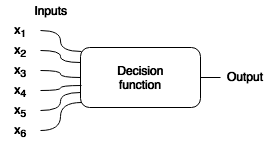

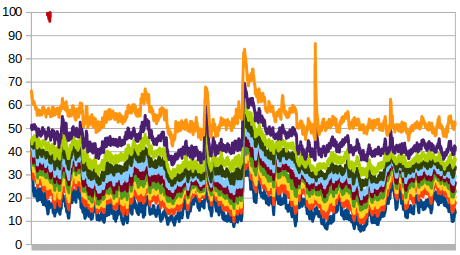

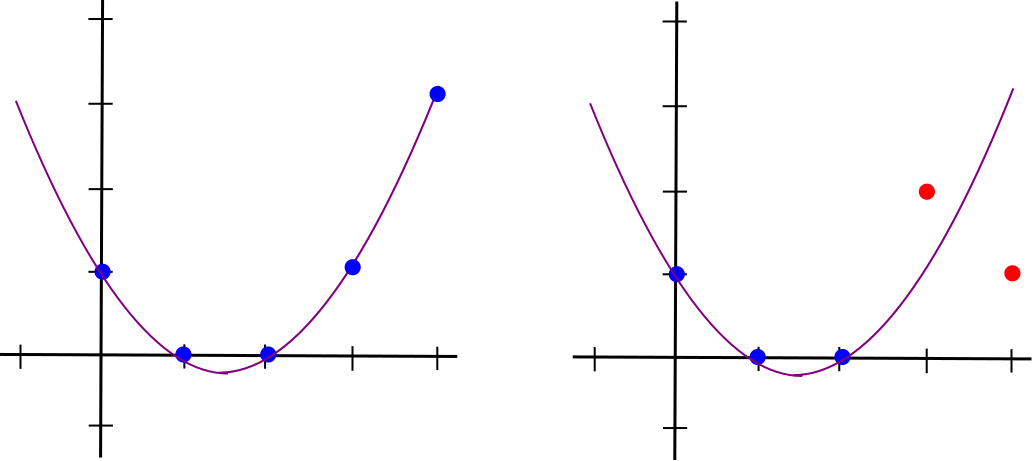

STARKs, Part 3: Into the Weeds

2018 Jul 21

Special thanks to Eli ben Sasson for his kind assistance, as usual. Special thanks to Chih-Cheng Liang and Justin Drake for review, and to Ben Fisch for suggesting the reverse MIMC technique for a VDF.

Trigger warning: math and lots of python

As a followup to Part 1 and Part 2 of this series, this post will cover what it looks like to actually implement a STARK, complete with an implementation in python. STARKs ("Scalable Transparent ARgument of Knowledge") are a technique for creating a proof that \(f(x)=y\) where \(f\) may potentially take a very long time to calculate, but where the proof can be verified very quickly. A STARK is "doubly scalable": for a computation with \(t\) steps, it takes roughly \(O(t \cdot \log(t))\) steps to produce a proof, which is likely optimal, and it takes ~\(O(\log^2(t))\) steps to verify, which for even moderately large values of \(t\) is much faster than the original computation. STARKs can also have a privacy-preserving "zero knowledge" property, though the use case we will apply them to here, making verifiable delay functions, does not require this property, so we do not need to worry about it.

First, some disclaimers:

- This code has not been thoroughly audited; soundness in production use cases is not guaranteed
- This code is very suboptimal (it's written in Python, what did you expect)
- STARKs "in real life" (ie. as implemented in Eli and co's production implementations) tend to use binary fields and not prime fields for application-specific efficiency reasons; however, they do stress in their writings that the prime field-based approach to STARKs described here is legitimate and can be used
- There is no "one true way" to do a STARK. It's a broad category of cryptographic and mathematical constructs, with different setups optimal for different applications and constant ongoing research to reduce prover and verifier complexity and improve soundness.
- This article absolutely expects you to know how modular arithmetic and prime fields work, and be comfortable with the concepts of polynomials, interpolation and evaluation. If you don't, go back to Part 2, and also this earlier post on quadratic arithmetic programs

Now, let's get to it.

MIMC

Here is the function we'll be doing a STARK of:

```python
import time

# Prime field modulus used for all calculations (explained later in this section)
modulus = 2**256 - 351 * 2**32 + 1

def mimc(inp, steps, round_constants):
    start_time = time.time()
    for i in range(steps-1):
        # One round: cube the state and add the next round constant
        inp = (inp**3 + round_constants[i % len(round_constants)]) % modulus
    print("MIMC computed in %.4f sec" % (time.time() - start_time))
    return inp
```

We choose MIMC (see paper) as the example because it is both (i) simple to understand and (ii) interesting enough to be useful in real life. The function can be viewed visually as follows:

Note: in many discussions of MIMC, you will typically see XOR used instead of +; this is because MIMC is typically done over binary fields, where addition is XOR; here we are doing it over prime fields.

In our example, the round constants will be a relatively small list (eg. 64 items) that gets cycled through over and over again (that is, after k[64] it loops back to using k[1]).

MIMC with a very large number of rounds, as we're doing here, is useful as a verifiable delay function - a function which is difficult to compute, and particularly non-parallelizable to compute, but relatively easy to verify. MIMC by itself achieves this property to some extent because MIMC can be computed "backward" (recovering the "input" from its corresponding "output"), but computing it backward takes about 100 times longer than the forward direction (and neither direction can be significantly sped up by parallelization).
So you can think of computing the function in the backward direction as being the act of "computing" the non-parallelizable proof of work, and computing the function in the forward direction as being the process of "verifying" it.

\(x \rightarrow x^{(2p-1)/3}\) gives the inverse of \(x \rightarrow x^3\); this is true because of Fermat's Little Theorem, a theorem that despite its supposed littleness is arguably much more important to mathematics than Fermat's more famous "Last Theorem". Concretely, \((x^3)^{(2p-1)/3} = x^{2p-1} = x \cdot (x^{p-1})^2 = x\), since \(x^{p-1} = 1\) for any nonzero \(x\).

What we will try to achieve here is to make verification much more efficient by using a STARK - instead of the verifier having to run MIMC in the forward direction themselves, the prover, after completing the computation in the "backward direction", would compute a STARK of the computation in the "forward direction", and the verifier would simply verify the STARK. The hope is that the overhead of computing a STARK can be less than the difference in speed running MIMC forwards relative to backwards, so a prover's time would still be dominated by the initial "backward" computation, and not the (highly parallelizable) STARK computation. Verification of a STARK can be relatively fast (in our python implementation, ~0.05-0.3 seconds), no matter how long the original computation is.

All calculations are done modulo \(2^{256} - 351 \cdot 2^{32} + 1\); we are using this prime field modulus because it is the largest prime below \(2^{256}\) whose multiplicative group contains an order \(2^{32}\) subgroup (that is, there's a number \(g\) such that successive powers of \(g\) modulo this prime loop around back to \(1\) after exactly \(2^{32}\) cycles), and which is of the form \(6k+5\).
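The two properties of the modulus, and the forward/backward relationship, can be checked directly. The sketch below is illustrative only: the names `mimc_forward` and `mimc_backward` and the particular `round_constants` are assumptions made for this example, the timing code is omitted, and the loop bounds mirror the `range(steps-1)` convention of the `mimc` function above.

```python
modulus = 2**256 - 351 * 2**32 + 1

# Quick (Fermat) primality test, the same kind of check the article's code uses
assert pow(2, modulus, modulus) == 2
# An order-2**32 subgroup requires 2**32 to divide modulus - 1
assert (modulus - 1) % 2**32 == 0
# The modulus is of the form 6k + 5, so (2p - 1) / 3 is an integer
assert modulus % 6 == 5 and (2 * modulus - 1) % 3 == 0

cube_root_exponent = (2 * modulus - 1) // 3

# Arbitrary constants chosen for illustration (64 items, cycled through)
round_constants = [(i**7) ^ 42 for i in range(64)]

def mimc_forward(inp, steps, round_constants):
    # Cheap direction: one cubing and one addition per round
    for i in range(steps - 1):
        inp = (inp**3 + round_constants[i % len(round_constants)]) % modulus
    return inp

def mimc_backward(out, steps, round_constants):
    # Expensive direction: undo each round with a full modular exponentiation
    for i in range(steps - 2, -1, -1):
        out = pow(out - round_constants[i % len(round_constants)],
                  cube_root_exponent, modulus)
    return out

# A prover would run the slow backward direction...
proof_of_delay = mimc_backward(3, 64, round_constants)
# ...and anyone can check the result with the fast forward direction
recovered = mimc_forward(proof_of_delay, 64, round_constants)
```

Each backward round needs an exponentiation with a roughly 256-bit exponent, while each forward round needs only two multiplications, which is where the large speed gap between the two directions comes from.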
The first property (the order-\(2^{32}\) subgroup) is necessary to make sure that our efficient versions of the FFT and FRI algorithms can work, and the second (the \(6k+5\) form) ensures that MIMC actually can be computed "backwards" (see the use of \(x \rightarrow x^{(2p-1)/3}\) above).

Prime field operations

We start off by building a convenience class that does prime field operations, as well as operations with polynomials over prime fields. First some trivial bits:

```python
class PrimeField():
    def __init__(self, modulus):
        # Quick primality test
        assert pow(2, modulus, modulus) == 2
        self.modulus = modulus

    def add(self, x, y):
        return (x+y) % self.modulus

    def sub(self, x, y):
        return (x-y) % self.modulus

    def mul(self, x, y):
        return (x*y) % self.modulus
```

And the Extended Euclidean Algorithm for computing modular inverses (the equivalent of computing \(\frac{1}{x}\) in a prime field):

```python
    # Modular inverse using the extended Euclidean algorithm
    def inv(self, a):
        if a == 0:
            return 0
        lm, hm = 1, 0
        low, high = a % self.modulus, self.modulus
        while low > 1:
            r = high//low
            nm, new = hm-lm*r, high-low*r
            lm, low, hm, high = nm, new, lm, low
        return lm % self.modulus
```

The above algorithm is relatively expensive; fortunately, for the special case where we need to do many modular inverses, there's a simple mathematical trick, called Montgomery batch inversion, that allows us to compute many inverses using only a single modular inversion:

Using Montgomery batch inversion to compute modular inverses. Inputs purple, outputs green, multiplication gates black; the red square is the only modular inversion.

The code below implements this algorithm, with some slightly ugly special case logic so that if there are zeroes in the set of what we are inverting, it sets their inverse to 0 and moves along.

```python
    def multi_inv(self, values):
        # partials[i] is the product of the first i nonzero-adjusted values
        partials = [1]
        for i in range(len(values)):
            partials.append(self.mul(partials[-1], values[i] or 1))
        # The only modular inversion: invert the product of everything
        inv = self.inv(partials[-1])
        outputs = [0] * len(values)
        # Walk backwards, peeling one value off the running inverse each step
        for i in range(len(values), 0, -1):
            outputs[i-1] = self.mul(partials[i-1], inv) if values[i-1] else 0
            inv = self.mul(inv, values[i-1] or 1)
        return outputs
```

This batch inverse algorithm will prove important later on, when we start dealing with dividing sets of evaluations of polynomials.

Now we move on to some polynomial operations. We treat a polynomial as an array, where element \(i\) is the \(i\)th degree term (eg. \(x^{3} + 2x + 1\) becomes [1, 2, 0, 1]). Here's the operation of evaluating a polynomial at one point:

```python
    # Evaluate a polynomial at a point
    def eval_poly_at(self, p, x):
        y = 0
        power_of_x = 1
        for i, p_coeff in enumerate(p):
            y += power_of_x * p_coeff
            power_of_x = (power_of_x * x) % self.modulus
        return y % self.modulus
```

Challenge

What is the output of `f.eval_poly_at([4, 5, 6], 2)` if the modulus is 31?

Answer: \(6 \cdot 2^{2} + 5 \cdot 2 + 4 = 38, 38 \bmod 31 = 7\).

There is also code for adding, subtracting, multiplying and dividing polynomials; this is textbook long addition/subtraction/multiplication/division.
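For readers who want to see what "textbook" means here, the following is a minimal sketch of multiplication and long division on coefficient lists (lowest-degree term first). These are standalone functions written for this illustration, taking the modulus as an explicit argument; they are not the methods of the article's `PrimeField` class, and they assume the modulus is prime so that Fermat's Little Theorem can be used to invert the leading coefficient.

```python
def mul_polys(a, b, modulus):
    # Schoolbook multiplication: every term of a times every term of b
    out = [0] * (len(a) + len(b) - 1)
    for i, a_coeff in enumerate(a):
        for j, b_coeff in enumerate(b):
            out[i + j] = (out[i + j] + a_coeff * b_coeff) % modulus
    return out

def div_polys(a, b, modulus):
    # Schoolbook long division; returns (quotient, remainder)
    rem = [x % modulus for x in a]
    b = [x % modulus for x in b]
    assert b[-1] != 0, "divisor must have a nonzero leading coefficient"
    inv_lead = pow(b[-1], modulus - 2, modulus)  # valid for prime modulus
    quotient = [0] * max(len(rem) - len(b) + 1, 0)
    # Cancel the highest remaining term of the dividend at each step
    for shift in range(len(rem) - len(b), -1, -1):
        q = rem[shift + len(b) - 1] * inv_lead % modulus
        quotient[shift] = q
        for j, b_coeff in enumerate(b):
            rem[shift + j] = (rem[shift + j] - q * b_coeff) % modulus
    return quotient, rem[:len(b) - 1]

# (x + 1) * (x + 2) = x^2 + 3x + 2, and dividing by (x + 1) gives (x + 2) back
product = mul_polys([1, 1], [2, 1], 31)
exact_q, exact_r = div_polys(product, [1, 1], 31)

# x^3 + 2x + 1 divided by (x + 1) leaves a nonzero remainder
q, r = div_polys([1, 2, 0, 1], [1, 1], 31)
```

Dividing by a linear factor such as \((x - x_i)\) with zero remainder is exactly the operation that interpolation relies on when it strips one root out of a polynomial that vanishes at every \(x\) coordinate.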
The one non-trivial thing is Lagrange interpolation, which takes as input a set of x and y coordinates, and returns the minimal polynomial that passes through all of those points (you can think of it as being the inverse of polynomial evaluation):

```python
    # Build a polynomial that returns 0 at all specified xs
    def zpoly(self, xs):
        root = [1]
        for x in xs:
            root.insert(0, 0)
            for j in range(len(root)-1):
                root[j] -= root[j+1] * x
        return [x % self.modulus for x in root]

    def lagrange_interp(self, xs, ys):
        # Generate master numerator polynomial, eg. (x - x1) * (x - x2) * ... * (x - xn)
        root = self.zpoly(xs)

        # Generate per-value numerator polynomials, eg. for x=x2,
        # (x - x1) * (x - x3) * ... * (x - xn), by dividing the master
        # polynomial back by each x coordinate
        nums = [self.div_polys(root, [-x, 1]) for x in xs]

        # Generate denominators by evaluating numerator polys at each x
        denoms = [self.eval_poly_at(nums[i], xs[i]) for i in range(len(xs))]
        invdenoms = self.multi_inv(denoms)

        # Generate output polynomial, which is the sum of the per-value numerator
        # polynomials rescaled to have the right y values
        b = [0 for y in ys]
        for i in range(len(xs)):
            yslice = self.mul(ys[i], invdenoms[i])
            for j in range(len(ys)):
                if nums[i][j] and ys[i]:
                    b[j] += nums[i][j] * yslice
        return [x % self.modulus for x in b]
```

See the "M of N" section of this article for a description of the math. Note that we also have special-case methods `lagrange_interp_4` and `lagrange_interp_2` to speed up the very frequent operations of Lagrange interpolation of degree \(< 2\) and degree \(< 4\) polynomials.

Fast Fourier Transforms

If you read the above algorithms carefully, you might notice that Lagrange interpolation and multi-point evaluation (that is, evaluating a degree \(< N\) polynomial at \(N\) points) both take quadratic time to execute, so for example doing a Lagrange interpolation of one thousand points takes a few million steps to execute, and a Lagrange interpolation of one million points takes a few trillion.
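To make the "inverse of evaluation" relationship concrete, here is a deliberately naive, self-contained sketch. The function names and the small modulus 31 are choices made for this example, and the interpolation rebuilds every numerator polynomial from scratch, so it is slower still than the quadratic-time method in the article's class; it is meant only to show the round trip.

```python
def eval_poly_at(p, x, modulus):
    # Evaluate coefficient list p (lowest degree first) at x
    y, power_of_x = 0, 1
    for coeff in p:
        y = (y + coeff * power_of_x) % modulus
        power_of_x = (power_of_x * x) % modulus
    return y

def lagrange_interp_naive(xs, ys, modulus):
    n = len(xs)
    result = [0] * n
    for i in range(n):
        # Build prod_{j != i} (x - xs[j]) and its value at xs[i]
        num, denom = [1], 1
        for j in range(n):
            if j == i:
                continue
            num = [0] + num  # multiply by x ...
            for k in range(len(num) - 1):
                # ... then subtract xs[j] times the old polynomial
                num[k] = (num[k] - xs[j] * num[k + 1]) % modulus
            denom = denom * (xs[i] - xs[j]) % modulus
        # Rescale so this basis polynomial equals ys[i] at xs[i]
        scale = ys[i] * pow(denom, modulus - 2, modulus) % modulus
        for k in range(n):
            result[k] = (result[k] + scale * num[k]) % modulus
    return result

modulus = 31
poly = [4, 5, 6]                      # 6x^2 + 5x + 4
xs = [1, 2, 3, 4]
ys = [eval_poly_at(poly, x, modulus) for x in xs]
recovered = lagrange_interp_naive(xs, ys, modulus)
```

Four points pin down a unique polynomial of degree \(< 4\), so the interpolation returns the original coefficients padded with a zero. Every output coefficient here depends on every input point, which is the source of the quadratic (or worse) running time.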
This is an unacceptably high level of inefficiency, so we will use a more efficient algorithm, the Fast Fourier Transform.

The FFT only takes \(O(n \cdot \log(n))\) time (ie. ~10,000 steps for 1,000 points, ~20 million steps for 1 million points), though it is more restricted in scope; the x coordinates must be a complete set of roots of unity of some order \(N = 2^{k}\). That is, if there are \(N\) points, the x coordinates must be successive powers \(1, p, p^{2}, p^{3}\)... of some \(p\) where \(p^{N} = 1\). The algorithm can, surprisingly enough, be used for multi-point evaluation or interpolation, with one small parameter tweak.

Challenge

Find a 16th root of unity mod 337 that is not an 8th root of unity.

Answer: 59, 146, 30, 297, 278, 191, 307, 40

You could have gotten this list by doing something like `[print(x) for x in range(337) if pow(x, 16, 337) == 1 and pow(x, 8, 337) != 1]`, though there is a smarter way that works for much larger moduluses: first, identify a single quadratic non-residue mod 337 (that is, a value that is not a perfect square), by looking for a value `x` such that `pow(x, 336 // 2, 337) != 1` (these are easy to find; one answer is 5), and then take the (336 / 16)'th power of it.

Here's the algorithm (in a slightly simplified form; see code here for something slightly more optimized):

```python
def fft(vals, modulus, root_of_unity):
    if len(vals) == 1:
        return vals
    # Recurse on the even-indexed and odd-indexed halves
    L = fft(vals[::2], modulus, pow(root_of_unity, 2, modulus))
    R = fft(vals[1::2], modulus, pow(root_of_unity, 2, modulus))
    o = [0 for i in vals]
    # Glue the two halves back together
    for i, (x, y) in enumerate(zip(L, R)):
        y_times_root = y*pow(root_of_unity, i, modulus)
        o[i] = (x+y_times_root) % modulus
        o[i+len(L)] = (x-y_times_root) % modulus
    return o

def inv_fft(vals, modulus, root_of_unity):
    f = PrimeField(modulus)
    # Inverse FFT
    invlen = f.inv(len(vals))
    return [(x*invlen) % modulus for x in
            fft(vals, modulus, f.inv(root_of_unity))]
```

You can try running it on a few inputs yourself and check that it gives results that, when you use `eval_poly_at` on them, give you the answers you expect to get. For example:

```python
>>> fft.fft([3,1,4,1,5,9,2,6], 337, 85, inv=True)
[46, 169, 29, 149, 126, 262, 140, 93]
>>> f = poly_utils.PrimeField(337)
>>> [f.eval_poly_at([46, 169, 29, 149, 126, 262, 140, 93], f.exp(85, i)) for i in range(8)]
[3, 1, 4, 1, 5, 9, 2, 6]
```

A Fourier transform takes as input `[x[0] .... x[n-1]]`, and its goal is to output `x[0] + x[1] + ... + x[n-1]` as the first element, `x[0] + x[1] * w + ... + x[n-1] * w**(n-1)` as the second element, etc etc; a fast Fourier transform accomplishes this by splitting the data in half, doing an FFT on both halves, and then gluing the result back together.

A diagram of how information flows through the FFT computation. Notice how the FFT consists of a "gluing" step followed by two copies of the FFT on two halves of the data, and so on recursively until you're down to one element.

I recommend this for more intuition on how or why the FFT works and polynomial math in general, and this thread for some more specifics on DFT vs FFT, though be warned that most literature on Fourier transforms talks about Fourier transforms over real and complex numbers, not prime fields. If you find this too hard and don't want to understand it, just treat it as weird spooky voodoo that just works because you ran the code a few times and verified that it works, and you'll be fine too.

Thank Goodness It's FRI-day (that's "Fast Reed-Solomon Interactive Oracle Proofs of Proximity")

Reminder: now may be a good time to review and re-read Part 2

Now, we'll get into the code for making a low-degree proof. To review, a low-degree proof is a (probabilistic) proof that at least some high percentage (eg. 80%) of a given set of values represent the evaluations of some specific polynomial whose degree is much lower than the number of values given. Intuitively, just think of it as a proof that "some Merkle root that we claim represents a polynomial actually does represent a polynomial, possibly with a few errors".
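To make "the evaluations of a low-degree polynomial" concrete, the sketch below evaluates a degree \(< 4\) polynomial at 16 points and then interpolates the values back. It is a self-contained illustration, not the article's code: it reuses the simplified FFT shown earlier, computes inverses with Fermat's Little Theorem instead of the `PrimeField` class, and uses the modulus 337 with the 16th root of unity 59 from the challenge above. The helper `degree` is a hypothetical name introduced here.

```python
MODULUS = 337
ROOT = 59  # a 16th root of unity mod 337 that is not an 8th root of unity

def fft(vals, modulus, root_of_unity):
    if len(vals) == 1:
        return vals
    L = fft(vals[::2], modulus, pow(root_of_unity, 2, modulus))
    R = fft(vals[1::2], modulus, pow(root_of_unity, 2, modulus))
    o = [0 for _ in vals]
    for i, (x, y) in enumerate(zip(L, R)):
        y_times_root = y * pow(root_of_unity, i, modulus)
        o[i] = (x + y_times_root) % modulus
        o[i + len(L)] = (x - y_times_root) % modulus
    return o

def inv_fft(vals, modulus, root_of_unity):
    # Inverses via Fermat's Little Theorem (modulus assumed prime)
    inv_len = pow(len(vals), modulus - 2, modulus)
    inv_root = pow(root_of_unity, modulus - 2, modulus)
    return [(x * inv_len) % modulus for x in fft(vals, modulus, inv_root)]

def degree(coeffs):
    # Index of the highest nonzero coefficient, or -1 for the zero polynomial
    for i in range(len(coeffs) - 1, -1, -1):
        if coeffs[i] != 0:
            return i
    return -1

# A degree-3 polynomial, padded with zeroes to 16 coefficients
coeffs = [3, 1, 4, 1] + [0] * 12
evaluations = fft(coeffs, MODULUS, ROOT)   # 16 values at powers of ROOT

# Honest values interpolate back to a low-degree polynomial
honest_degree = degree(inv_fft(evaluations, MODULUS, ROOT))

# Changing a single value destroys the low-degree structure
corrupted = evaluations[:]
corrupted[5] = (corrupted[5] + 1) % MODULUS
corrupted_degree = degree(inv_fft(corrupted, MODULUS, ROOT))

print(honest_degree, corrupted_degree)
```

A verifier who could afford to read all the values would simply do this interpolation and check the degree; the point of a low-degree proof is to reach nearly the same conclusion while reading only a small number of the values.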
As input, we have:

- A set of values that we claim are the evaluation of a low-degree polynomial
- A root of unity; the x coordinates at which the polynomial is evaluated are successive powers of this root of unity
- A value \(N\) such that we are proving the degree of the polynomial is strictly less than \(N\)
- The modulus

Our approach is a recursive one, with two cases. First, if the degree is low enough, we just provide the entire list of values as a proof; this is the "base case". Verification of the base case is trivial: do an FFT or Lagrange interpolation or whatever else to interpolate the polynomial representing those values, and verify that its degree is \(< N\). Otherwise, if the degree is higher than some set minimum, we do the vertical-and-diagonal trick described at the bottom of Part 2.

We start off by putting the values into a Merkle tree and using the Merkle root to select a pseudo-random x coordinate (`special_x`). We then calculate the "column":

```python
# Calculate the set of x coordinates
xs = get_power_cycle(root_of_unity, modulus)

column = []
for i in range(len(xs)//4):
    # Interpolate the degree < 4 polynomial through the four points
    # whose x coordinates share the same x**4
    x_poly = f.lagrange_interp_4(
        [xs[i+len(xs)*j//4] for j in range(4)],
        [values[i+len(values)*j//4] for j in range(4)],
    )
    column.append(f.eval_poly_at(x_poly, special_x))
```

This packs a lot into a few lines of code. The broad idea is to re-interpret the polynomial \(P(x)\) as a polynomial \(Q(x, y)\), where \(P(x) = Q(x, x^4)\). If \(P\) has degree \(< N\), then \(P'(y) = Q(special\_x, y)\) will have degree \(< \frac{N}{4}\). Since we don't want to take the effort to actually compute \(Q\) in coefficient form (that would take a still-relatively-nasty-and-expensive FFT!), we instead use another trick. For any given value of \(x^{4}\), there are 4 corresponding values of \(x\): \(x\), \(modulus - x\), and \(x\) multiplied by the two modular square roots of \(-1\).
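The claim about four values of \(x\) sharing each \(x^{4}\) can be checked on a toy example. This sketch assumes the small field mod 337 with the 16th root of unity 59 (values taken from the FFT challenge earlier in the article, not from the STARK itself), and it uses the same index pattern `i + len(xs)*j//4` as the column code above.

```python
modulus = 337
root_of_unity = 59  # generates 16 successive powers before looping back to 1

xs = [pow(root_of_unity, i, modulus) for i in range(16)]

# A quarter-turn around the cycle is a square root of -1
sqrt_minus_one = pow(root_of_unity, len(xs) // 4, modulus)
assert sqrt_minus_one**2 % modulus == modulus - 1

groups = []
for i in range(len(xs) // 4):
    # The four x coordinates that the column code interpolates through
    group = [xs[i + len(xs) * j // 4] for j in range(4)]
    x = group[0]
    # All four share the same fourth power...
    assert len({pow(g, 4, modulus) for g in group}) == 1
    # ...and they are x, -x, and x times the two square roots of -1
    assert set(group) == {
        x,
        modulus - x,
        x * sqrt_minus_one % modulus,
        (modulus - x) * sqrt_minus_one % modulus,
    }
    groups.append(group)

distinct_fourth_powers = {pow(x, 4, modulus) for x in xs}
print(len(xs), "x coordinates map to", len(distinct_fourth_powers), "values of x**4")
```

With 16 x coordinates there are only 4 distinct values of \(x^{4}\), which is the fourfold reduction that each step of the recursion relies on.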
So we already have four values of \(Q(?, x^4)\), which we can use to interpolate the polynomial \(R(x) = Q(x, x^4)\), and from there calculate \(R(special\_x) = Q(special\_x, x^4) = P'(x^4)\). There are \(\frac{N}{4}\) possible values of \(x^{4}\), and this lets us easily calculate all of them.

A diagram from part 2; it helps to keep this in mind when understanding what's going on here

Our proof consists of some number (eg. 40) of random queries from the list of values of \(x^{4}\) (using the Merkle root of the column as a seed), and for each query we provide Merkle branches of the five values of \(Q(?, x^4)\):

```python
m2 = merkelize(column)

# Pseudo-randomly select y indices to sample
# (m2[1] is the Merkle root of the column)
ys = get_pseudorandom_indices(m2[1], len(column), 40)

# Compute the Merkle branches for the values in the polynomial and the column
branches = []
for y in ys:
    branches.append([mk_branch(m2, y)] +
                    [mk_branch(m, y + (len(xs) // 4) * j) for j in range(4)])
```

The verifier's job will be to verify that these five values actually do lie on the same degree \(< 4\) polynomial. From there, we recurse and do an FRI on the column, verifying that the column actually does have degree \(< \frac{N}{4}\). That really is all there is to FRI.

As a challenge exercise, you could try creating low-degree proofs of polynomial evaluations that have errors in them, and see how many errors you can get away passing the verifier with (hint, you'll need to modify the `prove_low_degree` function; with the default prover, even one error will balloon up and cause verification to fail).

The STARK

Reminder: now may be a good time to review and re-read Part 1

Now, we get to the actual meat that puts all of these pieces together: `def mk_mimc_proof(inp, steps, round_constants)`, which generates a proof of the execution result of running the MIMC function with the given input for some number of steps. First, some asserts:

assert steps