
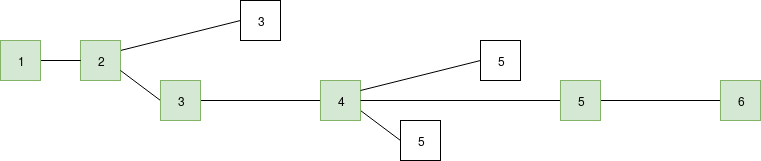

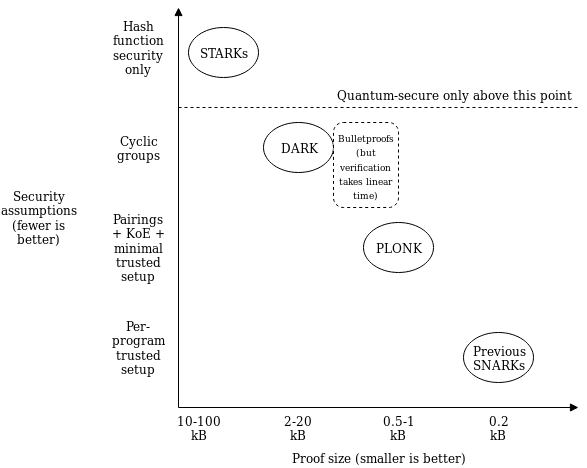

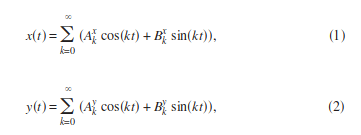

# Understanding PLONK

2019 Sep 22

Special thanks to Justin Drake, Karl Floersch, Hsiao-wei Wang, Barry Whitehat, Dankrad Feist, Kobi Gurkan and Zac Williamson for review

Very recently, Ariel Gabizon, Zac Williamson and Oana Ciobotaru announced a new general-purpose zero-knowledge proof scheme called PLONK, standing for the unwieldy quasi-backronym "Permutations over Lagrange-bases for Oecumenical Noninteractive arguments of Knowledge". While improvements to general-purpose zero-knowledge proof protocols have been coming for years, what PLONK (and the earlier but more complex SONIC and the more recent Marlin) bring to the table is a series of enhancements that may greatly improve the usability and progress of these kinds of proofs in general.

The first improvement is that while PLONK still requires a trusted setup procedure similar to that needed for the SNARKs in Zcash, it is a "universal and updateable" trusted setup. This means two things: first, instead of there being one separate trusted setup for every program you want to prove things about, there is one single trusted setup for the whole scheme after which you can use the scheme with any program (up to some maximum size chosen when making the setup). Second, there is a way for multiple parties to participate in the trusted setup such that it is secure as long as any one of them is honest, and this multi-party procedure is fully sequential: first one person participates, then the second, then the third... The full set of participants does not even need to be known ahead of time; new participants could just add themselves to the end. This makes it easy for the trusted setup to have a large number of participants, making it quite safe in practice.

The second improvement is that the "fancy cryptography" it relies on is one single standardized component, called a "polynomial commitment".
PLONK uses "Kate commitments", based on a trusted setup and elliptic curve pairings, but you can instead swap it out with other schemes, such as FRI (which would turn PLONK into a kind of STARK) or DARK (based on hidden-order groups). This means the scheme is theoretically compatible with any (achievable) tradeoff between proof size and security assumptions. What this means is that use cases that require different tradeoffs between proof size and security assumptions (or developers that have different ideological positions about this question) can still share the bulk of the same tooling for "arithmetization" - the process for converting a program into a set of polynomial equations that the polynomial commitments are then used to check. If this kind of scheme becomes widely adopted, we can thus expect rapid progress in improving shared arithmetization techniques.

## How PLONK works

Let us start with an explanation of how PLONK works, in a somewhat abstracted format that focuses on polynomial equations without immediately explaining how those equations are verified. A key ingredient in PLONK, as is the case in the QAPs used in SNARKs, is a procedure for converting a problem of the form "give me a value \(X\) such that a specific program \(P\) that I give you, when evaluated with \(X\) as an input, gives some specific result \(Y\)" into the problem "give me a set of values that satisfies a set of math equations". The program \(P\) can represent many things; for example the problem could be "give me a solution to this sudoku", which you would encode by setting \(P\) to be a sudoku verifier plus some initial values encoded and setting \(Y\) to \(1\) (ie. "yes, this solution is correct"), and a satisfying input \(X\) would be a valid solution to the sudoku.
This is done by representing \(P\) as a circuit with logic gates for addition and multiplication, and converting it into a system of equations where the variables are the values on all the wires and there is one equation per gate (eg. \(x_6 = x_4 \cdot x_7\) for multiplication, \(x_8 = x_5 + x_9\) for addition).

Here is an example of the problem of finding \(x\) such that \(P(x) = x^3 + x + 5 = 35\) (hint: \(x = 3\)):

We can label the gates and wires as follows:

On the gates and wires, we have two types of constraints: gate constraints (equations between wires attached to the same gate, eg. \(a_1 \cdot b_1 = c_1\)) and copy constraints (claims about equality of different wires anywhere in the circuit, eg. \(a_0 = a_1 = b_1 = b_2 = a_3\) or \(c_0 = a_1\)). We will need to create a structured system of equations, which will ultimately reduce to a very small number of polynomial equations, to represent both.

In PLONK, the setup for these equations is as follows. Each equation is of the following form (think: \(L\) = left, \(R\) = right, \(O\) = output, \(M\) = multiplication, \(C\) = constant):

\[ \left(Q_{L_i}\right) a_i + \left(Q_{R_i}\right) b_i + \left(Q_{O_i}\right) c_i + \left(Q_{M_i}\right) a_i b_i + Q_{C_i} = 0 \]

Each \(Q\) value is a constant; the constants in each equation (and the number of equations) will be different for each program. Each small-letter value is a variable, provided by the user: \(a_i\) is the left input wire of the \(i\)'th gate, \(b_i\) is the right input wire, and \(c_i\) is the output wire of the \(i\)'th gate. For an addition gate, we set:

\[ Q_{L_i} = 1, Q_{R_i} = 1, Q_{M_i} = 0, Q_{O_i} = -1, Q_{C_i} = 0 \]

Plugging these constants into the equation and simplifying gives us \(a_i + b_i - c_i = 0\), which is exactly the constraint that we want.
For a multiplication gate, we set:

\[ Q_{L_i} = 0, Q_{R_i} = 0, Q_{M_i} = 1, Q_{O_i} = -1, Q_{C_i} = 0 \]

For a constant gate setting \(a_i\) to some constant \(x\), we set:

\[ Q_L = 1, Q_R = 0, Q_M = 0, Q_O = 0, Q_C = -x \]

You may have noticed that each end of a wire, as well as each wire in a set of wires that clearly must have the same value (eg. \(x\)), corresponds to a distinct variable; there's nothing so far forcing the output of one gate to be the same as the input of another gate (what we call "copy constraints"). PLONK does of course have a way of enforcing copy constraints, but we'll get to this later. So now we have a problem where a prover wants to prove that they have a bunch of \(x_{a_i}, x_{b_i}\) and \(x_{c_i}\) values that satisfy a bunch of equations that are of the same form. This is still a big problem, but unlike "find a satisfying input to this computer program" it's a very structured big problem, and we have mathematical tools to "compress" it.

## From linear systems to polynomials

If you have read about STARKs or QAPs, the mechanism described in this next section will hopefully feel somewhat familiar, but if you have not that's okay too. The main ingredient here is to understand a polynomial as a mathematical tool for encapsulating a whole lot of values into a single object. Typically, we think of polynomials in "coefficient form", that is an expression like:

\[ y = x^3 - 5x^2 + 7x - 2 \]

But we can also view polynomials in "evaluation form". For example, we can think of the above as being "the" degree \(< 4\) polynomial with evaluations \((-2, 1, 0, 1)\) at the coordinates \((0, 1, 2, 3)\) respectively. Now here's the next step. Systems of many equations of the same form can be re-interpreted as a single equation over polynomials.
For example, suppose that we have the system:

\[ \begin{aligned} 2x_1 - x_2 + 3x_3 &= 8 \\ x_1 + 4x_2 - 5x_3 &= 5 \\ 8x_1 - x_2 - x_3 &= -2 \end{aligned} \]

Let us define four polynomials in evaluation form: \(L(x)\) is the degree \(< 3\) polynomial that evaluates to \((2, 1, 8)\) at the coordinates \((0, 1, 2)\), and at those same coordinates \(M(x)\) evaluates to \((-1, 4, -1)\), \(R(x)\) to \((3, -5, -1)\) and \(O(x)\) to \((8, 5, -2)\) (it is okay to directly define polynomials in this way; you can use Lagrange interpolation to convert to coefficient form). Now, consider the equation:

\[ L(x) \cdot x_1 + M(x) \cdot x_2 + R(x) \cdot x_3 - O(x) = Z(x) H(x) \]

Here, \(Z(x)\) is shorthand for \((x-0) \cdot (x-1) \cdot (x-2)\) - the minimal (nontrivial) polynomial that returns zero over the evaluation domain \((0, 1, 2)\). A solution to this equation (\(x_1 = 1, x_2 = 6, x_3 = 4, H(x) = 0\)) is also a solution to the original system of equations, except the original system does not need \(H(x)\). Notice also that in this case, \(H(x)\) is conveniently zero, but in more complex cases \(H\) may need to be nonzero.

So now we know that we can represent a large set of constraints within a small number of mathematical objects (the polynomials). But in the equations that we made above to represent the gate wire constraints, the \(x_1, x_2, x_3\) variables are different per equation. We can handle this by making the variables themselves polynomials rather than constants in the same way. And so we get:

\[ Q_L(x) a(x) + Q_R(x) b(x) + Q_O(x) c(x) + Q_M(x) a(x) b(x) + Q_C(x) = 0 \]

As before, each \(Q\) polynomial is a parameter that can be generated from the program that is being verified, and the \(a\), \(b\), \(c\) polynomials are the user-provided inputs.

## Copy constraints

Now, let us get back to "connecting" the wires.
So far, all we have is a bunch of disjoint equations about disjoint values that are independently easy to satisfy: constant gates can be satisfied by setting the value to the constant and addition and multiplication gates can simply be satisfied by setting all wires to zero! To make the problem actually challenging (and actually represent the problem encoded in the original circuit), we need to add an equation that verifies "copy constraints": constraints such as \(a(5) = c(7)\), \(c(10) = c(12)\), etc. This requires some clever trickery.

Our strategy will be to design a "coordinate pair accumulator", a polynomial \(p(x)\) which works as follows. First, let \(X(x)\) and \(Y(x)\) be two polynomials representing the \(x\) and \(y\) coordinates of a set of points (eg. to represent the set \(((0, -2), (1, 1), (2, 0), (3, 1))\) you might set \(X(x) = x\) and \(Y(x) = x^3 - 5x^2 + 7x - 2\)). Our goal will be to let \(p(x)\) represent all the points up to (but not including) the given position, so \(p(0)\) starts at \(1\), \(p(1)\) represents just the first point, \(p(2)\) the first and the second, etc. We will do this by "randomly" selecting two constants, \(v_1\) and \(v_2\), and constructing \(p(x)\) using the constraints \(p(0) = 1\) and \(p(x+1) = p(x) \cdot (v_1 + X(x) + v_2 \cdot Y(x))\) at least within the domain \((0, 1, 2, 3)\).

For example, letting \(v_1 = 3\) and \(v_2 = 2\), we get:

| \(X(x)\) | 0 | 1 | 2 | 3 | 4 |
| --- | --- | --- | --- | --- | --- |
| \(Y(x)\) | -2 | 1 | 0 | 1 | |
| \(v_1 + X(x) + v_2 \cdot Y(x)\) | -1 | 6 | 5 | 8 | |
| \(p(x)\) | 1 | -1 | -6 | -30 | -240 |

Notice that (aside from the first column) every \(p(x)\) value equals the value to the left of it multiplied by the value to the left and above it.

The result we care about is \(p(4) = -240\). Now, consider the case where instead of \(X(x) = x\), we set \(X(x) = \frac{2}{3} x^3 - 4x^2 + \frac{19}{3}x\) (that is, the polynomial that evaluates to \((0, 3, 2, 1)\) at the coordinates \((0, 1, 2, 3)\)). If you run the same procedure, you'll find that you also get \(p(4) = -240\).
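The two accumulator runs above are easy to reproduce by hand or in a few lines of Python. The following is only a sketch over plain integers (the real scheme works in a prime field), and the helper name `accumulate` is made up for illustration. It also tries a third run, not from the text, in which the two swapped points have different \(Y\) values, to show the final products diverging.

```python
def accumulate(xs, ys, v1, v2):
    """Return [p(0), p(1), ..., p(n)] for the coordinate pair accumulator.

    p(0) = 1 and p(i+1) = p(i) * (v1 + X(i) + v2 * Y(i)).
    Plain integers are used here for readability; this is an assumption
    of the sketch, not how the real protocol does arithmetic.
    """
    p = [1]
    for x, y in zip(xs, ys):
        p.append(p[-1] * (v1 + x + v2 * y))
    return p


v1, v2 = 3, 2
Y = [-2, 1, 0, 1]

# X(x) = x: the run shown in the table.
p_identity = accumulate([0, 1, 2, 3], Y, v1, v2)
print(p_identity)        # [1, -1, -6, -30, -240]

# X coordinates of positions 1 and 3 swapped; Y(1) == Y(3), so the set is unchanged.
p_swapped = accumulate([0, 3, 2, 1], Y, v1, v2)
print(p_swapped[-1])     # -240

# Hypothetical counter-example: make Y(3) differ from Y(1) and swap again.
Y_bad = [-2, 1, 0, 2]
p_bad_identity = accumulate([0, 1, 2, 3], Y_bad, v1, v2)
p_bad_swapped = accumulate([0, 3, 2, 1], Y_bad, v1, v2)
print(p_bad_identity[-1], p_bad_swapped[-1])  # -300 -320
```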
This is not a coincidence (in fact, if you randomly pick \(v_1\) and \(v_2\) from a sufficiently large field, it will almost never happen coincidentally). Rather, this happens because \(Y(1) = Y(3)\), so if you "swap the \(X\) coordinates" of the points \((1, 1)\) and \((3, 1)\) you're not changing the set of points, and because the accumulator encodes a set (as multiplication does not care about order) the value at the end will be the same.

Now we can start to see the basic technique that we will use to prove copy constraints. First, consider the simple case where we only want to prove copy constraints within one set of wires (eg. we want to prove \(a(1) = a(3)\)). We'll make two coordinate accumulators: one where \(X(x) = x\) and \(Y(x) = a(x)\), and the other where \(Y(x) = a(x)\) but \(X'(x)\) is the polynomial that evaluates to the permutation that flips (or otherwise rearranges) the values in each copy constraint; in the \(a(1) = a(3)\) case this would mean the permutation would start \(0\ 3\ 2\ 1\ 4...\). The first accumulator would be compressing \(((0, a(0)), (1, a(1)), (2, a(2)), (3, a(3)), (4, a(4))...\), the second \(((0, a(0)), (3, a(1)), (2, a(2)), (1, a(3)), (4, a(4))...\). The only way the two can give the same result is if \(a(1) = a(3)\).

To prove constraints between \(a\), \(b\) and \(c\), we use the same procedure, but instead "accumulate" together points from all three polynomials. We assign each of \(a\), \(b\), \(c\) a range of \(X\) coordinates (eg. \(a\) gets \(X_a(x) = x\), ie. \(0...n-1\), \(b\) gets \(X_b(x) = n+x\), ie. \(n...2n-1\), \(c\) gets \(X_c(x) = 2n+x\), ie. \(2n...3n-1\)). To prove copy constraints that hop between different sets of wires, the "alternate" \(X\) coordinates would be slices of a permutation across all three sets.
For example, if we want to prove \(a(2) = b(4)\) with \(n = 5\), then \(X'_a(x)\) would have evaluations \(\{0, 1, 9, 3, 4\}\) and \(X'_b(x)\) would have evaluations \(\{5, 6, 7, 8, 2\}\) (notice the \(2\) and \(9\) flipped, where \(9\) corresponds to the \(b_4\) wire). Often, \(X'_a(x)\), \(X'_b(x)\) and \(X'_c(x)\) are also called \(\sigma_a(x)\), \(\sigma_b(x)\) and \(\sigma_c(x)\).

Then, instead of checking equality within one run of the procedure (ie. checking \(p(4) = p'(4)\) as before), we would check the product of the three different runs on each side:

\[ p_a(n) \cdot p_b(n) \cdot p_c(n) = p'_a(n) \cdot p'_b(n) \cdot p'_c(n) \]

The product of the three \(p(n)\) evaluations on each side accumulates all coordinate pairs in the \(a\), \(b\) and \(c\) runs on each side together, so this allows us to do the same check as before, except that we can now check copy constraints not just between positions within one of the three sets of wires \(a\), \(b\) or \(c\), but also between one set of wires and another (eg. as in \(a(2) = b(4)\)).

And that's all there is to it!

## Putting it all together

In reality, all of this math is done not over integers, but over a prime field; check the section "A Modular Math Interlude" here for a description of what prime fields are. Also, for mathematical reasons perhaps best appreciated by reading and understanding this article on FFT implementation, instead of representing wire indices with \(x = 0...n-1\), we'll use powers of \(\omega\): \(1, \omega, \omega^2 ... \omega^{n-1}\) where \(\omega\) is a high-order root-of-unity in the field.
This changes nothing about the math, except that the coordinate pair accumulator constraint checking equation changes from \(p(x + 1) = p(x) \cdot (v_1 + X(x) + v_2 \cdot Y(x))\) to \(p(\omega \cdot x) = p(x) \cdot (v_1 + X(x) + v_2 \cdot Y(x))\), and instead of using \(0..n-1\), \(n..2n-1\), \(2n..3n-1\) as coordinates we use \(\omega^i, g \cdot \omega^i\) and \(g^2 \cdot \omega^i\) where \(g\) can be some random high-order element in the field.

Now let's write out all the equations we need to check. First, the main gate-constraint satisfaction check:

\[ Q_L(x) a(x) + Q_R(x) b(x) + Q_O(x) c(x) + Q_M(x) a(x) b(x) + Q_C(x) = 0 \]

Then the polynomial accumulator transition constraint (note: think of "\(= Z(x) \cdot H(x)\)" as meaning "equals zero for all coordinates within some particular domain that we care about, but not necessarily outside of it"):

\[ \begin{aligned} P_a(\omega x) - P_a(x)\left(v_1 + x + v_2 a(x)\right) &= Z(x) H_1(x) \\ P_{a'}(\omega x) - P_{a'}(x)\left(v_1 + \sigma_a(x) + v_2 a(x)\right) &= Z(x) H_2(x) \\ P_b(\omega x) - P_b(x)\left(v_1 + g x + v_2 b(x)\right) &= Z(x) H_3(x) \\ P_{b'}(\omega x) - P_{b'}(x)\left(v_1 + \sigma_b(x) + v_2 b(x)\right) &= Z(x) H_4(x) \\ P_c(\omega x) - P_c(x)\left(v_1 + g^2 x + v_2 c(x)\right) &= Z(x) H_5(x) \\ P_{c'}(\omega x) - P_{c'}(x)\left(v_1 + \sigma_c(x) + v_2 c(x)\right) &= Z(x) H_6(x) \end{aligned} \]

Then the polynomial accumulator starting and ending constraints:

\[ \begin{aligned} P_a(1) = P_b(1) = P_c(1) = P_{a'}(1) = P_{b'}(1) = P_{c'}(1) &= 1 \\ P_a\left(\omega^n\right) P_b\left(\omega^n\right) P_c\left(\omega^n\right) &= P_{a'}\left(\omega^n\right) P_{b'}\left(\omega^n\right) P_{c'}\left(\omega^n\right) \end{aligned} \]

The user-provided polynomials are:

- The wire assignments \(a(x), b(x), c(x)\)
- The coordinate accumulators \(P_a(x), P_b(x), P_c(x), P_{a'}(x), P_{b'}(x), P_{c'}(x)\)
- The quotients \(H(x)\) and \(H_1(x)...H_6(x)\)

The program-specific polynomials that the prover and verifier need to compute ahead of time are:

- \(Q_L(x), Q_R(x), Q_O(x), Q_M(x), Q_C(x)\), which together represent the gates in the circuit (note that \(Q_C(x)\) encodes public inputs, so it may need to be computed or modified at runtime)
- The "permutation polynomials" \(\sigma_a(x), \sigma_b(x)\) and \(\sigma_c(x)\), which encode the copy constraints between the \(a\), \(b\) and \(c\) wires

Note that the verifier need only store commitments to these polynomials. The only remaining polynomial in the above equations is \(Z(x) = (x - 1) \cdot (x - \omega) \cdot ... \cdot (x - \omega^{n-1})\) which is designed to evaluate to zero at all those points. Fortunately, \(\omega\) can be chosen to make this polynomial very easy to evaluate: the usual technique is to choose \(\omega\) to satisfy \(\omega^n = 1\), in which case \(Z(x) = x^n - 1\).

There is one nuance here: the constraint between \(P_a(\omega^{i+1})\) and \(P_a(\omega^i)\) can't be true across the entire circle of powers of \(\omega\); it's almost always false at \(\omega^{n-1}\) as the next coordinate is \(\omega^n = 1\) which brings us back to the start of the "accumulator"; to fix this, we can modify the constraint to say "either the constraint is true or \(x = \omega^{n-1}\)", which one can do by multiplying \(x - \omega^{n-1}\) into the constraint so it equals zero at that point.

The only constraint on \(v_1\) and \(v_2\) is that the user must not be able to choose \(a(x), b(x)\) or \(c(x)\) after \(v_1\) and \(v_2\) become known, so we can satisfy this by computing \(v_1\) and \(v_2\) from hashes of commitments to \(a(x), b(x)\) and \(c(x)\).

So now we've turned the program satisfaction problem into a simple problem of satisfying a few equations with polynomials, and there are some optimizations in PLONK that allow us to remove many of the polynomials in the above equations that I will not go into to preserve simplicity. But the polynomials themselves, both the program-specific parameters and the user inputs, are big.
So the next question is, how do we get around this so we can make the proof short?

## Polynomial commitments

A polynomial commitment is a short object that "represents" a polynomial, and allows you to verify evaluations of that polynomial, without needing to actually contain all of the data in the polynomial. That is, if someone gives you a commitment \(c\) representing \(P(x)\), they can give you a proof that can convince you, for some specific \(z\), what the value of \(P(z)\) is. There is a further mathematical result that says that, over a sufficiently big field, if certain kinds of equations (chosen before \(z\) is known) about polynomials evaluated at a random \(z\) are true, those same equations are true about the whole polynomial as well. For example, if \(P(z) \cdot Q(z) + R(z) = S(z) + 5\), then we know that it's overwhelmingly likely that \(P(x) \cdot Q(x) + R(x) = S(x) + 5\) in general. Using such polynomial commitments, we could very easily check all of the polynomial equations above - make the commitments, use them as input to generate \(z\), prove what the evaluations are of each polynomial at \(z\), and then run the equations with these evaluations instead of the original polynomials. But how do these commitments work?

There are two parts: the commitment to the polynomial \(P(x) \rightarrow c\), and the opening to a value \(P(z)\) at some \(z\). To make a commitment, there are many techniques; one example is FRI, and another is Kate commitments which I will describe below. To prove an opening, it turns out that there is a simple generic "subtract-and-divide" trick: to prove that \(P(z) = a\), you prove that

\[ \frac{P(x) - a}{x - z} \]

is also a polynomial (using another polynomial commitment). This works because if the quotient is a polynomial (ie. it is not fractional), then \(x - z\) is a factor of \(P(x) - a\), so \((P(x) - a)(z) = 0\), so \(P(z) = a\). Try it with some polynomial, eg. \(P(x) = x^3 + 2 \cdot x^2 + 5\) with \((z = 6, a = 293)\), yourself; and try \((z = 6, a = 292)\) and see how it fails (if you're lazy, see WolframAlpha here vs here). Note also a generic optimization: to prove many openings of many polynomials at the same time, after committing to the outputs do the subtract-and-divide trick on a random linear combination of the polynomials and the outputs.

So how do the commitments themselves work? Kate commitments are, fortunately, much simpler than FRI. A trusted-setup procedure generates a set of elliptic curve points \(G, G \cdot s, G \cdot s^2\) .... \(G \cdot s^n\), as well as \(G_2 \cdot s\), where \(G\) and \(G_2\) are the generators of two elliptic curve groups and \(s\) is a secret that is forgotten once the procedure is finished (note that there is a multi-party version of this setup, which is secure as long as at least one of the participants forgets their share of the secret). These points are published and considered to be "the proving key" of the scheme; anyone who needs to make a polynomial commitment will need to use these points. A commitment to a degree-\(d\) polynomial is made by multiplying each of the first \(d+1\) points in the proving key by the corresponding coefficient in the polynomial, and adding the results together.

Notice that this provides an "evaluation" of that polynomial at \(s\), without knowing \(s\). For example, \(x^3 + 2x^2 + 5\) would be represented by \((G \cdot s^3) + 2 \cdot (G \cdot s^2) + 5 \cdot G\). We can use the notation \([P]\) to refer to \(P\) encoded in this way (ie. \(G \cdot P(s)\)). When doing the subtract-and-divide trick, you can prove that the two polynomials actually satisfy the relation by using elliptic curve pairings: check that \(e([P] - G \cdot a, G_2) = e([Q], [x] - G_2 \cdot z)\) as a proxy for checking that \(P(x) - a = Q(x) \cdot (x - z)\).

But there are more recently other types of polynomial commitments coming out too.
A new scheme called DARK ("Diophantine arguments of knowledge") uses "hidden order groups" such as class groups to implement another kind of polynomial commitment. Hidden order groups are unique because they allow you to compress arbitrarily large numbers into group elements, even numbers much larger than the size of the group element, in a way that can't be "spoofed"; constructions from VDFs to accumulators to range proofs to polynomial commitments can be built on top of this. Another option is to use bulletproofs, using regular elliptic curve groups at the cost of the proof taking much longer to verify. Because polynomial commitments are much simpler than full-on zero knowledge proof schemes, we can expect more such schemes to get created in the future.

## Recap

To finish off, let's go over the scheme again. Given a program \(P\), you convert it into a circuit, and generate a set of equations that look like this:

\[ \left(Q_{L_i}\right) a_i + \left(Q_{R_i}\right) b_i + \left(Q_{O_i}\right) c_i + \left(Q_{M_i}\right) a_i b_i + Q_{C_i} = 0 \]

You then convert this set of equations into a single polynomial equation:

\[ Q_L(x) a(x) + Q_R(x) b(x) + Q_O(x) c(x) + Q_M(x) a(x) b(x) + Q_C(x) = 0 \]

You also generate from the circuit a list of copy constraints. From these copy constraints you generate the three polynomials representing the permuted wire indices: \(\sigma_a(x), \sigma_b(x), \sigma_c(x)\). To generate a proof, you compute the values of all the wires and convert them into three polynomials: \(a(x), b(x), c(x)\). You also compute six "coordinate pair accumulator" polynomials as part of the permutation-check argument. Finally you compute the cofactors \(H_i(x)\).

There is a set of equations between the polynomials that need to be checked; you can do this by making commitments to the polynomials, opening them at some random \(z\) (along with proofs that the openings are correct), and running the equations on these evaluations instead of the original polynomials.
The proof itself is just a few commitments and openings and can be checked with a few equations. And that's all there is to it!
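
For readers who want to try the "subtract-and-divide" trick from the polynomial commitments section without WolframAlpha, here is a small sketch using synthetic division over plain integers. The function name `divide_by_linear` and the coefficient ordering (highest degree first) are choices made for this illustration only; a real implementation would work in a prime field and check the relation through commitments rather than on raw coefficients.

```python
def divide_by_linear(coeffs, z):
    """Divide a polynomial by (x - z) using synthetic division.

    coeffs lists coefficients from the highest degree down to the constant.
    Returns (quotient_coeffs, remainder).
    """
    out = []
    carry = 0
    for c in coeffs:
        carry = carry * z + c
        out.append(carry)
    remainder = out.pop()  # the last value is the remainder
    return out, remainder


# P(x) = x^3 + 2x^2 + 5, as in the text.
P = [1, 2, 0, 5]
z = 6

# Correct claim: P(6) = 293, so (P(x) - 293) / (x - 6) is a polynomial.
good = P[:-1] + [P[-1] - 293]
q_good, r_good = divide_by_linear(good, z)
print(q_good, r_good)   # [1, 8, 48] 0  -> Q(x) = x^2 + 8x + 48

# Wrong claim: P(6) = 292 leaves a nonzero remainder, so no polynomial quotient exists.
bad = P[:-1] + [P[-1] - 292]
q_bad, r_bad = divide_by_linear(bad, z)
print(r_bad)            # 1
```

The remainder is exactly \(P(z) - a\), which is why it vanishes only when the claimed evaluation is correct.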

Understanding PLONK Understanding PLONK2019 Sep 22 See all posts Understanding PLONK Special thanks to Justin Drake, Karl Floersch, Hsiao-wei Wang, Barry Whitehat, Dankrad Feist, Kobi Gurkan and Zac Williamson for reviewVery recently, Ariel Gabizon, Zac Williamson and Oana Ciobotaru announced a new general-purpose zero-knowledge proof scheme called PLONK, standing for the unwieldy quasi-backronym "Permutations over Lagrange-bases for Oecumenical Noninteractive arguments of Knowledge". While improvements to general-purpose zero-knowledge proof protocols have been coming for years, what PLONK (and the earlier but more complex SONIC and the more recent Marlin) bring to the table is a series of enhancements that may greatly improve the usability and progress of these kinds of proofs in general.The first improvement is that while PLONK still requires a trusted setup procedure similar to that needed for the SNARKs in Zcash, it is a "universal and updateable" trusted setup. This means two things: first, instead of there being one separate trusted setup for every program you want to prove things about, there is one single trusted setup for the whole scheme after which you can use the scheme with any program (up to some maximum size chosen when making the setup). Second, there is a way for multiple parties to participate in the trusted setup such that it is secure as long as any one of them is honest, and this multi-party procedure is fully sequential: first one person participates, then the second, then the third... The full set of participants does not even need to be known ahead of time; new participants could just add themselves to the end. This makes it easy for the trusted setup to have a large number of participants, making it quite safe in practice.The second improvement is that the "fancy cryptography" it relies on is one single standardized component, called a "polynomial commitment". 
PLONK uses "Kate commitments", based on a trusted setup and elliptic curve pairings, but you can instead swap it out with other schemes, such as FRI (which would turn PLONK into a kind of STARK) or DARK (based on hidden-order groups). This means the scheme is theoretically compatible with any (achievable) tradeoff between proof size and security assumptions. What this means is that use cases that require different tradeoffs between proof size and security assumptions (or developers that have different ideological positions about this question) can still share the bulk of the same tooling for "arithmetization" - the process for converting a program into a set of polynomial equations that the polynomial commitments are then used to check. If this kind of scheme becomes widely adopted, we can thus expect rapid progress in improving shared arithmetization techniques.How PLONK worksLet us start with an explanation of how PLONK works, in a somewhat abstracted format that focuses on polynomial equations without immediately explaining how those equations are verified. A key ingredient in PLONK, as is the case in the QAPs used in SNARKs, is a procedure for converting a problem of the form "give me a value \(X\) such that a specific program \(P\) that I give you, when evaluated with \(X\) as an input, gives some specific result \(Y\)" into the problem "give me a set of values that satisfies a set of math equations". The program \(P\) can represent many things; for example the problem could be "give me a solution to this sudoku", which you would encode by setting \(P\) to be a sudoku verifier plus some initial values encoded and setting \(Y\) to \(1\) (ie. "yes, this solution is correct"), and a satisfying input \(X\) would be a valid solution to the sudoku. 
This is done by representing \(P\) as a circuit with logic gates for addition and multiplication, and converting it into a system of equations where the variables are the values on all the wires and there is one equation per gate (eg. \(x_6 = x_4 \cdot x_7\) for multiplication, \(x_8 = x_5 + x_9\) for addition).Here is an example of the problem of finding \(x\) such that \(P(x) = x^3 + x + 5 = 35\) (hint: \(x = 3\)): We can label the gates and wires as follows: On the gates and wires, we have two types of constraints: gate constraints (equations between wires attached to the same gate, eg. \(a_1 \cdot b_1 = c_1\)) and copy constraints (claims about equality of different wires anywhere in the circuit, eg. \(a_0 = a_1 = b_1 = b_2 = a_3\) or \(c_0 = a_1\)). We will need to create a structured system of equations, which will ultimately reduce to a very small number of polynomial equations, to represent both.In PLONK, the setup for these equations is as follows. Each equation is of the following form (think: \(L\) = left, \(R\) = right, \(O\) = output, \(M\) = multiplication, \(C\) = constant):\[ \left(Q_}\right) a_+\left(Q_}\right) b_+\left(Q_}\right) c_+\left(Q_}\right) a_ b_+Q_}=0 \]Each \(Q\) value is a constant; the constants in each equation (and the number of equations) will be different for each program. Each small-letter value is a variable, provided by the user: \(a_i\) is the left input wire of the \(i\)'th gate, \(b_i\) is the right input wire, and \(c_i\) is the output wire of the \(i\)'th gate. For an addition gate, we set:\[ Q_}=1, Q_}=1, Q_}=0, Q_}=-1, Q_}=0 \]Plugging these constants into the equation and simplifying gives us \(a_i + b_i - c_i = 0\), which is exactly the constraint that we want. 
For a multiplication gate, we set:\[ Q_}=0, Q_}=0, Q_}=1, Q_}=-1, Q_}=0 \]For a constant gate setting \(a_i\) to some constant \(x\), we set:\[ Q_=1, Q_=0, Q_=0, Q_=0, Q_=-x \]You may have noticed that each end of a wire, as well as each wire in a set of wires that clearly must have the same value (eg. \(x\)), corresponds to a distinct variable; there's nothing so far forcing the output of one gate to be the same as the input of another gate (what we call "copy constraints"). PLONK does of course have a way of enforcing copy constraints, but we'll get to this later. So now we have a problem where a prover wants to prove that they have a bunch of \(x_, x_\) and \(x_\) values that satisfy a bunch of equations that are of the same form. This is still a big problem, but unlike "find a satisfying input to this computer program" it's a very structured big problem, and we have mathematical tools to "compress" it.From linear systems to polynomialsIf you have read about STARKs or QAPs, the mechanism described in this next section will hopefully feel somewhat familiar, but if you have not that's okay too. The main ingredient here is to understand a polynomial as a mathematical tool for encapsulating a whole lot of values into a single object. Typically, we think of polynomials in "coefficient form", that is an expression like:\[ y=x^-5 x^+7 x-2 \]But we can also view polynomials in "evaluation form". For example, we can think of the above as being "the" degree \(< 4\) polynomial with evaluations \((-2, 1, 0, 1)\) at the coordinates \((0, 1, 2, 3)\) respectively. Now here's the next step. Systems of many equations of the same form can be re-interpreted as a single equation over polynomials. 
For example, suppose that we have the system:\[ \begin-x_+3 x_=8} \\ +4 x_-5 x_=5} \\ -x_-x_=-2}\end \]Let us define four polynomials in evaluation form: \(L(x)\) is the degree \(< 3\) polynomial that evaluates to \((2, 1, 8)\) at the coordinates \((0, 1, 2)\), and at those same coordinates \(M(x)\) evaluates to \((-1, 4, -1)\), \(R(x)\) to \((3, -5, -1)\) and \(O(x)\) to \((8, 5, -2)\) (it is okay to directly define polynomials in this way; you can use Lagrange interpolation to convert to coefficient form). Now, consider the equation:\[ L(x) \cdot x_+M(x) \cdot x_+R(x) \cdot x_-O(x)=Z(x) H(x) \]Here, \(Z(x)\) is shorthand for \((x-0) \cdot (x-1) \cdot (x-2)\) - the minimal (nontrivial) polynomial that returns zero over the evaluation domain \((0, 1, 2)\). A solution to this equation (\(x_1 = 1, x_2 = 6, x_3 = 4, H(x) = 0\)) is also a solution to the original system of equations, except the original system does not need \(H(x)\). Notice also that in this case, \(H(x)\) is conveniently zero, but in more complex cases \(H\) may need to be nonzero.So now we know that we can represent a large set of constraints within a small number of mathematical objects (the polynomials). But in the equations that we made above to represent the gate wire constraints, the \(x_1, x_2, x_3\) variables are different per equation. We can handle this by making the variables themselves polynomials rather than constants in the same way. And so we get:\[ Q_(x) a(x)+Q_(x) b(x)+Q_(x) c(x)+Q_(x) a(x) b(x)+Q_(x)=0 \]As before, each \(Q\) polynomial is a parameter that can be generated from the program that is being verified, and the \(a\), \(b\), \(c\) polynomials are the user-provided inputs.Copy constraintsNow, let us get back to "connecting" the wires. 
So far, all we have is a bunch of disjoint equations about disjoint values that are independently easy to satisfy: constant gates can be satisfied by setting the value to the constant and addition and multiplication gates can simply be satisfied by setting all wires to zero! To make the problem actually challenging (and actually represent the problem encoded in the original circuit), we need to add an equation that verifies "copy constraints": constraints such as \(a(5) = c(7)\), \(c(10) = c(12)\), etc. This requires some clever trickery.

Our strategy will be to design a "coordinate pair accumulator", a polynomial \(p(x)\) which works as follows. First, let \(X(x)\) and \(Y(x)\) be two polynomials representing the \(x\) and \(y\) coordinates of a set of points (eg. to represent the set \(((0, -2), (1, 1), (2, 0), (3, 1))\) you might set \(X(x) = x\) and \(Y(x) = x^3 - 5x^2 + 7x - 2\)). Our goal will be to let \(p(x)\) represent all the points up to (but not including) the given position, so \(p(0)\) starts at \(1\), \(p(1)\) represents just the first point, \(p(2)\) the first and the second, etc. We will do this by "randomly" selecting two constants, \(v_1\) and \(v_2\), and constructing \(p(x)\) using the constraints \(p(0) = 1\) and \(p(x+1) = p(x) \cdot (v_1 + X(x) + v_2 \cdot Y(x))\) at least within the domain \((0, 1, 2, 3)\).

For example, letting \(v_1 = 3\) and \(v_2 = 2\), we get:

| \(X(x)\) | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| \(Y(x)\) | -2 | 1 | 0 | 1 | |
| \(v_1 + X(x) + v_2 \cdot Y(x)\) | -1 | 6 | 5 | 8 | |
| \(p(x)\) | 1 | -1 | -6 | -30 | -240 |

Notice that (aside from the first column) every \(p(x)\) value equals the value to the left of it multiplied by the value to the left and above it.

The result we care about is \(p(4) = -240\). Now, consider the case where instead of \(X(x) = x\), we set \(X(x) = \frac{2}{3} x^3 - 4x^2 + \frac{19}{3}x\) (that is, the polynomial that evaluates to \((0, 3, 2, 1)\) at the coordinates \((0, 1, 2, 3)\)). If you run the same procedure, you'll find that you also get \(p(4) = -240\).
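The arithmetic in this example is easy to reproduce. The following is only a sketch over plain integers (the real protocol works over a prime field), and the function name `accumulate` is made up for illustration. It runs the accumulator once with \(X\) evaluating to \((0, 1, 2, 3)\) and once with the swapped coordinates \((0, 3, 2, 1)\).

```python
def accumulate(xs, ys, v1, v2):
    """Illustrative helper (not from the article).

    Returns [p(0), p(1), ..., p(n)] with p(0) = 1 and
    p(i+1) = p(i) * (v1 + X(i) + v2 * Y(i)).
    """
    p = [1]
    for x, y in zip(xs, ys):
        p.append(p[-1] * (v1 + x + v2 * y))
    return p


ys = [-2, 1, 0, 1]   # Y(x) = x^3 - 5x^2 + 7x - 2 evaluated at x = 0..3
v1, v2 = 3, 2        # the "random" constants used in the example

p_identity = accumulate([0, 1, 2, 3], ys, v1, v2)
p_swapped = accumulate([0, 3, 2, 1], ys, v1, v2)

print(p_identity)  # [1, -1, -6, -30, -240]
print(p_swapped)   # [1, -1, -8, -40, -240]
```

The intermediate values differ between the two runs, but both end at \(-240\).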
This is not a coincidence (in fact, if you randomly pick \(v_1\) and \(v_2\) from a sufficiently large field, it will almost never happen coincidentally). Rather, this happens because \(Y(1) = Y(3)\), so if you "swap the \(X\) coordinates" of the points \((1, 1)\) and \((3, 1)\) you're not changing the set of points, and because the accumulator encodes a set (as multiplication does not care about order) the value at the end will be the same.

Now we can start to see the basic technique that we will use to prove copy constraints. First, consider the simple case where we only want to prove copy constraints within one set of wires (eg. we want to prove \(a(1) = a(3)\)). We'll make two coordinate accumulators: one where \(X(x) = x\) and \(Y(x) = a(x)\), and the other where \(Y(x) = a(x)\) but \(X'(x)\) is the polynomial that evaluates to the permutation that flips (or otherwise rearranges) the values in each copy constraint; in the \(a(1) = a(3)\) case this would mean the permutation would start \(0, 3, 2, 1, 4...\). The first accumulator would be compressing \((0, a(0)), (1, a(1)), (2, a(2)), (3, a(3)), (4, a(4))...\), the second \((0, a(0)), (3, a(1)), (2, a(2)), (1, a(3)), (4, a(4))...\). The only way the two can give the same result is if \(a(1) = a(3)\).

To prove constraints between \(a\), \(b\) and \(c\), we use the same procedure, but instead "accumulate" together points from all three polynomials. We assign each of \(a\), \(b\), \(c\) a range of \(X\) coordinates (eg. \(a\) gets \(X_a(x) = x\), ie. \(0...n-1\), \(b\) gets \(X_b(x) = n+x\), ie. \(n...2n-1\), \(c\) gets \(X_c(x) = 2n+x\), ie. \(2n...3n-1\)). To prove copy constraints that hop between different sets of wires, the "alternate" \(X\) coordinates would be slices of a permutation across all three sets.
For example, if we want to prove \(a(2) = b(4)\) with \(n = 5\), then \(X'_a(x)\) would have evaluations \(\{0, 1, 9, 3, 4\}\) and \(X'_b(x)\) would have evaluations \(\{5, 6, 7, 8, 2\}\) (notice the \(2\) and \(9\) flipped, where \(9\) corresponds to the \(b_4\) wire). Often, \(X'_a(x)\), \(X'_b(x)\) and \(X'_c(x)\) are also called \(\sigma_a(x)\), \(\sigma_b(x)\) and \(\sigma_c(x)\).

Instead of checking equality within one run of the procedure (ie. checking \(p(4) = p'(4)\) as before), we would then check the product of the three different runs on each side:

\[ p_a(n) \cdot p_b(n) \cdot p_c(n)=p'_a(n) \cdot p'_b(n) \cdot p'_c(n) \]

The product of the three \(p(n)\) evaluations on each side accumulates all coordinate pairs in the \(a\), \(b\) and \(c\) runs on each side together, so this allows us to do the same check as before, except that we can now check copy constraints not just between positions within one of the three sets of wires \(a\), \(b\) or \(c\), but also between one set of wires and another (eg. as in \(a(2) = b(4)\)).

And that's all there is to it!

Putting it all together

In reality, all of this math is done not over integers, but over a prime field; check the section "A Modular Math Interlude" here for a description of what prime fields are. Also, for mathematical reasons perhaps best appreciated by reading and understanding this article on FFT implementation, instead of representing wire indices with \(x = 0...n-1\), we'll use powers of \(\omega\): \(1, \omega, \omega^2, ..., \omega^{n-1}\) where \(\omega\) is a high-order root-of-unity in the field.
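To make the switch from integer indices to powers of \(\omega\) concrete, here is a toy sketch. The field size 17, the domain size \(n = 4\) and the helper name `find_root_of_unity` are assumptions chosen only for illustration; a real deployment would use a much larger prime and would not search by brute force.

```python
def find_root_of_unity(p, n):
    """Hypothetical helper: return an element of order exactly n modulo prime p.

    Brute-force search, suitable for toy fields only. Requires n to divide p - 1.
    """
    assert (p - 1) % n == 0
    for w in range(2, p):
        powers = [pow(w, k, p) for k in range(1, n + 1)]
        # The order is exactly n if w^n == 1 and no smaller positive power is 1.
        if powers[-1] == 1 and 1 not in powers[:-1]:
            return w
    raise ValueError("no element of order n found")


p, n = 17, 4                      # toy parameters, assumed for illustration
omega = find_root_of_unity(p, n)
domain = [pow(omega, i, p) for i in range(n)]
print(omega, domain)              # 4 [1, 4, 16, 13]

# Multiplying by omega moves to the next domain element and wraps around.
steps = [(x * omega) % p for x in domain]
print(steps)                      # [4, 16, 13, 1]
```

Multiplying a domain element by \(\omega\) moves to the next one (and from \(\omega^{n-1}\) back to \(1\)), so stepping from \(x\) to \(\omega \cdot x\) plays the role that stepping from \(x\) to \(x + 1\) played with integer indices.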
This changes nothing about the math, except that the coordinate pair accumulator constraint checking equation changes from \(p(x + 1) = p(x) \cdot (v_1 + X(x) + v_2 \cdot Y(x))\) to \(p(\omega \cdot x) = p(x) \cdot (v_1 + X(x) + v_2 \cdot Y(x))\), and instead of using \(0..n-1\), \(n..2n-1\), \(2n..3n-1\) as coordinates we use \(\omega^i, g \cdot \omega^i\) and \(g^2 \cdot \omega^i\) where \(g\) can be some random high-order element in the field.

Now let's write out all the equations we need to check. First, the main gate-constraint satisfaction check:

\[ Q_L(x) a(x)+Q_R(x) b(x)+Q_O(x) c(x)+Q_M(x) a(x) b(x)+Q_C(x)=0 \]

Then the polynomial accumulator transition constraint (note: think of "\(= Z(x) \cdot H(x)\)" as meaning "equals zero for all coordinates within some particular domain that we care about, but not necessarily outside of it"):

\[ \begin{array}{l}{P_a(\omega x)-P_a(x)\left(v_1+x+v_2 a(x)\right)=Z(x) H_1(x)} \\ {P_{a'}(\omega x)-P_{a'}(x)\left(v_1+\sigma_a(x)+v_2 a(x)\right)=Z(x) H_2(x)} \\ {P_b(\omega x)-P_b(x)\left(v_1+g x+v_2 b(x)\right)=Z(x) H_3(x)} \\ {P_{b'}(\omega x)-P_{b'}(x)\left(v_1+\sigma_b(x)+v_2 b(x)\right)=Z(x) H_4(x)} \\ {P_c(\omega x)-P_c(x)\left(v_1+g^2 x+v_2 c(x)\right)=Z(x) H_5(x)} \\ {P_{c'}(\omega x)-P_{c'}(x)\left(v_1+\sigma_c(x)+v_2 c(x)\right)=Z(x) H_6(x)}\end{array} \]

Then the polynomial accumulator starting and ending constraints:

\[ \begin{array}{l}{P_a(1)=P_b(1)=P_c(1)=P_{a'}(1)=P_{b'}(1)=P_{c'}(1)=1} \\ {P_a\left(\omega^{n-1}\right) P_b\left(\omega^{n-1}\right) P_c\left(\omega^{n-1}\right)=P_{a'}\left(\omega^{n-1}\right) P_{b'}\left(\omega^{n-1}\right) P_{c'}\left(\omega^{n-1}\right)}\end{array} \]

The user-provided polynomials are:

- The wire assignments \(a(x), b(x), c(x)\)
- The coordinate accumulators \(P_a(x), P_b(x), P_c(x), P_{a'}(x), P_{b'}(x), P_{c'}(x)\)
- The quotients \(H(x)\) and \(H_1(x)...H_6(x)\)

The program-specific polynomials that the prover and verifier need to compute ahead of time are:

- \(Q_L(x), Q_R(x), Q_O(x), Q_M(x), Q_C(x)\), which together represent the gates in the circuit (note that \(Q_C(x)\) encodes public inputs, so it may need to be computed or modified at runtime)
- The "permutation polynomials" \(\sigma_a(x), \sigma_b(x)\) and \(\sigma_c(x)\), which encode the copy constraints between the \(a\), \(b\) and \(c\) wires

Note that the verifier need only store commitments to these polynomials. The only remaining polynomial in the above equations is \(Z(x) = (x - 1) \cdot (x - \omega) \cdot ... \cdot (x - \omega^{n-1})\) which is designed to evaluate to zero at all those points. Fortunately, \(\omega\) can be chosen to make this polynomial very easy to evaluate: the usual technique is to choose \(\omega\) to satisfy \(\omega^n = 1\), in which case \(Z(x) = x^n - 1\).

There is one nuance here: the constraint between \(P_a(\omega^{i+1})\) and \(P_a(\omega^i)\) can't be true across the entire circle of powers of \(\omega\); it's almost always false at \(\omega^{n-1}\) as the next coordinate is \(\omega^n = 1\) which brings us back to the start of the "accumulator"; to fix this, we can modify the constraint to say "either the constraint is true or \(x = \omega^{n-1}\)", which one can do by multiplying \(x - \omega^{n-1}\) into the constraint so it equals zero at that point.

The only constraint on \(v_1\) and \(v_2\) is that the user must not be able to choose \(a(x), b(x)\) or \(c(x)\) after \(v_1\) and \(v_2\) become known, so we can satisfy this by computing \(v_1\) and \(v_2\) from hashes of commitments to \(a(x), b(x)\) and \(c(x)\).

So now we've turned the program satisfaction problem into a simple problem of satisfying a few equations with polynomials, and there are some optimizations in PLONK that allow us to remove many of the polynomials in the above equations that I will not go into to preserve simplicity. But the polynomials themselves, both the program-specific parameters and the user inputs, are big.
So the next question is, how do we get around this so we can make the proof short?

Polynomial commitments

A polynomial commitment is a short object that "represents" a polynomial, and allows you to verify evaluations of that polynomial, without needing to actually contain all of the data in the polynomial. That is, if someone gives you a commitment \(c\) representing \(P(x)\), they can give you a proof that can convince you, for some specific \(z\), what the value of \(P(z)\) is. There is a further mathematical result that says that, over a sufficiently big field, if certain kinds of equations (chosen before \(z\) is known) about polynomials evaluated at a random \(z\) are true, those same equations are true about the whole polynomial as well. For example, if \(P(z) \cdot Q(z) + R(z) = S(z) + 5\), then we know that it's overwhelmingly likely that \(P(x) \cdot Q(x) + R(x) = S(x) + 5\) in general. Using such polynomial commitments, we could very easily check all of the polynomial equations above - make the commitments, use them as input to generate \(z\), prove what the evaluations are of each polynomial at \(z\), and then run the equations with these evaluations instead of the original polynomials. But how do these commitments work?

There are two parts: the commitment to the polynomial \(P(x) \rightarrow c\), and the opening to a value \(P(z)\) at some \(z\). To make a commitment, there are many techniques; one example is FRI, and another is Kate commitments which I will describe below. To prove an opening, it turns out that there is a simple generic "subtract-and-divide" trick: to prove that \(P(z) = a\), you prove that

\[ \frac{P(x)-a}{x-z} \]

is also a polynomial (using another polynomial commitment). This works because if the quotient is a polynomial (ie. it is not fractional), then \(x - z\) is a factor of \(P(x) - a\), so \(P(x) - a\) evaluates to zero at \(x = z\), so \(P(z) = a\). Try it with some polynomial, eg.
\(P(x) = x^3 + 2 \cdot x^2 + 5\) with \((z = 6, a = 293)\), yourself; and try \((z = 6, a = 292)\) and see how it fails (if you're lazy, see WolframAlpha here vs here). Note also a generic optimization: to prove many openings of many polynomials at the same time, after committing to the outputs do the subtract-and-divide trick on a random linear combination of the polynomials and the outputs.

So how do the commitments themselves work? Kate commitments are, fortunately, much simpler than FRI. A trusted-setup procedure generates a set of elliptic curve points \(G, G \cdot s, G \cdot s^2, ..., G \cdot s^n\), as well as \(G_2 \cdot s\), where \(G\) and \(G_2\) are the generators of two elliptic curve groups and \(s\) is a secret that is forgotten once the procedure is finished (note that there is a multi-party version of this setup, which is secure as long as at least one of the participants forgets their share of the secret). These points are published and considered to be "the proving key" of the scheme; anyone who needs to make a polynomial commitment will need to use these points. A commitment to a degree-\(d\) polynomial is made by multiplying each of the first \(d+1\) points in the proving key by the corresponding coefficient in the polynomial, and adding the results together.

Notice that this provides an "evaluation" of that polynomial at \(s\), without knowing \(s\). For example, \(x^3 + 2x^2+5\) would be represented by \((G \cdot s^3) + 2 \cdot (G \cdot s^2) + 5 \cdot G\). We can use the notation \([P]\) to refer to \(P\) encoded in this way (ie. \(G \cdot P(s)\)). When doing the subtract-and-divide trick, you can prove that the two polynomials actually satisfy the relation by using elliptic curve pairings: check that \(e([P] - G \cdot a, G_2) = e([Q], [x] - G_2 \cdot z)\) as a proxy for checking that \(P(x) - a = Q(x) \cdot (x - z)\).

But other types of polynomial commitments have been coming out more recently too.
A new scheme called DARK ("Diophantine arguments of knowledge") uses "hidden order groups" such as class groups to implement another kind of polynomial commitment. Hidden order groups are unique because they allow you to compress arbitrarily large numbers into group elements, even numbers much larger than the size of the group element, in a way that can't be "spoofed"; constructions from VDFs to accumulators to range proofs to polynomial commitments can be built on top of this. Another option is to use bulletproofs, using regular elliptic curve groups at the cost of the proof taking much longer to verify. Because polynomial commitments are much simpler than full-on zero knowledge proof schemes, we can expect more such schemes to get created in the future.

Recap

To finish off, let's go over the scheme again. Given a program \(P\), you convert it into a circuit, and generate a set of equations that look like this:

\[ \left(Q_{L_i}\right) a_i+\left(Q_{R_i}\right) b_i+\left(Q_{O_i}\right) c_i+\left(Q_{M_i}\right) a_i b_i+Q_{C_i}=0 \]

You then convert this set of equations into a single polynomial equation:

\[ Q_L(x) a(x)+Q_R(x) b(x)+Q_O(x) c(x)+Q_M(x) a(x) b(x)+Q_C(x)=0 \]

You also generate from the circuit a list of copy constraints. From these copy constraints you generate the three polynomials representing the permuted wire indices: \(\sigma_a(x), \sigma_b(x), \sigma_c(x)\). To generate a proof, you compute the values of all the wires and convert them into three polynomials: \(a(x), b(x), c(x)\). You also compute six "coordinate pair accumulator" polynomials as part of the permutation-check argument. Finally you compute the cofactors \(H_i(x)\).

There is a set of equations between the polynomials that need to be checked; you can do this by making commitments to the polynomials, opening them at some random \(z\) (along with proofs that the openings are correct), and running the equations on these evaluations instead of the original polynomials.
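The opening proofs mentioned here rest on the subtract-and-divide trick from the polynomial commitments section. The sketch below checks that trick on the earlier example \(P(x) = x^3 + 2x^2 + 5\) with \(z = 6\), using plain integer synthetic division. No commitments or pairings are involved, and the helper name `divide_by_linear` is hypothetical.

```python
def divide_by_linear(coeffs, z):
    """Hypothetical helper: divide a polynomial by (x - z) via synthetic division.

    coeffs are listed from the highest degree down to the constant term.
    Returns (quotient_coeffs, remainder).
    """
    acc = []
    carry = 0
    for c in coeffs:
        carry = carry * z + c
        acc.append(carry)
    return acc[:-1], acc[-1]


P = [1, 2, 0, 5]   # x^3 + 2x^2 + 0x + 5
z = 6

# Correct claimed value a = 293: P(x) - a is exactly divisible by (x - z).
a = 293
quotient, remainder = divide_by_linear(P[:-1] + [P[-1] - a], z)
print(quotient, remainder)   # [1, 8, 48] 0

# Wrong claimed value a = 292: a nonzero remainder is left over.
bad_a = 292
_, bad_remainder = divide_by_linear(P[:-1] + [P[-1] - bad_a], z)
print(bad_remainder)         # 1
```

With the correct value the quotient is the polynomial \(x^2 + 8x + 48\); with the wrong value the division leaves a remainder, so no polynomial quotient exists.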
The proof itself is just a few commitments and openings and can be checked with a few equations. And that's all there is to it!

-

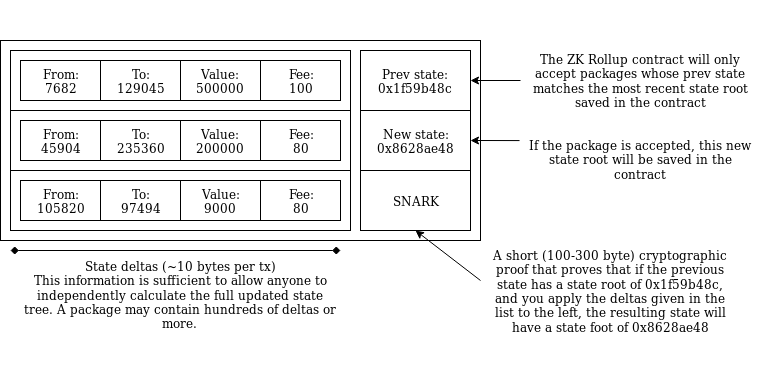

The Dawn of Hybrid Layer 2 Protocols

2019 Aug 28

Special thanks to the Plasma Group team for review and feedback

Current approaches to layer 2 scaling - basically, Plasma and state channels - are increasingly moving from theory to practice, but at the same time it is becoming easier to see the inherent challenges in treating these techniques as a fully fledged scaling solution for Ethereum. Ethereum was arguably successful in large part because of its very easy developer experience: you write a program, publish the program, and anyone can interact with it. Designing a state channel or Plasma application, on the other hand, relies on a lot of explicit reasoning about incentives and application-specific development complexity. State channels work well for specific use cases such as repeated payments between the same two parties and two-player games (as successfully implemented in Celer), but more generalized usage is proving challenging. Plasma, particularly Plasma Cash, can work well for payments, but generalization similarly incurs challenges: even implementing a decentralized exchange requires clients to store much more history data, and generalizing to Ethereum-style smart contracts on Plasma seems extremely difficult.

But at the same time, there is a resurgence of a forgotten category of "semi-layer-2" protocols - a category which promises less extreme gains in scaling, but with the benefit of much easier generalization and more favorable security models. A long-forgotten blog post from 2014 introduced the idea of "shadow chains", an architecture where block data is published on-chain, but blocks are not verified by default. Rather, blocks are tentatively accepted, and only finalized after some period of time (eg. 2 weeks).
During those 2 weeks, a tentatively accepted block can be challenged; only then is the block verified, and if the block proves to be invalid then the chain from that block on is reverted, and the original publisher's deposit is penalized. The contract does not keep track of the full state of the system; it only keeps track of the state root, and users themselves can calculate the state by processing the data submitted to the chain from start to head. A more recent proposal, ZK Rollup, does the same thing without challenge periods, by using ZK-SNARKs to verify blocks' validity.

[Figure: Anatomy of a ZK Rollup package that is published on-chain. Hundreds of "internal transactions" that affect the state (ie. account balances) of the ZK Rollup system are compressed into a package that contains ~10 bytes per internal transaction that specifies the state transitions, plus a ~100-300 byte SNARK proving that the transitions are all valid.]

In both cases, the main chain is used to verify data availability, but does not (directly) verify block validity or perform any significant computation, unless challenges are made. This technique is thus not a jaw-droppingly huge scalability gain, because the on-chain data overhead eventually presents a bottleneck, but it is nevertheless a very significant one. Data is cheaper than computation, and there are ways to compress transaction data very significantly, particularly because the great majority of data in a transaction is the signature and many signatures can be compressed into one through many forms of aggregation. ZK Rollup promises 500 tx/sec, a 30x gain over the Ethereum chain itself, by compressing each transaction to a mere ~10 bytes; signatures do not need to be included because their validity is verified by the zero-knowledge proof. With BLS aggregate signatures a similar throughput can be achieved in shadow chains (more recently called "optimistic rollup" to highlight its similarities to ZK Rollup).
The upcoming Istanbul hard fork will reduce the gas cost of data from 68 gas per byte to 16 gas per byte, increasing the throughput of these techniques by another 4x (that's over 2000 transactions per second).

So what is the benefit of data on-chain techniques such as ZK/optimistic rollup versus data off-chain techniques such as Plasma? First of all, there is no need for semi-trusted operators. In ZK Rollup, because validity is verified by cryptographic proofs there is literally no way for a package submitter to be malicious (depending on the setup, a malicious submitter may cause the system to halt for a few seconds, but this is the most harm that can be done). In optimistic rollup, a malicious submitter can publish a bad block, but the next submitter will immediately challenge that block before publishing their own. In both ZK and optimistic rollup, enough data is published on chain to allow anyone to compute the complete internal state, simply by processing all of the submitted deltas in order, and there is no "data withholding attack" that can take this property away. Hence, becoming an operator can be fully permissionless; all that is needed is a security deposit (eg. 10 ETH) for anti-spam purposes.

Second, optimistic rollup particularly is vastly easier to generalize; the state transition function in an optimistic rollup system can be literally anything that can be computed within the gas limit of a single block (including the Merkle branches providing the parts of the state needed to verify the transition). ZK Rollup is theoretically generalizable in the same way, though in practice making ZK SNARKs over general-purpose computation (such as EVM execution) is very difficult, at least for now. Third, optimistic rollup is much easier to build clients for, as there is less need for second-layer networking infrastructure; more can be done by just scanning the blockchain.

But where do these advantages come from?
The answer lies in a highly technical issue known as the data availability problem (see note, video). Basically, there are two ways to try to cheat in a layer-2 system. The first is to publish invalid data to the blockchain. The second is to not publish data at all (eg. in Plasma, publishing the root hash of a new Plasma block to the main chain but without revealing the contents of the block to anyone). Published-but-invalid data is very easy to deal with, because once the data is published on-chain there are multiple ways to figure out unambiguously whether or not it's valid, and an invalid submission is unambiguously invalid so the submitter can be heavily penalized. Unavailable data, on the other hand, is much harder to deal with, because even though unavailability can be detected if challenged, one cannot reliably determine whose fault the non-publication is, especially if data is withheld by default and revealed on-demand only when some verification mechanism tries to verify its availability. This is illustrated in the "Fisherman's dilemma", which shows how a challenge-response game cannot distinguish between malicious submitters and malicious challengers:

[Figure: Fisherman's dilemma. If you only start watching the given specific piece of data at time T3, you have no idea whether you are living in Case 1 or Case 2, and hence who is at fault.]

Plasma and channels both work around the fisherman's dilemma by pushing the problem to users: if you as a user decide that another user you are interacting with (a counterparty in a state channel, an operator in a Plasma chain) is not publishing data to you that they should be publishing, it's your responsibility to exit and move to a different counterparty/operator. The fact that you as a user have all of the previous data, and data about all of the transactions you signed, allows you to prove to the chain what assets you held inside the layer-2 protocol, and thus safely bring them out of the system.
You prove the existence of a (previously agreed) operation that gave the asset to you; no one else can prove the existence of an operation approved by you that sent the asset to someone else, so you get the asset.

The technique is very elegant. However, it relies on a key assumption: that every state object has a logical "owner", and the state of the object cannot be changed without the owner's consent. This works well for UTXO-based payments (but not account-based payments, where you can edit someone else's balance upward without their consent; this is why account-based Plasma is so hard), and it can even be made to work for a decentralized exchange, but this "ownership" property is far from universal. Some applications, eg. Uniswap, don't have a natural owner, and even in those applications that do, there are often multiple people that can legitimately make edits to the object. And there is no way to allow arbitrary third parties to exit an asset without introducing the possibility of denial-of-service (DoS) attacks, precisely because one cannot prove whether the publisher or submitter is at fault.

There are other issues peculiar to Plasma and channels individually. Channels do not allow off-chain transactions to users that are not already part of the channel (argument: suppose there existed a way to send $1 to an arbitrary new user from inside a channel. Then this technique could be used many times in parallel to send $1 to more users than there are funds in the system, already breaking its security guarantee). Plasma requires users to store large amounts of history data, which gets even bigger when different assets can be intertwined (eg. when an asset is transferred conditional on transfer of another asset, as happens in a decentralized exchange with a single-stage order book mechanism).

Because data-on-chain computation-off-chain layer 2 techniques don't have data availability issues, they have none of these weaknesses.
ZK and optimistic rollup take great care to put enough data on chain to allow users to calculate the full state of the layer 2 system, ensuring that if any participant disappears a new one can trivially take their place. The only issue that they have is verifying computation without doing the computation on-chain, which is a much easier problem. And the scalability gains are significant: ~10 bytes per transaction in ZK Rollup, and a similar level of scalability can be achieved in optimistic rollup by using BLS aggregation to aggregate signatures. This corresponds to a theoretical maximum of ~500 transactions per second today, and over 2000 post-Istanbul.

But what if you want more scalability? Then there is a large middle ground between data-on-chain layer 2 and data-off-chain layer 2 protocols, with many hybrid approaches that give you some of the benefits of both. To give a simple example, the history storage blowup in a decentralized exchange implemented on Plasma Cash can be prevented by publishing a mapping of which orders are matched with which orders (that's less than 4 bytes per order) on chain:

[Figure: Left: History data a Plasma Cash user needs to store if they own 1 coin. Middle: History data a Plasma Cash user needs to store if they own 1 coin that was exchanged with another coin using an atomic swap. Right: History data a Plasma Cash user needs to store if the order matching is published on chain.]

Even outside of the decentralized exchange context, the amount of history that users need to store in Plasma can be reduced by having the Plasma chain periodically publish some per-user data on-chain. One could also imagine a platform which works like Plasma in the case where some state does have a logical "owner" and works like ZK or optimistic rollup in the case where it does not.
Plasma developers are already starting to work on these kinds of optimizations.

There is thus a strong case to be made for developers of layer 2 scalability solutions to be more willing to publish per-user data on-chain at least some of the time: it greatly increases ease of development, generality and security and reduces per-user load (eg. no need for users to store history data). The efficiency losses of doing so are also overstated: even in a fully off-chain layer-2 architecture, users depositing, withdrawing and moving between different counterparties and providers is going to be an inevitable and frequent occurrence, and so there will be a significant amount of per-user on-chain data regardless. The hybrid route opens the door to a relatively fast deployment of fully generalized Ethereum-style smart contracts inside a quasi-layer-2 architecture.

See also:

- Introducing the OVM
- Blog post by Karl Floersch
- Related ideas by John Adler

The Dawn of Hybrid Layer 2 Protocols The Dawn of Hybrid Layer 2 Protocols2019 Aug 28 See all posts The Dawn of Hybrid Layer 2 Protocols Special thanks to the Plasma Group team for review and feedbackCurrent approaches to layer 2 scaling - basically, Plasma and state channels - are increasingly moving from theory to practice, but at the same time it is becoming easier to see the inherent challenges in treating these techniques as a fully fledged scaling solution for Ethereum. Ethereum was arguably successful in large part because of its very easy developer experience: you write a program, publish the program, and anyone can interact with it. Designing a state channel or Plasma application, on the other hand, relies on a lot of explicit reasoning about incentives and application-specific development complexity. State channels work well for specific use cases such as repeated payments between the same two parties and two-player games (as successfully implemented in Celer), but more generalized usage is proving challenging. Plasma, particularly Plasma Cash, can work well for payments, but generalization similarly incurs challenges: even implementing a decentralized exchange requires clients to store much more history data, and generalizing to Ethereum-style smart contracts on Plasma seems extremely difficult.But at the same time, there is a resurgence of a forgotten category of "semi-layer-2" protocols - a category which promises less extreme gains in scaling, but with the benefit of much easier generalization and more favorable security models. A long-forgotten blog post from 2014 introduced the idea of "shadow chains", an architecture where block data is published on-chain, but blocks are not verified by default. Rather, blocks are tentatively accepted, and only finalized after some period of time (eg. 2 weeks). 
During those 2 weeks, a tentatively accepted block can be challenged; only then is the block verified, and if the block proves to be invalid then the chain from that block on is reverted, and the original publisher's deposit is penalized. The contract does not keep track of the full state of the system; it only keeps track of the state root, and users themselves can calculate the state by processing the data submitted to the chain from start to head. A more recent proposal, ZK Rollup, does the same thing without challenge periods, by using ZK-SNARKs to verify blocks' validity. Anatomy of a ZK Rollup package that is published on-chain. Hundreds of "internal transactions" that affect the state (ie. account balances) of the ZK Rollup system are compressed into a package that contains ~10 bytes per internal transaction that specifies the state transitions, plus a ~100-300 byte SNARK proving that the transitions are all valid. In both cases, the main chain is used to verify data availability, but does not (directly) verify block validity or perform any significant computation, unless challenges are made. This technique is thus not a jaw-droppingly huge scalability gain, because the on-chain data overhead eventually presents a bottleneck, but it is nevertheless a very significant one. Data is cheaper than computation, and there are ways to compress transaction data very significantly, particularly because the great majority of data in a transaction is the signature and many signatures can be compressed into one through many forms of aggregation. ZK Rollup promises 500 tx/sec, a 30x gain over the Ethereum chain itself, by compressing each transaction to a mere ~10 bytes; signatures do not need to be included because their validity is verified by the zero-knowledge proof. With BLS aggregate signatures a similar throughput can be achieved in shadow chains (more recently called "optimistic rollup" to highlight its similarities to ZK Rollup). 
The upcoming Istanbul hard fork will reduce the gas cost of data from 68 per byte to 16 per byte, increasing the throughput of these techniques by another 4x (that's over 2000 transactions per second).So what is the benefit of data on-chain techniques such as ZK/optimistic rollup versus data off-chain techniques such as Plasma? First of all, there is no need for semi-trusted operators. In ZK Rollup, because validity is verified by cryptographic proofs there is literally no way for a package submitter to be malicious (depending on the setup, a malicious submitter may cause the system to halt for a few seconds, but this is the most harm that can be done). In optimistic rollup, a malicious submitter can publish a bad block, but the next submitter will immediately challenge that block before publishing their own. In both ZK and optimistic rollup, enough data is published on chain to allow anyone to compute the complete internal state, simply by processing all of the submitted deltas in order, and there is no "data withholding attack" that can take this property away. Hence, becoming an operator can be fully permissionless; all that is needed is a security deposit (eg. 10 ETH) for anti-spam purposes.Second, optimistic rollup particularly is vastly easier to generalize; the state transition function in an optimistic rollup system can be literally anything that can be computed within the gas limit of a single block (including the Merkle branches providing the parts of the state needed to verify the transition). ZK Rollup is theoretically generalizeable in the same way, though in practice making ZK SNARKs over general-purpose computation (such as EVM execution) is very difficult, at least for now. Third, optimistic rollup is much easier to build clients for, as there is less need for second-layer networking infrastructure; more can be done by just scanning the blockchain.But where do these advantages come from? 
The answer lies in a highly technical issue known as the data availability problem (see note, video). Basically, there are two ways to try to cheat in a layer-2 system. The first is to publish invalid data to the blockchain. The second is to not publish data at all (eg. in Plasma, publishing the root hash of a new Plasma block to the main chain but without revealing the contents of the block to anyone). Published-but-invalid data is very easy to deal with, because once the data is published on-chain there are multiple ways to figure out unambiguously whether or not it's valid, and an invalid submission is unambiguously invalid so the submitter can be heavily penalized. Unavailable data, on the other hand, is much harder to deal with, because even though unavailability can be detected if challenged, one cannot reliably determine whose fault the non-publication is, especially if data is withheld by default and revealed on-demand only when some verification mechanism tries to verify its availability. This is illustrated in the "Fisherman's dilemma", which shows how a challenge-response game cannot distinguish between malicious submitters and malicious challengers:

Fisherman's dilemma. If you only start watching the given specific piece of data at time T3, you have no idea whether you are living in Case 1 or Case 2, and hence who is at fault.

Plasma and channels both work around the fisherman's dilemma by pushing the problem to users: if you as a user decide that another user you are interacting with (a counterparty in a state channel, an operator in a Plasma chain) is not publishing data to you that they should be publishing, it's your responsibility to exit and move to a different counterparty/operator. The fact that you as a user have all of the previous data, and data about all of the transactions you signed, allows you to prove to the chain what assets you held inside the layer-2 protocol, and thus safely bring them out of the system.
You prove the existence of a (previously agreed) operation that gave the asset to you, no one else can prove the existence of an operation approved by you that sent the asset to someone else, so you get the asset.

The technique is very elegant. However, it relies on a key assumption: that every state object has a logical "owner", and the state of the object cannot be changed without the owner's consent. This works well for UTXO-based payments (but not account-based payments, where you can edit someone else's balance upward without their consent; this is why account-based Plasma is so hard), and it can even be made to work for a decentralized exchange, but this "ownership" property is far from universal. Some applications, eg. Uniswap, don't have a natural owner, and even in those applications that do, there are often multiple people that can legitimately make edits to the object. And there is no way to allow arbitrary third parties to exit an asset without introducing the possibility of denial-of-service (DoS) attacks, precisely because one cannot prove whether the publisher or submitter is at fault.

There are other issues peculiar to Plasma and channels individually. Channels do not allow off-chain transactions to users that are not already part of the channel (argument: suppose there existed a way to send $1 to an arbitrary new user from inside a channel. Then this technique could be used many times in parallel to send $1 to more users than there are funds in the system, already breaking its security guarantee). Plasma requires users to store large amounts of history data, which gets even bigger when different assets can be intertwined (eg. when an asset is transferred conditional on transfer of another asset, as happens in a decentralized exchange with a single-stage order book mechanism).

Because data-on-chain computation-off-chain layer 2 techniques don't have data availability issues, they have none of these weaknesses.
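To make the "no data availability issues" point concrete, here is a minimal sketch of how any observer could rebuild the full layer-2 state purely from data published on chain. Everything in it is an assumption for illustration: the transfer tuple format, the function names, and the use of a plain SHA-256 over sorted balances as a stand-in for the Merkle state root a real rollup contract would store. Real systems publish compressed deltas rather than readable tuples.

```python
import hashlib
from typing import Dict, Iterable, Tuple

# Hypothetical delta format: (sender, recipient, amount).
Transfer = Tuple[str, str, int]


def apply_transfer(balances: Dict[str, int], transfer: Transfer) -> None:
    """Apply one transfer in place, rejecting overdrafts and non-positive amounts."""
    sender, recipient, amount = transfer
    if amount <= 0 or balances.get(sender, 0) < amount:
        raise ValueError(f"invalid transfer: {transfer}")
    balances[sender] -= amount
    balances[recipient] = balances.get(recipient, 0) + amount


def replay(genesis: Dict[str, int],
           packages: Iterable[Iterable[Transfer]]) -> Dict[str, int]:
    """Recompute the state by processing every published package in order."""
    balances = dict(genesis)  # copy so the caller's genesis is untouched
    for package in packages:
        for transfer in package:
            apply_transfer(balances, transfer)
    return balances


def state_root(balances: Dict[str, int]) -> str:
    """Stand-in for a Merkle root: SHA-256 over the sorted account balances."""
    digest = hashlib.sha256()
    for account in sorted(balances):
        digest.update(f"{account}:{balances[account]};".encode())
    return digest.hexdigest()


if __name__ == "__main__":
    genesis = {"alice": 10, "bob": 5}
    published = [[("alice", "bob", 3)], [("bob", "carol", 4)]]
    state = replay(genesis, published)
    print(state, state_root(state))
```

Two observers who replay the same published packages arrive at the same balances and the same root, which is why a new operator can take over without needing anything from the previous one.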
ZK and optimistic rollup take great care to put enough data on chain to allow users to calculate the full state of the layer 2 system, ensuring that if any participant disappears a new one can trivially take their place. The only issue that they have is verifying computation without doing the computation on-chain, which is a much easier problem. And the scalability gains are significant: ~10 bytes per transaction in ZK Rollup, and a similar level of scalability can be achieved in optimistic rollup by using BLS aggregation to aggregate signatures. This corresponds to a theoretical maximum of ~500 transactions per second today, and over 2000 post-Istanbul.

But what if you want more scalability? Then there is a large middle ground between data-on-chain layer 2 and data-off-chain layer 2 protocols, with many hybrid approaches that give you some of the benefits of both. To give a simple example, the history storage blowup in a decentralized exchange implemented on Plasma Cash can be prevented by publishing a mapping of which orders are matched with which orders (that's less than 4 bytes per order) on chain:

Left: History data a Plasma Cash user needs to store if they own 1 coin. Middle: History data a Plasma Cash user needs to store if they own 1 coin that was exchanged with another coin using an atomic swap. Right: History data a Plasma Cash user needs to store if the order matching is published on chain.

Even outside of the decentralized exchange context, the amount of history that users need to store in Plasma can be reduced by having the Plasma chain periodically publish some per-user data on-chain. One could also imagine a platform which works like Plasma in the case where some state does have a logical "owner" and works like ZK or optimistic rollup in the case where it does not.
Plasma developers are already starting to work on these kinds of optimizations.

There is thus a strong case to be made for developers of layer 2 scalability solutions to be more willing to publish per-user data on-chain at least some of the time: it greatly increases ease of development, generality and security, and reduces per-user load (eg. no need for users to store history data). The efficiency losses of doing so are also overstated: even in a fully off-chain layer-2 architecture, users depositing, withdrawing and moving between different counterparties and providers is going to be an inevitable and frequent occurrence, and so there will be a significant amount of per-user on-chain data regardless. The hybrid route opens the door to a relatively fast deployment of fully generalized Ethereum-style smart contracts inside a quasi-layer-2 architecture.

See also:

- Introducing the OVM
- Blog post by Karl Floersch
- Related ideas by John Adler