
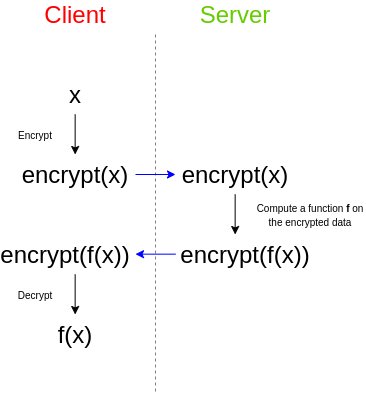

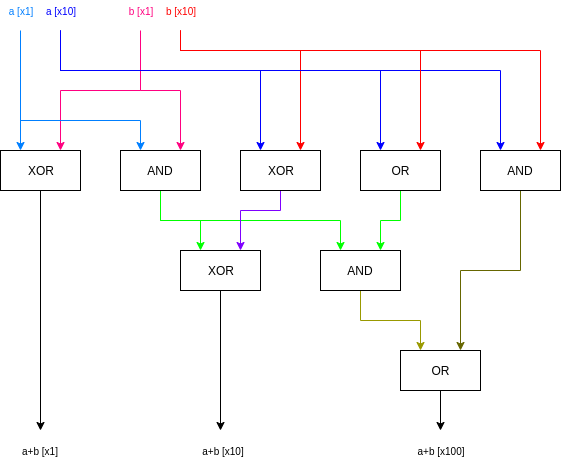

Exploring Fully Homomorphic Encryption

2020 Jul 20

Special thanks to Karl Floersch and Dankrad Feist for review

Fully homomorphic encryption has for a long time been considered one of the holy grails of cryptography. The promise of fully homomorphic encryption (FHE) is powerful: it is a type of encryption that allows a third party to perform computations on encrypted data, and get an encrypted result that they can hand back to whoever has the decryption key for the original data, without the third party being able to decrypt the data or the result themselves.

As a simple example, imagine that you have a set of emails, and you want to use a third party spam filter to check whether or not they are spam. The spam filter wants privacy for its algorithm: either the spam filter provider wants to keep their source code closed, or the spam filter depends on a very large database that they do not want to reveal publicly as that would make attacking easier, or both. However, you care about the privacy of your data, and don't want to upload your unencrypted emails to a third party. So here's how you do it:

Fully homomorphic encryption has many applications, including in the blockchain space. One key example is that it can be used to implement privacy-preserving light clients (the light client hands the server an encrypted index i, the server computes and returns data[0] * (i = 0) + data[1] * (i = 1) + ... + data[n] * (i = n), where data[i] is the i'th piece of data in a block or state along with its Merkle branch and (i = k) is an expression that returns 1 if i = k and otherwise 0; the light client gets the data it needs and the server learns nothing about what the light client asked).

It can also be used for:

- More efficient stealth address protocols, and more generally scalability solutions to privacy-preserving protocols that today require each user to personally scan the entire blockchain for incoming transactions
- Privacy-preserving data-sharing marketplaces that let users allow some specific computation to be performed on their data while keeping full control of their data for themselves
- An ingredient in more powerful cryptographic primitives, such as more efficient multi-party computation protocols and perhaps eventually obfuscation

And it turns out that fully homomorphic encryption is, conceptually, not that difficult to understand!

Partially, Somewhat, Fully homomorphic encryption

First, a note on definitions. There are different kinds of homomorphic encryption, some more powerful than others, and they are separated by what kinds of functions one can compute on the encrypted data.

Partially homomorphic encryption allows evaluating only a very limited set of operations on encrypted data: either just additions (so given encrypt(a) and encrypt(b) you can compute encrypt(a+b)), or just multiplications (given encrypt(a) and encrypt(b) you can compute encrypt(a*b)).

Somewhat homomorphic encryption allows computing additions as well as a limited number of multiplications (alternatively, polynomials up to a limited degree). That is, if you get encrypt(x1) ... encrypt(xn) (assuming these are "original" encryptions and not already the result of homomorphic computation), you can compute encrypt(p(x1 ... xn)), as long as p(x1 ... xn) is a polynomial with degree < D for some specific degree bound D (D is usually very low, think 5-15).
Fully homomorphic encryption allows unlimited additions and multiplications. Additions and multiplications let you replicate any binary circuit gates (AND(x, y) = x*y, OR(x, y) = x+y-x*y, XOR(x, y) = x+y-2*x*y or just x+y if you only care about even vs odd, NOT(x) = 1-x...), so this is sufficient to do arbitrary computation on encrypted data.

Partially homomorphic encryption is fairly easy; eg. RSA has a multiplicative homomorphism: \(enc(x) = x^e\), \(enc(y) = y^e\), so \(enc(x) * enc(y) = (xy)^e = enc(xy)\). Elliptic curves can offer similar properties with addition. Allowing both addition and multiplication is, it turns out, significantly harder.

A simple somewhat-HE algorithm

Here, we will go through a somewhat-homomorphic encryption algorithm (ie. one that supports a limited number of multiplications) that is surprisingly simple. A more complex version of this category of technique was used by Craig Gentry to create the first-ever fully homomorphic scheme in 2009. More recent efforts have switched to using different schemes based on vectors and matrices, but we will still go through this technique first.

We will describe all of these encryption schemes as secret-key schemes; that is, the same key is used to encrypt and decrypt. Any secret-key HE scheme can be turned into a public key scheme easily: a "public key" is typically just a set of many encryptions of zero, as well as an encryption of one (and possibly more powers of two). To encrypt a value, generate it by adding together the appropriate subset of the non-zero encryptions, and then adding a random subset of the encryptions of zero to "randomize" the ciphertext and make it infeasible to tell what it represents.

The secret key here is a large prime, \(p\) (think of \(p\) as having hundreds or even thousands of digits). The scheme can only encrypt 0 or 1, and "addition" becomes XOR, ie. 1 + 1 = 0.
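Since everything here works on single bits, with addition acting as XOR, the gate identities and the RSA homomorphism mentioned above can be checked directly. The snippet below is a minimal sketch; the tiny RSA parameters (`n = 61 * 53`, `e = 17`) are illustrative assumptions, far too small for real use.

```python
from itertools import product

# Logic gates written purely with additions and multiplications on bits.
def AND(x, y): return x * y
def OR(x, y): return x + y - x * y
def XOR(x, y): return (x + y) % 2      # "addition" of bits, ie. 1 + 1 = 0
def NOT(x): return 1 - x

for x, y in product((0, 1), repeat=2):
    assert AND(x, y) == (x & y)
    assert OR(x, y) == (x | y)
    assert XOR(x, y) == (x ^ y)
    assert x + y - 2 * x * y == (x ^ y)   # the exact (non-modular) XOR formula

# Toy RSA: multiplying ciphertexts multiplies the plaintexts (mod n).
n, e = 61 * 53, 17                        # assumed demo parameters

def enc(m):
    return pow(m, e, n)

assert enc(6) * enc(7) % n == enc(6 * 7)
```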
To encrypt a value \(m\) (which is either 0 or 1), generate a large random value \(R\) (this will typically be even larger than \(p\)) and a smaller random value \(r\) (typically much smaller than \(p\)), and output:

\[enc(m) = R * p + r * 2 + m\]

To decrypt a ciphertext \(ct\), compute:

\[dec(ct) = (ct\ mod\ p)\ mod\ 2\]

To add two ciphertexts \(ct_1\) and \(ct_2\), you simply, well, add them: \(ct_1 + ct_2\). And to multiply two ciphertexts, you once again... multiply them: \(ct_1 * ct_2\). We can prove the homomorphic property (that the sum of the encryptions is an encryption of the sum, and likewise for products) as follows.

Let:

\[ct_1 = R_1 * p + r_1 * 2 + m_1\]
\[ct_2 = R_2 * p + r_2 * 2 + m_2\]

We add:

\[ct_1 + ct_2 = R_1 * p + R_2 * p + r_1 * 2 + r_2 * 2 + m_1 + m_2\]

Which can be rewritten as:

\[(R_1 + R_2) * p + (r_1 + r_2) * 2 + (m_1 + m_2)\]

Which is of the exact same "form" as a ciphertext of \(m_1 + m_2\). If you decrypt it, the first \(mod\ p\) removes the first term, the second \(mod\ 2\) removes the second term, and what's left is \(m_1 + m_2\) (remember that if \(m_1 = 1\) and \(m_2 = 1\) then the 2 will get absorbed into the second term and you'll be left with zero). And so, voila, we have additive homomorphism!

Now let's check multiplication:

\[ct_1 * ct_2 = (R_1 * p + r_1 * 2 + m_1) * (R_2 * p + r_2 * 2 + m_2)\]

Or:

\[(R_1 * R_2 * p + R_1 * (r_2 * 2 + m_2) + R_2 * (r_1 * 2 + m_1)) * p + \]
\[(r_1 * r_2 * 2 + r_1 * m_2 + r_2 * m_1) * 2 + \]
\[(m_1 * m_2)\]

This was simply a matter of expanding the product above, and grouping together all the terms that contain \(p\), then all the remaining terms that contain \(2\), and finally the remaining term which is the product of the messages.
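The scheme and both homomorphic operations can be sketched in a few lines of Python. This is a toy under stated assumptions: `keygen` returns a random 256-bit odd number rather than a verified prime, and the sizes (`bits`, `noise_bits`) are made up for illustration, not taken from any real parameter set.

```python
import secrets

def keygen(bits=256):
    # Assumption: a large random odd number stands in for the large prime p.
    return secrets.randbits(bits) | (1 << (bits - 1)) | 1

def encrypt(p, m, noise_bits=8):
    R = secrets.randbits(p.bit_length() + 32)  # large multiplier, bigger than p
    r = secrets.randbits(noise_bits)           # small noise, much smaller than p
    return R * p + r * 2 + m

def decrypt(p, ct):
    return (ct % p) % 2

p = keygen()
for m1 in (0, 1):
    for m2 in (0, 1):
        c1, c2 = encrypt(p, m1), encrypt(p, m2)
        assert decrypt(p, c1 + c2) == (m1 ^ m2)   # addition acts as XOR
        assert decrypt(p, c1 * c2) == (m1 & m2)   # multiplication acts as AND

# Watch the noise term (ct mod p) grow as a ciphertext is squared repeatedly.
ct = encrypt(p, 1)
noise_sizes = []
for _ in range(4):
    noise_sizes.append((ct % p).bit_length())
    ct = ct * ct
```

Printing `noise_sizes` shows the bit-length of the noise roughly doubling with each squaring, while the ciphertext itself also doubles in length.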
If you decrypt, then once again the \(mod\ p\) removes the first group, the \(mod\ 2\) removes the second group, and only \(m_1 * m_2\) is left.

But there are two problems here: first, the size of the ciphertext itself grows (the length roughly doubles when you multiply), and second, the "noise" (also often called "error") in the smaller \(* 2\) term also gets quadratically bigger. Adding this error into the ciphertexts was necessary because the security of this scheme is based on the approximate GCD problem. Had we instead used the "exact GCD problem", breaking the system would be easy: if you just had a set of expressions of the form \(p * R_1 + m_1\), \(p * R_2 + m_2\)..., then you could use the Euclidean algorithm to efficiently compute the greatest common divisor \(p\). But if the ciphertexts are only approximate multiples of \(p\) with some "error", then extracting \(p\) quickly becomes impractical, and so the scheme can be secure.

Unfortunately, the error introduces the inherent limitation that if you multiply the ciphertexts by each other enough times, the error eventually grows big enough that it exceeds \(p\), and at that point the \(mod\ p\) and \(mod\ 2\) steps "interfere" with each other, making the data unextractable. This will be an inherent tradeoff in all of these homomorphic encryption schemes: extracting information from approximate equations "with errors" is much harder than extracting information from exact equations, but any error you add quickly increases as you do computations on encrypted data, bounding the amount of computation that you can do before the error becomes overwhelming. And this is why these schemes are only "somewhat" homomorphic.

Bootstrapping

There are two classes of solution to this problem. First, in many somewhat homomorphic encryption schemes, there are clever tricks to make multiplication only increase the error by a constant factor (eg. 1000x) instead of squaring it.
Increasing the error by 1000x still sounds like a lot, but keep in mind that if \(p\) (or its equivalent in other schemes) is a 300-digit number, that means that you can multiply numbers by each other 100 times, which is enough to compute a very wide class of computations. Second, there is Craig Gentry's technique of "bootstrapping".

Suppose that you have a ciphertext \(ct\) that is an encryption of some \(m\) under a key \(p\), that has a lot of error. The idea is that we "refresh" the ciphertext by turning it into a new ciphertext of \(m\) under another key \(p_2\), where this process "clears out" the old error (though it will introduce a fixed amount of new error). The trick is quite clever. The holder of \(p\) and \(p_2\) provides a "bootstrapping key" that consists of an encryption of the bits of \(p\) under the key \(p_2\), as well as the public key for \(p_2\). Whoever is doing computations on data encrypted under \(p\) would then take the bits of the ciphertext \(ct\), and individually encrypt these bits under \(p_2\). They would then homomorphically compute the decryption under \(p\) using these ciphertexts, and get out the single bit, which would be \(m\) encrypted under \(p_2\).

This is difficult to understand, so we can restate it as follows. The decryption procedure \(dec(ct, p)\) is itself a computation, and so it can itself be implemented as a circuit that takes as input the bits of \(ct\) and the bits of \(p\), and outputs the decrypted bit \(m \in \{0, 1\}\). If someone has a ciphertext \(ct\) encrypted under \(p\), a public key for \(p_2\), and the bits of \(p\) encrypted under \(p_2\), then they can compute \(dec(ct, p) = m\) "homomorphically", and get out \(m\) encrypted under \(p_2\). Notice that the decryption procedure itself washes away the old error; it just outputs 0 or 1.
The decryption procedure is itself a circuit, which contains additions or multiplications, so it will introduce new error, but this new error does not depend on the amount of error in the original encryption.

(Note that we can avoid having a distinct new key \(p_2\) (and if you want to bootstrap multiple times, also a \(p_3\), \(p_4\)...) by just setting \(p_2 = p\). However, this introduces a new assumption, usually called "circular security"; it becomes more difficult to formally prove security if you do this, though many cryptographers think it's fine and circular security poses no significant risk in practice.)

But.... there is a catch. In the scheme as described above (using circular security or not), the error blows up so quickly that even the decryption circuit of the scheme itself is too much for it. That is, the new \(m\) encrypted under \(p_2\) would already have so much error that it is unreadable. This is because each AND gate doubles the bit-length of the error, so a scheme using a \(d\)-bit modulus \(p\) can only handle fewer than \(log(d)\) multiplications (in series), but decryption requires computing \(mod\ p\) in a circuit made up of these binary logic gates, which requires... more than \(log(d)\) multiplications.

Craig Gentry came up with clever techniques to get around this problem, but they are arguably too complicated to explain; instead, we will skip straight to newer work from 2011 and 2013, that solves this problem in a different way.

Learning with errors

To move further, we will introduce a different type of somewhat-homomorphic encryption introduced by Brakerski and Vaikuntanathan in 2011, and show how to bootstrap it. Here, we will move away from keys and ciphertexts being integers, and instead have keys and ciphertexts be vectors. Given a key \(k = \{k_1, k_2 ... k_n\}\), to encrypt a message \(m\), construct a vector \(c = \{c_1, c_2 ... c_n\}\) such that the inner product (or "dot product") \(\langle c, k \rangle = c_1k_1 + c_2k_2 + ... + c_nk_n\), modulo some fixed number \(p\), equals \(m+2e\) where \(m\) is the message (which must be 0 or 1), and \(e\) is a small (much smaller than \(p\)) "error" term. A "public key" that allows encryption but not decryption can be constructed, as before, by making a set of encryptions of 0; an encryptor can randomly combine a subset of these equations and add 1 if the message they are encrypting is 1. To decrypt a ciphertext \(c\) knowing the key \(k\), you would compute \(\langle c, k \rangle\) modulo \(p\), and see if the result is odd or even (this is the same "mod p mod 2" trick we used earlier). Note that here the \(mod\ p\) is typically a "symmetric" mod, that is, it returns a number between \(-\frac{p}{2}\) and \(\frac{p}{2}\) (eg. 137 mod 10 = -3, 212 mod 10 = 2); this allows our error to be positive or negative. Additionally, \(p\) does not necessarily have to be prime, though it does need to be odd.

| | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Key | 3 | 14 | 15 | 92 | 65 |
| Ciphertext | 2 | 71 | 82 | 81 | 8 |

The key and the ciphertext are both vectors, in this example of five elements each.

In this example, we set the modulus \(p = 103\). The dot product is 3 * 2 + 14 * 71 + 15 * 82 + 92 * 81 + 65 * 8 = 10202, and \(10202 = 99 * 103 + 5\). 5 itself is of course \(2 * 2 + 1\), so the message is 1. Note that in practice, the first element of the key is often set to \(1\); this makes it easier to generate ciphertexts for a particular value (see if you can figure out why).

The security of the scheme is based on an assumption known as "learning with errors" (LWE) - or, in more jargony but also more understandable terms, the hardness of solving systems of equations with errors. A ciphertext can itself be viewed as an equation: \(k_1c_1 + .... + k_nc_n \approx 0\), where the key \(k_1 ... k_n\) is the unknowns, the ciphertext \(c_1 ... c_n\) is the coefficients, and the equality is only approximate because of both the message (0 or 1) and the error (\(2e\) for some relatively small \(e\)).
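The worked example can be reproduced with a short Python sketch. The helper names (`sym_mod`, `inner`, `encrypt`, `decrypt`) and the error bound `max_err` are assumptions made for illustration; `encrypt` also relies on the first key element being 1, which is one way to see why that convention makes generating ciphertexts easy.

```python
import random

def sym_mod(x, p):
    """Symmetric mod: maps x into a range centred on zero."""
    return (x + p // 2) % p - p // 2

def inner(a, b):
    return sum(x * y for x, y in zip(a, b))

def decrypt(c, k, p):
    # Inner product, symmetric mod p, then check odd vs even.
    return sym_mod(inner(c, k), p) % 2

def encrypt(m, k, p, max_err=2):
    # Assumes k[0] == 1: pick the other elements at random, then solve
    # for the first element so that <c, k> = m + 2e (mod p).
    e = random.randint(-max_err, max_err)
    rest = [random.randrange(p) for _ in k[1:]]
    first = (m + 2 * e - inner(rest, k[1:])) % p
    return [first] + rest

# The example from the text.
key = [3, 14, 15, 92, 65]
ct = [2, 71, 82, 81, 8]
assert inner(key, ct) == 10202
assert sym_mod(10202, 103) == 5
assert decrypt(ct, key, 103) == 1

# Symmetric mod examples from the text.
assert sym_mod(137, 10) == -3 and sym_mod(212, 10) == 2

# Fresh encryptions under a key whose first element is 1.
k1 = [1, 14, 15, 92, 65]
for m in (0, 1):
    assert decrypt(encrypt(m, k1, 103), k1, 103) == m
```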
The LWE assumption ensures that even if you have access to many of these ciphertexts, you cannot recover \(k\).

Note that in some descriptions of LWE, \(\langle c, k \rangle\) can equal any value, but this value must be provided as part of the ciphertext. This is mathematically equivalent to the \(\langle c, k \rangle = m+2e\) formulation, because you can just add this answer to the end of the ciphertext and add -1 to the end of the key, and get two vectors that when multiplied together just give \(m+2e\). We'll use the formulation that requires \(\langle c, k \rangle\) to be near-zero (ie. just \(m+2e\)) because it is simpler to work with.

Multiplying ciphertexts

It is easy to verify that the encryption is additive: if \(\langle c_1, k \rangle = 2e_1 + m_1\) and \(\langle c_2, k \rangle = 2e_2 + m_2\), then \(\langle c_1 + c_2, k \rangle = 2(e_1 + e_2) + m_1 + m_2\) (the addition here is modulo \(p\)). What is harder is multiplication: unlike with numbers, there is no natural way to multiply two length-n vectors into another length-n vector. The best that we can do is the outer product: a vector containing the products of each possible pair where the first element comes from the first vector and the second element comes from the second vector. That is, \(a \otimes b = [a_1b_1, a_1b_2, ..., a_1b_n, a_2b_1, ..., a_2b_n, ..., a_nb_n]\). We can "multiply ciphertexts" using the convenient mathematical identity \(\langle a \otimes b, c \otimes d \rangle = \langle a, c \rangle * \langle b, d \rangle\).

Given two ciphertexts \(c_1\) and \(c_2\), we compute the outer product \(c_1 \otimes c_2\). If both \(c_1\) and \(c_2\) were encrypted with \(k\), then \(\langle c_1, k \rangle = 2e_1 + m_1\) and \(\langle c_2, k \rangle = 2e_2 + m_2\). The outer product \(c_1 \otimes c_2\) can be viewed as an encryption of \(m_1 * m_2\) under \(k \otimes k\); we can see this by looking at what happens when we try to decrypt with \(k \otimes k\):

\[\langle c_1 \otimes c_2, k \otimes k \rangle\]
\[= \langle c_1, k \rangle * \langle c_2, k \rangle\]
\[ = (2e_1 + m_1) * (2e_2 + m_2)\]
\[ = 2(e_1m_2 + e_2m_1 + 2e_1e_2) + m_1m_2\]

So this outer-product approach works. But there is, as you may have already noticed, a catch: the size of the ciphertext, and the key, grows quadratically.

Relinearization

We solve this with a relinearization procedure.
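Before going into the details of relinearization, it may help to see the outer-product multiplication and its size blow-up concretely. The sketch below checks the identity on two hand-built ciphertexts; `make_ct` is a hypothetical helper that assumes the first key element is 1, and the chosen errors and vector entries are arbitrary.

```python
def sym_mod(x, p):
    """Symmetric mod: maps x into a range centred on zero."""
    return (x + p // 2) % p - p // 2

def inner(a, b):
    return sum(x * y for x, y in zip(a, b))

def outer(a, b):
    # Every pairwise product; the index into the first vector varies slowest.
    return [x * y for x in a for y in b]

def make_ct(m, e, rest, k, p):
    # Hypothetical helper: assumes k[0] == 1 and solves for the first
    # element so that <c, k> = m + 2e (mod p).
    first = (m + 2 * e - inner(rest, k[1:])) % p
    return [first] + rest

p = 103
k = [1, 14, 15, 92, 65]
c1 = make_ct(1, 2, [71, 82, 81, 8], k, p)    # encrypts 1 with error e = 2
c2 = make_ct(1, -1, [5, 40, 17, 99], k, p)   # encrypts 1 with error e = -1

big_ct, big_key = outer(c1, c2), outer(k, k)

# <a (x) b, c (x) d> = <a, c> * <b, d> holds exactly over the integers.
assert inner(big_ct, big_key) == inner(c1, k) * inner(c2, k)

# Decrypting the length-25 ciphertext under k (x) k gives m1 * m2.
product_bit = sym_mod(inner(big_ct, big_key), p) % 2
assert product_bit == 1
assert len(big_ct) == len(k) ** 2
```

Both the ciphertext and the key went from 5 elements to 25, which is the quadratic growth that relinearization is meant to undo.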
The holder of the private key \(k\) provides, as part of the public key, a "relinearization key", which you can think of as "noisy" encryptions of \(k \otimes k\) under \(k\). The idea is that we provide these encrypted pieces of \(k \otimes k\) to anyone performing the computations, allowing them to compute the equation \(\langle ct_1 \otimes ct_2, k \otimes k \rangle\) to "decrypt" the ciphertext, but only in such a way that the output comes back encrypted under \(k\).

It's important to understand what we mean here by "noisy encryptions". Normally, this encryption scheme only allows encrypting \(m \in \{0, 1\}\), and an "encryption of \(m\)" is a vector \(c\) such that \(\langle c, k \rangle = m+2e\) for some small error \(e\). Here, we're "encrypting" arbitrary \(m \in \{0, 1, 2 ... p-1\}\). Note that the error means that you can't fully recover \(m\) from \(c\); your answer will be off by some multiple of 2. However, it turns out that, for this specific use case, this is fine.

The relinearization key consists of a set of vectors which, when inner-producted (modulo \(p\)) with the key \(k\), give values of the form \(k_i * k_j * 2^d + 2e\) (mod \(p\)), one such vector for every possible triple \((i, j, d)\), where \(i\) and \(j\) are indices in the key and \(d\) is an exponent where \(2^d < p\) (note: if the key has length \(n\), there would be \(n^2 * log(p)\) values in the relinearization key; make sure you understand why before continuing).

Example assuming p = 15 and k has length 2. Formally, enc(x) here means "outputs x+2e if inner-producted with k".

Now, let us take a step back and look again at our goal. We have a ciphertext which, if decrypted with \(k \otimes k\), gives \(m_1 * m_2\). We want a ciphertext which, if decrypted with \(k\), gives \(m_1 * m_2\). We can do this with the relinearization key. Notice that the decryption equation \(\langle ct_1 \otimes ct_2, k \otimes k \rangle\) is just a big sum of terms of the form \((ct_{1,p} * ct_{2,q}) * k_p * k_q\), where \(ct_{1,p}\) is the \(p\)'th element of \(ct_1\) and \(ct_{2,q}\) is the \(q\)'th element of \(ct_2\).

And what do we have in our relinearization key?
A bunch of elements of the form \(2^d * k_p * k_q\), noisy-encrypted under \(k\), for every possible combination of \(p\) and \(q\)! Having all the powers of two in our relinearization key allows us to generate any \((ct_{1,p} * ct_{2,q}) * k_p * k_q\) by just adding up \(\le log(p)\) powers of two (eg. 13 = 8 + 4 + 1) together for each \((p, q)\) pair.

For example, if \(ct_1 = [1, 2]\) and \(ct_2 = [3, 4]\), then \(ct_1 \otimes ct_2 = [3, 4, 6, 8]\), and \(enc(\langle ct_1 \otimes ct_2, k \otimes k \rangle) = enc(3k_1k_1 + 4k_1k_2 + 6k_2k_1 + 8k_2k_2)\) could be computed via:

\[enc(k_1 * k_1) + enc(k_1 * k_1 * 2) + enc(k_1 * k_2 * 4) + \]
\[enc(k_2 * k_1 * 2) + enc(k_2 * k_1 * 4) + enc(k_2 * k_2 * 8) \]

Note that each noisy-encryption in the relinearization key has some even error \(2e\), and the equation \(\langle ct_1 \otimes ct_2, k \otimes k \rangle\) itself has some error: if \(\langle ct_1, k \rangle = 2e_1 + m_1\) and \(\langle ct_2, k \rangle = 2e_2 + m_2\), then \(\langle ct_1 \otimes ct_2, k \otimes k \rangle =\) \(\langle ct_1, k \rangle * \langle ct_2, k \rangle =\) \(2(2e_1e_2 + e_1m_2 + e_2m_1) + m_1m_2\). But this total error is still (relatively) small (\(2e_1e_2 + e_1m_2 + e_2m_1\) plus \(n^2 * log(p)\) fixed-size errors from the relinearization key), and the error is even, and so the result of this calculation still gives a value which, when inner-producted with \(k\), gives \(m_1 * m_2 + 2e'\) for some "combined error" \(e'\).

The broader technique we used here is a common trick in homomorphic encryption: provide pieces of the key encrypted under the key itself (or a different key if you are pedantic about avoiding circular security assumptions), such that someone computing on the data can compute the decryption equation, but only in such a way that the output itself is still encrypted. It was used in bootstrapping above, and it's used here; it's best to make sure you mentally understand what's going on in both cases.

This new ciphertext has considerably more error in it: the \(n^2 * log(p)\) different errors from the portions of the relinearization key that we used, plus the \(2(2e_1e_2 + e_1m_2 + e_2m_1)\) from the original outer-product ciphertext.
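The whole relinearization procedure can be sketched in Python as follows. Everything concrete here is an assumption for illustration: the modulus `P`, the key length `N`, the error range, and the convention that the first key element is 1 (used by `noisy_enc` to solve for the first ciphertext element). It is a toy, not a secure parameter choice.

```python
import random

P = 1_000_003            # odd modulus (assumed demo value)
N = 4                    # key length (assumed demo value)
BITS = P.bit_length()    # exponents d = 0 .. BITS-1 all satisfy 2**d < P

def sym_mod(x):
    return (x + P // 2) % P - P // 2

def inner(a, b):
    return sum(x * y for x, y in zip(a, b))

def outer(a, b):
    return [x * y for x in a for y in b]

def noisy_enc(x, k):
    """Vector c with <c, k> = x + 2e (mod P); relies on k[0] == 1."""
    e = random.randint(-1, 1)
    rest = [random.randrange(P) for _ in k[1:]]
    first = (x + 2 * e - inner(rest, k[1:])) % P
    return [first] + rest

def decrypt(c, k):
    return sym_mod(inner(c, k)) % 2

def make_relin_key(k):
    # One noisy encryption of k_i * k_j * 2**d for every triple (i, j, d).
    return {(i, j, d): noisy_enc(k[i] * k[j] * 2**d % P, k)
            for i in range(N) for j in range(N) for d in range(BITS)}

def relinearize(big_ct, rk):
    """Turn a length N*N ciphertext under k (x) k into a length N one under k."""
    out = [0] * N
    for idx, coeff in enumerate(big_ct):
        i, j = divmod(idx, N)          # position idx corresponds to k_i * k_j
        coeff %= P
        for d in range(BITS):          # binary decomposition of the coefficient
            if (coeff >> d) & 1:
                out = [(a + b) % P for a, b in zip(out, rk[(i, j, d)])]
    return out

k = [1] + [random.randrange(P) for _ in range(N - 1)]
rk = make_relin_key(k)
for m1 in (0, 1):
    for m2 in (0, 1):
        c1, c2 = noisy_enc(m1, k), noisy_enc(m2, k)
        product = relinearize(outer(c1, c2), rk)
        assert len(product) == N                  # back to the original size
        assert decrypt(product, k) == m1 * m2     # still decrypts under k
```

With these sizes, at most \(N^2 * BITS = 320\) relinearization-key entries are added together, each contributing an even error of magnitude at most 2, so the combined error stays far below \(P/2\) and decryption is always correct.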
Hence, the new ciphertext still does have quadratically larger error than the original ciphertexts, and so we still haven't solved the problem that the error blows up too quickly. To solve this, we move on to another trick...

Modulus switching

Here, we need to understand an important algebraic fact. A ciphertext is a vector \(ct\), such that \(\langle ct, k \rangle = m+2e\), where \(m \in \{0, 1\}\). But we can also look at the ciphertext from a different "perspective": consider \(\frac{ct}{2}\) (modulo \(p\)). \(\langle \frac{ct}{2}, k \rangle = \frac{m}{2} + e\), where \(\frac{m}{2} \in \{0, \frac{p+1}{2}\}\). Note that because (modulo \(p\)) \((\frac{p+1}{2})*2 = p+1 = 1\), division by 2 (modulo \(p\)) maps \(1\) to \(\frac{p+1}{2}\); this is a very convenient fact for us.

The scheme in this section uses both modular division (ie. multiplying by the modular multiplicative inverse) and regular "rounded down" integer division; make sure you understand how both work and how they are different from each other.

That is, the operation of dividing by 2 (modulo \(p\)) converts small even numbers into small numbers, and it converts 1 into \(\frac{p}{2}\) (rounded up). So if we look at \(\frac{ct}{2}\) (modulo \(p\)) instead of \(ct\), decryption involves computing \(\langle \frac{ct}{2}, k \rangle\) and seeing if it's closer to \(0\) or \(\frac{p}{2}\). This "perspective" is much more robust to certain kinds of errors, where you know the error is small but can't guarantee that it's a multiple of 2.

Now, here is something we can do to a ciphertext.

1. Start: \(\langle ct, k \rangle = \{0\ or\ 1\} + 2e\ (mod\ p)\)
2. Divide \(ct\) by 2 (modulo \(p\)): \(\langle ct', k \rangle = \{0\ or\ \frac{p}{2}\} + e\ (mod\ p)\)
3. Multiply \(ct'\) by \(\frac{q}{p}\) using regular rounded-down integer division: \(\langle ct'', k \rangle = \{0\ or\ \frac{q}{2}\} + e' + e_2\ (mod\ q)\)
4. Multiply \(ct''\) by 2 (modulo \(q\)): \(\langle ct''', k \rangle = \{0\ or\ 1\} + 2e' + 2e_2\ (mod\ q)\)

Step 3 is the crucial one: it converts a ciphertext under modulus \(p\) into a ciphertext under modulus \(q\). The process just involves "scaling down" each element of \(ct'\) by multiplying by \(\frac{q}{p}\) and rounding down, eg. \(floor(56 * \frac{15}{103}) = floor(8.15533..) = 8\).

The idea is this: \(\langle ct', k \rangle = m*\frac{p}{2} + e\ (mod\ p)\) means that \(\langle ct', k \rangle = p(z + \frac{m}{2}) + e\) for some integer \(z\). Scaling by \(\frac{q}{p}\) turns this into \(q(z + \frac{m}{2}) + e*\frac{q}{p}\), and the \(q * z\) term vanishes modulo \(q\). Therefore, \(\langle ct'', k \rangle = m*\frac{q}{2} + e' + e_2\ (mod\ q)\), where \(e' = e * \frac{q}{p}\), and \(e_2\) is a small error from rounding.

What have we accomplished?
We turned a ciphertext with modulus \(p\) and error \(2e\) into a ciphertext with modulus \(q\) and error \(2(floor(e*\frac{q}{p}) + e_2)\), where the new error is smaller than the original error.

Let's go through the above with an example. Suppose:

- \(ct\) is just one value, \([5612]\)
- \(k = [9]\)
- \(p = 9999\) and \(q = 113\)

\(\langle ct, k \rangle = 5612 * 9 = 50508 = 9999 * 5 + 2 * 256 + 1\), so \(ct\) represents the bit 1, but the error is fairly large (\(e = 256\)).

Step 2: \(ct' = \frac{ct}{2} = 2806\) (remember this is modular division; if \(ct\) were instead \(5613\), then we would have \(\frac{ct}{2} = 7806\)).
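The example can be traced end to end in a few lines of Python. This is only a sketch of the four steps listed above, using the values given in the text (\(ct = [5612]\), \(k = [9]\), \(p = 9999\), \(q = 113\)); the function names are made up for illustration, and `pow(2, -1, p)` needs Python 3.8 or later.

```python
def sym_mod(x, m):
    """Symmetric mod: maps x into a range centred on zero."""
    return (x + m // 2) % m - m // 2

def inner(a, b):
    return sum(x * y for x, y in zip(a, b))

def noise(ct, k, m):
    # The value m_bit + 2e that decryption sees before taking mod 2.
    return sym_mod(inner(ct, k), m)

def mod_switch(ct, p, q):
    inv2 = pow(2, -1, p)                    # modular inverse of 2 (mod p)
    ct1 = [(c * inv2) % p for c in ct]      # step 2: divide by 2 modulo p
    ct2 = [(c * q) // p for c in ct1]       # step 3: scale by q/p, rounding down
    return [(c * 2) % q for c in ct2]       # step 4: multiply by 2 modulo q

ct, k, p, q = [5612], [9], 9999, 113

# Before switching: bit 1 with error e = 256 under modulus p.
assert noise(ct, k, p) == 2 * 256 + 1

# Modular division by 2, as in step 2 of the example.
assert (5612 * pow(2, -1, p)) % p == 2806
assert (5613 * pow(2, -1, p)) % p == 7806

new_ct = mod_switch(ct, p, q)
assert new_ct == [62]

# After switching: still bit 1, but the error has shrunk from 256 to -4.
assert noise(new_ct, k, q) == 2 * (-4) + 1
assert noise(new_ct, k, q) % 2 == 1
```

The rounding error stays small here because the key element (9) is small, which is why small key values are assumed for this step.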

Exploring Fully Homomorphic Encryption Exploring Fully Homomorphic Encryption2020 Jul 20 See all posts Exploring Fully Homomorphic Encryption Special thanks to Karl Floersch and Dankrad Feist for reviewFully homomorphic encryption has for a long time been considered one of the holy grails of cryptography. The promise of fully homomorphic encryption (FHE) is powerful: it is a type of encryption that allows a third party to perform computations on encrypted data, and get an encrypted result that they can hand back to whoever has the decryption key for the original data, without the third party being able to decrypt the data or the result themselves. As a simple example, imagine that you have a set of emails, and you want to use a third party spam filter to check whether or not they are spam. The spam filter has a desire for privacy of their algorithm: either the spam filter provider wants to keep their source code closed, or the spam filter depends on a very large database that they do not want to reveal publicly as that would make attacking easier, or both. However, you care about the privacy of your data, and don't want to upload your unencrypted emails to a third party. So here's how you do it: Fully homomorphic encryption has many applications, including in the blockchain space. One key example is that can be used to implement privacy-preserving light clients (the light client hands the server an encrypted index i, the server computes and returns data[0] * (i = 0) + data[1] * (i = 1) + ... 
+ data[n] * (i = n), where data[i] is the i'th piece of data in a block or state along with its Merkle branch and (i = k) is an expression that returns 1 if i = k and otherwise 0; the light client gets the data it needs and the server learns nothing about what the light client asked).It can also be used for:More efficient stealth address protocols, and more generally scalability solutions to privacy-preserving protocols that today require each user to personally scan the entire blockchain for incoming transactions Privacy-preserving data-sharing marketplaces that let users allow some specific computation to be performed on their data while keeping full control of their data for themselves An ingredient in more powerful cryptographic primitives, such as more efficient multi-party computation protocols and perhaps eventually obfuscation And it turns out that fully homomorphic encryption is, conceptually, not that difficult to understand!Partially, Somewhat, Fully homomorphic encryptionFirst, a note on definitions. There are different kinds of homomorphic encryption, some more powerful than others, and they are separated by what kinds of functions one can compute on the encrypted data.Partially homomorphic encryption allows evaluating only a very limited set of operations on encrypted data: either just additions (so given encrypt(a) and encrypt(b) you can compute encrypt(a+b)), or just multiplications (given encrypt(a) and encrypt(b) you can compute encrypt(a*b)). Somewhat homomorphic encryption allows computing additions as well as a limited number of multiplications (alternatively, polynomials up to a limited degree). That is, if you get encrypt(x1) ... encrypt(xn) (assuming these are "original" encryptions and not already the result of homomorphic computation), you can compute encrypt(p(x1 ... xn)), as long as p(x1 ... xn) is a polynomial with degree < D for some specific degree bound D (D is usually very low, think 5-15). 
Fully homomorphic encryption allows unlimited additions and multiplications. Additions and multiplications let you replicate any binary circuit gates (AND(x, y) = x*y, OR(x, y) = x+y-x*y, XOR(x, y) = x+y-2*x*y or just x+y if you only care about even vs odd, NOT(x) = 1-x...), so this is sufficient to do arbitrary computation on encrypted data. Partially homomorphic encryption is fairly easy; eg. RSA has a multiplicative homomorphism: \(enc(x) = x^e\), \(enc(y) = y^e\), so \(enc(x) * enc(y) = (xy)^e = enc(xy)\). Elliptic curves can offer similar properties with addition. Allowing both addition and multiplication is, it turns out, significantly harder.A simple somewhat-HE algorithmHere, we will go through a somewhat-homomorphic encryption algorithm (ie. one that supports a limited number of multiplications) that is surprisingly simple. A more complex version of this category of technique was used by Craig Gentry to create the first-ever fully homomorphic scheme in 2009. More recent efforts have switched to using different schemes based on vectors and matrices, but we will still go through this technique first.We will describe all of these encryption schemes as secret-key schemes; that is, the same key is used to encrypt and decrypt. Any secret-key HE scheme can be turned into a public key scheme easily: a "public key" is typically just a set of many encryptions of zero, as well as an encryption of one (and possibly more powers of two). To encrypt a value, generate it by adding together the appropriate subset of the non-zero encryptions, and then adding a random subset of the encryptions of zero to "randomize" the ciphertext and make it infeasible to tell what it represents.The secret key here is a large prime, \(p\) (think of \(p\) as having hundreds or even thousands of digits). The scheme can only encrypt 0 or 1, and "addition" becomes XOR, ie. 1 + 1 = 0. 
To encrypt a value \(m\) (which is either 0 or 1), generate a large random value \(R\) (this will typically be even larger than \(p\)) and a smaller random value \(r\) (typically much smaller than \(p\)), and output:\[enc(m) = R * p + r * 2 + m\]To decrypt a ciphertext \(ct\), compute:\[dec(ct) = (ct\ mod\ p)\ mod\ 2\]To add two ciphertexts \(ct_1\) and \(ct_2\), you simply, well, add them: \(ct_1 + ct_2\). And to multiply two ciphertexts, you once again... multiply them: \(ct_1 * ct_2\). We can prove the homomorphic property (that the sum of the encryptions is an encryption of the sum, and likewise for products) as follows.Let:\[ct_1 = R_1 * p + r_1 * 2 + m_1\] \[ct_2 = R_2 * p + r_2 * 2 + m_2\]We add:\[ct_1 + ct_2 = R_1 * p + R_2 * p + r_1 * 2 + r_2 * 2 + m_1 + m_2\]Which can be rewritten as:\[(R_1 + R_2) * p + (r_1 + r_2) * 2 + (m_1 + m_2)\]Which is of the exact same "form" as a ciphertext of \(m_1 + m_2\). If you decrypt it, the first \(mod\ p\) removes the first term, the second \(mod\ 2\) removes the second term, and what's left is \(m_1 + m_2\) (remember that if \(m_1 = 1\) and \(m_2 = 1\) then the 2 will get absorbed into the second term and you'll be left with zero). And so, voila, we have additive homomorphism!Now let's check multiplication:\[ct_1 * ct_2 = (R_1 * p + r_1 * 2 + m_1) * (R_2 * p + r_2 * 2 + m_2)\]Or:\[(R_1 * R_2 * p + r_1 * 2 + m_1 + r_2 * 2 + m_2) * p + \] \[(r_1 * r_2 * 2 + r_1 * m_2 + r_2 * m_1) * 2 + \] \[(m_1 * m_2)\]This was simply a matter of expanding the product above, and grouping together all the terms that contain \(p\), then all the remaining terms that contain \(2\), and finally the remaining term which is the product of the messages. 
If you decrypt, then once again the \(mod\ p\) removes the first group, the \(mod\ 2\) removes the second group, and only \(m_1 * m_2\) is left.But there are two problems here: first, the size of the ciphertext itself grows (the length roughly doubles when you multiply), and second, the "noise" (also often called "error") in the smaller \(\* 2\) term also gets quadratically bigger. Adding this error into the ciphertexts was necessary because the security of this scheme is based on the approximate GCD problem: Had we instead used the "exact GCD problem", breaking the system would be easy: if you just had a set of expressions of the form \(p * R_1 + m_1\), \(p * R_2 + m_2\)..., then you could use the Euclidean algorithm to efficiently compute the greatest common divisor \(p\). But if the ciphertexts are only approximate multiples of \(p\) with some "error", then extracting \(p\) quickly becomes impractical, and so the scheme can be secure.Unfortunately, the error introduces the inherent limitation that if you multiply the ciphertexts by each other enough times, the error eventually grows big enough that it exceeds \(p\), and at that point the \(mod\ p\) and \(mod\ 2\) steps "interfere" with each other, making the data unextractable. This will be an inherent tradeoff in all of these homomorphic encryption schemes: extracting information from approximate equations "with errors" is much harder than extracting information from exact equations, but any error you add quickly increases as you do computations on encrypted data, bounding the amount of computation that you can do before the error becomes overwhelming. And this is why these schemes are only "somewhat" homomorphic.BootstrappingThere are two classes of solution to this problem. First, in many somewhat homomorphic encryption schemes, there are clever tricks to make multiplication only increase the error by a constant factor (eg. 1000x) instead of squaring it. 
Increasing the error by 1000x still sounds like a lot, but keep in mind that if \(p\) (or its equivalent in other schemes) is a 300-digit number, that means that you can multiply numbers by each other 100 times, which is enough to compute a very wide class of computations. Second, there is Craig Gentry's technique of "bootstrapping".

Suppose that you have a ciphertext \(ct\) that is an encryption of some \(m\) under a key \(p\), that has a lot of error. The idea is that we "refresh" the ciphertext by turning it into a new ciphertext of \(m\) under another key \(p_2\), where this process "clears out" the old error (though it will introduce a fixed amount of new error). The trick is quite clever. The holder of \(p\) and \(p_2\) provides a "bootstrapping key" that consists of an encryption of the bits of \(p\) under the key \(p_2\), as well as the public key for \(p_2\). Whoever is doing computations on data encrypted under \(p\) would then take the bits of the ciphertext \(ct\), and individually encrypt these bits under \(p_2\). They would then homomorphically compute the decryption under \(p\) using these ciphertexts, and get out the single bit, which would be \(m\) encrypted under \(p_2\).

This is difficult to understand, so we can restate it as follows. The decryption procedure \(dec(ct, p)\) is itself a computation, and so it can itself be implemented as a circuit that takes as input the bits of \(ct\) and the bits of \(p\), and outputs the decrypted bit \(m \in \{0, 1\}\). If someone has a ciphertext \(ct\) encrypted under \(p\), a public key for \(p_2\), and the bits of \(p\) encrypted under \(p_2\), then they can compute \(dec(ct, p) = m\) "homomorphically", and get out \(m\) encrypted under \(p_2\). Notice that the decryption procedure itself washes away the old error; it just outputs 0 or 1.
The decryption procedure is itself a circuit, which contains additions or multiplications, so it will introduce new error, but this new error does not depend on the amount of error in the original encryption.

(Note that we can avoid having a distinct new key \(p_2\) (and if you want to bootstrap multiple times, also a \(p_3\), \(p_4\)...) by just setting \(p_2 = p\). However, this introduces a new assumption, usually called "circular security"; it becomes more difficult to formally prove security if you do this, though many cryptographers think it's fine and circular security poses no significant risk in practice.)

But... there is a catch. In the scheme as described above (using circular security or not), the error blows up so quickly that even the decryption circuit of the scheme itself is too much for it. That is, the new \(m\) encrypted under \(p_2\) would already have so much error that it is unreadable. This is because each AND gate doubles the bit-length of the error, so a scheme using a \(d\)-bit modulus \(p\) can only handle less than \(log(d)\) multiplications (in series), but decryption requires computing \(mod\ p\) in a circuit made up of these binary logic gates, which requires... more than \(log(d)\) multiplications.

Craig Gentry came up with clever techniques to get around this problem, but they are arguably too complicated to explain; instead, we will skip straight to newer work from 2011 and 2013, which solves this problem in a different way.

Learning with errors

To move further, we will introduce a different type of somewhat-homomorphic encryption introduced by Brakerski and Vaikuntanathan in 2011, and show how to bootstrap it. Here, we will move away from keys and ciphertexts being integers, and instead have keys and ciphertexts be vectors. Given a key \(k = (k_1, k_2, \ldots, k_n)\), to encrypt a message \(m\), construct a vector \(c = (c_1, c_2, \ldots, c_n)\) such that the inner product (or "dot product") \(\langle c, k \rangle = c_1k_1 + c_2k_2 + ...
+ c_nk_n\), modulo some fixed number \(p\), equals \(m+2e\) where \(m\) is the message (which must be 0 or 1), and \(e\) is a small (much smaller than \(p\)) "error" term. A "public key" that allows encryption but not decryption can be constructed, as before, by making a set of encryptions of 0; an encryptor can randomly combine a subset of these equations and add 1 if the message they are encrypting is 1. To decrypt a ciphertext \(c\) knowing the key \(k\), you would compute \(\langle c, k \rangle\) modulo \(p\), and see if the result is odd or even (this is the same "mod p mod 2" trick we used earlier). Note that here the \(mod\ p\) is typically a "symmetric" mod, that is, it returns a number between \(-\frac{p}{2}\) and \(\frac{p}{2}\) (eg. 137 mod 10 = -3, 212 mod 10 = 2); this allows our error to be positive or negative. Additionally, \(p\) does not necessarily have to be prime, though it does need to be odd.

Key: 3, 14, 15, 92, 65
Ciphertext: 2, 71, 82, 81, 8

The key and the ciphertext are both vectors, in this example of five elements each.

In this example, we set the modulus \(p = 103\). The dot product is 3 * 2 + 14 * 71 + 15 * 82 + 92 * 81 + 65 * 8 = 10202, and \(10202 = 99 * 103 + 5\). 5 itself is of course \(2 * 2 + 1\), so the message is 1. Note that in practice, the first element of the key is often set to \(1\); this makes it easier to generate ciphertexts for a particular value (see if you can figure out why).

The security of the scheme is based on an assumption known as "learning with errors" (LWE) - or, in more jargony but also more understandable terms, the hardness of solving systems of equations with errors. A ciphertext can itself be viewed as an equation: \(k_1c_1 + .... + k_nc_n \approx 0\), where the key \(k_1 ... k_n\) is the unknowns, the ciphertext \(c_1 ... c_n\) is the coefficients, and the equality is only approximate because of both the message (0 or 1) and the error (\(2e\) for some relatively small \(e\)).
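The worked example with \(p = 103\) can be checked directly. The sketch below is only an illustration; the helper names `sym_mod` and `lwe_decrypt` are assumptions introduced here, not names from any library.

```python
def sym_mod(x, p):
    # "Symmetric" mod: result lies between -p/2 and p/2 instead of 0 and p-1.
    r = x % p
    return r - p if r > p // 2 else r

def lwe_decrypt(key, ct, p):
    # Inner product of ciphertext and key, reduced symmetrically mod p, then mod 2.
    dot = sum(k * c for k, c in zip(key, ct))
    return sym_mod(dot, p) % 2

key = [3, 14, 15, 92, 65]
ct = [2, 71, 82, 81, 8]
p = 103

dot = sum(k * c for k, c in zip(key, ct))
print(dot)                       # 10202
print(sym_mod(dot, p))           # 5, i.e. 2*2 + 1
print(lwe_decrypt(key, ct, p))   # 1
```

The same `sym_mod` reproduces the two small examples from the text: `sym_mod(137, 10)` is -3 and `sym_mod(212, 10)` is 2.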
The LWE assumption ensures that even if you have access to many of these ciphertexts, you cannot recover \(k\).

Note that in some descriptions of LWE, \(\langle c, k \rangle\) can equal any value, but this value must be provided as part of the ciphertext. This is mathematically equivalent to the \(\langle c, k \rangle = m+2e\) formulation, because you can just add this answer to the end of the ciphertext and add -1 to the end of the key, and get two vectors that when multiplied together just give \(m+2e\). We'll use the formulation that requires \(\langle c, k \rangle\) to be near-zero (ie. just \(m+2e\)) because it is simpler to work with.

Multiplying ciphertexts

It is easy to verify that the encryption is additive: if \(\langle ct_1, k \rangle = 2e_1 + m_1\) and \(\langle ct_2, k \rangle = 2e_2 + m_2\), then \(\langle ct_1 + ct_2, k \rangle = 2(e_1 + e_2) + m_1 + m_2\) (the addition here is modulo \(p\)). What is harder is multiplication: unlike with numbers, there is no natural way to multiply two length-n vectors into another length-n vector. The best that we can do is the outer product: a vector containing the products of each possible pair where the first element comes from the first vector and the second element comes from the second vector. That is, \(a \otimes b = (a_1b_1, a_2b_1, \ldots, a_nb_1, a_1b_2, \ldots, a_nb_2, \ldots, a_nb_n)\). We can "multiply ciphertexts" using the convenient mathematical identity \(\langle a \otimes b, c \otimes d \rangle = \langle a, c \rangle * \langle b, d \rangle\).

Given two ciphertexts \(c_1\) and \(c_2\), we compute the outer product \(c_1 \otimes c_2\). If both \(c_1\) and \(c_2\) were encrypted with \(k\), then \(\langle c_1, k \rangle = 2e_1 + m_1\) and \(\langle c_2, k \rangle = 2e_2 + m_2\). The outer product \(c_1 \otimes c_2\) can be viewed as an encryption of \(m_1 * m_2\) under \(k \otimes k\); we can see this by looking at what happens when we try to decrypt with \(k \otimes k\):

\[\langle c_1 \otimes c_2, k \otimes k \rangle\]
\[= \langle c_1, k \rangle * \langle c_2, k \rangle\]
\[ = (2e_1 + m_1) * (2e_2 + m_2)\]
\[ = 2(e_1m_2 + e_2m_1 + 2e_1e_2) + m_1m_2\]

So this outer-product approach works. But there is, as you may have already noticed, a catch: the size of the ciphertext, and the key, grows quadratically.

Relinearization

We solve this with a relinearization procedure.
The holder of the private key \(k\) provides, as part of the public key, a "relinearization key", which you can think of as "noisy" encryptions of \(k \otimes k\) under \(k\). The idea is that we provide these encrypted pieces of \(k \otimes k\) to anyone performing the computations, allowing them to compute the equation \(\langle ct_1 \otimes ct_2, k \otimes k \rangle\) to "decrypt" the ciphertext, but only in such a way that the output comes back encrypted under \(k\).

It's important to understand what we mean here by "noisy encryptions". Normally, this encryption scheme only allows encrypting \(m \in \{0, 1\}\), and an "encryption of \(m\)" is a vector \(c\) such that \(\langle c, k \rangle = m+2e\) for some small error \(e\). Here, we're "encrypting" arbitrary \(m \in \{0, 1, 2, \ldots, p-1\}\). Note that the error means that you can't fully recover \(m\) from \(c\); your answer will be off by some multiple of 2. However, it turns out that, for this specific use case, this is fine.

The relinearization key consists of a set of vectors which, when inner-producted (modulo \(p\)) with the key \(k\), give values of the form \(k_i * k_j * 2^d + 2e\) (mod \(p\)), one such vector for every possible triple \((i, j, d)\), where \(i\) and \(j\) are indices in the key and \(d\) is an exponent where \(2^d < p\) (note: if the key has length \(n\), there would be \(n^2 * log(p)\) values in the relinearization key; make sure you understand why before continuing).

Example assuming p = 15 and k has length 2. Formally, enc(x) here means "outputs x+2e if inner-producted with k".

Now, let us take a step back and look again at our goal. We have a ciphertext which, if decrypted with \(k \otimes k\), gives \(m_1 * m_2\). We want a ciphertext which, if decrypted with \(k\), gives \(m_1 * m_2\). We can do this with the relinearization key. Notice that the decryption equation \(\langle ct_1 \otimes ct_2, k \otimes k \rangle\) is just a big sum of terms of the form \((ct_{1,p} * ct_{2,q}) * k_p * k_q\).

And what do we have in our relinearization key?
A bunch of elements of the form \(2^d * k_p * k_q\), noisy-encrypted under \(k\), for every possible combination of \(p\) and \(q\)! Having all the powers of two in our relinearization key allows us to generate any \((ct_{1,p} * ct_{2,q}) * k_p * k_q\) by just adding up \(\le log(p)\) powers of two (eg. 13 = 8 + 4 + 1) together for each \((p, q)\) pair.

For example, if \(ct_1 = [1, 2]\) and \(ct_2 = [3, 4]\), then \(ct_1 \otimes ct_2 = [3, 4, 6, 8]\), and \(enc(\langle ct_1 \otimes ct_2, k \otimes k \rangle) = enc(3k_1k_1 + 4k_1k_2 + 6k_2k_1 + 8k_2k_2)\) could be computed via:

\[enc(k_1 * k_1) + enc(k_1 * k_1 * 2) + enc(k_1 * k_2 * 4) + \]
\[enc(k_2 * k_1 * 2) + enc(k_2 * k_1 * 4) + enc(k_2 * k_2 * 8) \]

Note that each noisy-encryption in the relinearization key has some even error \(2e\), and the equation \(\langle ct_1 \otimes ct_2, k \otimes k \rangle\) itself has some error: if \(\langle ct_1, k \rangle = 2e_1 + m_1\) and \(\langle ct_2, k \rangle = 2e_2 + m_2\), then \(\langle ct_1 \otimes ct_2, k \otimes k \rangle =\) \(\langle ct_1, k \rangle * \langle ct_2, k \rangle =\) \(2(2e_1e_2 + e_1m_2 + e_2m_1) + m_1m_2\). But this total error is still (relatively) small (\(2e_1e_2 + e_1m_2 + e_2m_1\) plus \(n^2 * log(p)\) fixed-size errors from the relinearization key), and the error is even, and so the result of this calculation still gives a value which, when inner-producted with \(k\), gives \(m_1 * m_2 + 2e'\) for some "combined error" \(e'\).

The broader technique we used here is a common trick in homomorphic encryption: provide pieces of the key encrypted under the key itself (or a different key if you are pedantic about avoiding circular security assumptions), such that someone computing on the data can compute the decryption equation, but only in such a way that the output itself is still encrypted. It was used in bootstrapping above, and it's used here; it's best to make sure you understand what's going on in both cases.

This new ciphertext has considerably more error in it: the \(n^2 * log(p)\) different errors from the portions of the relinearization key that we used, plus the \(2(2e_1e_2 + e_1m_2 + e_2m_1)\) from the original outer-product ciphertext.
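Outer-product multiplication followed by relinearization can be sketched end to end in Python. Everything here is a toy under stated assumptions: the modulus `P`, key length `N`, error range, and all function names are hypothetical choices for illustration, and the first key element is fixed to 1 (as the text mentions is common) so that ciphertexts are easy to generate. Nothing about these parameters is secure.

```python
import random

P = 2**40 + 15          # odd modulus (toy size, an assumption)
N = 3                   # key length
D = P.bit_length()      # enough powers of two to cover any value below P

def sym_mod(x):
    r = x % P
    return r - P if r > P // 2 else r

def keygen(rng):
    # First element is 1, which lets us solve for the first ciphertext entry.
    return [1] + [rng.randrange(P) for _ in range(N - 1)]

def noisy_encrypt(key, value, rng):
    # Returns c whose inner product with key equals value + 2e (mod P), e small.
    e = rng.randint(-2, 2)
    tail = [rng.randrange(P) for _ in range(N - 1)]
    head = (value + 2 * e - sum(c * k for c, k in zip(tail, key[1:]))) % P
    return [head] + tail

def decrypt(key, ct):
    return sym_mod(sum(c * k for c, k in zip(ct, key))) % 2

def make_relin_key(key, rng):
    # One noisy encryption of k_i * k_j * 2^d for every triple (i, j, d).
    return {(i, j, d): noisy_encrypt(key, key[i] * key[j] * 2**d, rng)
            for i in range(N) for j in range(N) for d in range(D)}

def multiply(ct1, ct2, relin_key):
    out = [0] * N
    for i in range(N):
        for j in range(N):
            coeff = (ct1[i] * ct2[j]) % P          # one entry of the outer product
            for d in range(D):
                if (coeff >> d) & 1:               # add the needed powers of two
                    piece = relin_key[(i, j, d)]
                    out = [(a + b) % P for a, b in zip(out, piece)]
    return out                                     # length N again, under key k

rng = random.Random(0)
key = keygen(rng)
relin_key = make_relin_key(key, rng)
for m1 in (0, 1):
    for m2 in (0, 1):
        ct = multiply(noisy_encrypt(key, m1, rng), noisy_encrypt(key, m2, rng), relin_key)
        assert decrypt(key, ct) == m1 * m2
```

The relinearization key holds `N * N * D` vectors, matching the \(n^2 * log(p)\) count in the text. With errors of at most 2 per encryption, the accumulated error stays in the low thousands, far below `P / 2`, so the final parity is still the product of the two bits.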
Hence, the new ciphertext still does have quadratically larger error than the original ciphertexts, and so we still haven't solved the problem that the error blows up too quickly. To solve this, we move on to another trick...

Modulus switching

Here, we need to understand an important algebraic fact. A ciphertext is a vector \(ct\), such that \(\langle ct, k \rangle = m+2e\), where \(m \in \{0, 1\}\). But we can also look at the ciphertext from a different "perspective": consider \(\frac{ct}{2}\) (modulo \(p\)). \(\langle \frac{ct}{2}, k \rangle = \frac{m}{2} + e\), where \(\frac{m}{2} \in \{0, \frac{p+1}{2}\}\). Note that because (modulo \(p\)) \((\frac{p+1}{2})*2 = p+1 = 1\), division by 2 (modulo \(p\)) maps \(1\) to \(\frac{p+1}{2}\); this is a very convenient fact for us. The scheme in this section uses both modular division (ie. multiplying by the modular multiplicative inverse) and regular "rounded down" integer division; make sure you understand how both work and how they are different from each other.

That is, the operation of dividing by 2 (modulo \(p\)) converts small even numbers into small numbers, and it converts 1 into \(\frac{p}{2}\) (rounded up). So if we look at \(\frac{ct}{2}\) (modulo \(p\)) instead of \(ct\), decryption involves computing \(\langle \frac{ct}{2}, k \rangle\) and seeing if it's closer to \(0\) or \(\frac{p}{2}\). This "perspective" is much more robust to certain kinds of errors, where you know the error is small but can't guarantee that it's a multiple of 2.

Now, here is something we can do to a ciphertext.

1. Start: \(\langle ct, k \rangle = \{0\ or\ 1\} + 2e\ (mod\ p)\)
2. Divide \(ct\) by 2 (modulo \(p\)): \(\langle ct', k \rangle = \{0\ or\ \frac{p}{2}\} + e\ (mod\ p)\)
3. Multiply \(ct'\) by \(\frac{q}{p}\) using regular "rounded down" integer division: \(\langle ct'', k \rangle = \{0\ or\ \frac{q}{2}\} + e' + e_2\ (mod\ q)\)
4. Multiply \(ct''\) by 2 (modulo \(q\)): \(\langle ct''', k \rangle = \{0\ or\ 1\} + 2e' + 2e_2\ (mod\ q)\)

Step 3 is the crucial one: it converts a ciphertext under modulus \(p\) into a ciphertext under modulus \(q\). The process just involves "scaling down" each element of \(ct'\) by multiplying by \(\frac{q}{p}\) and rounding down, eg. \(floor(56 * \frac{15}{103}) = floor(8.15533..) = 8\).

The idea is this: if \(\langle ct', k \rangle = m*\frac{p}{2} + e\ (mod\ p)\), then we can interpret this as \(\langle ct', k \rangle = p(z + \frac{m}{2}) + e\) for some integer \(z\). Therefore, \(\langle ct' * \frac{q}{p}, k \rangle = q(z + \frac{m}{2}) + e*\frac{q}{p}\). Rounding adds a further small error, so \(\langle ct'', k \rangle = m*\frac{q}{2} + e' + e_2\ (mod\ q)\), where \(e' = e * \frac{q}{p}\), and \(e_2\) is a small error from rounding.

What have we accomplished?
We turned a ciphertext with modulus \(p\) and error \(2e\) into a ciphertext with modulus \(q\) and error \(2(floor(e*\frac{q}{p}) + e_2)\), where the new error is smaller than the original error.

Let's go through the above with an example. Suppose:

- \(ct\) is just one value, \([5612]\)
- \(k = [9]\)
- \(p = 9999\) and \(q = 113\)

\(\langle ct, k \rangle = 5612 * 9 = 50508 = 9999 * 5 + 2 * 256 + 1\), so \(ct\) represents the bit 1, but the error is fairly large (\(e = 256\)).

Step 2: \(ct' = \frac{ct}{2} = 2806\) (remember this is modular division; if \(ct\) were instead \(5613\), then we would have \(\frac{ct}{2} = 7806\)). Checking: \( -
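The same inputs can be traced numerically through the whole procedure: halve modulo \(p\), scale by \(\frac{q}{p}\) with rounded-down integer division, then double modulo \(q\). The sketch below is an illustration under those assumptions; the helper names are hypothetical, and the intermediate values are computed by the code rather than quoted from the text.

```python
def sym_mod(x, n):
    # Symmetric mod: result lies between -n/2 and n/2.
    r = x % n
    return r - n if r > n // 2 else r

def inv2(n):
    # Modular inverse of 2 for odd n, since 2 * (n + 1) / 2 = n + 1 = 1 (mod n).
    return (n + 1) // 2

def mod_switch(ct, p, q):
    halved = [(c * inv2(p)) % p for c in ct]       # divide by 2 (modulo p)
    scaled = [(c * q) // p for c in halved]        # multiply by q/p, rounding down
    return [(2 * c) % q for c in scaled]           # multiply by 2 (modulo q)

def noisy_value(ct, key, n):
    # The quantity m + 2e that decryption sees before taking mod 2.
    return sym_mod(sum(c * k for c, k in zip(ct, key)), n)

ct, key, p, q = [5612], [9], 9999, 113
new_ct = mod_switch(ct, p, q)

print(noisy_value(ct, key, p))       # 513 = 1 + 2 * 256
print(new_ct)                        # [62]
print(noisy_value(new_ct, key, q))   # -7 = 1 + 2 * (-4)
```

Both noisy values are odd, so both ciphertexts decrypt to the bit 1, while the error term has shrunk from 256 to -4 in absolute terms.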

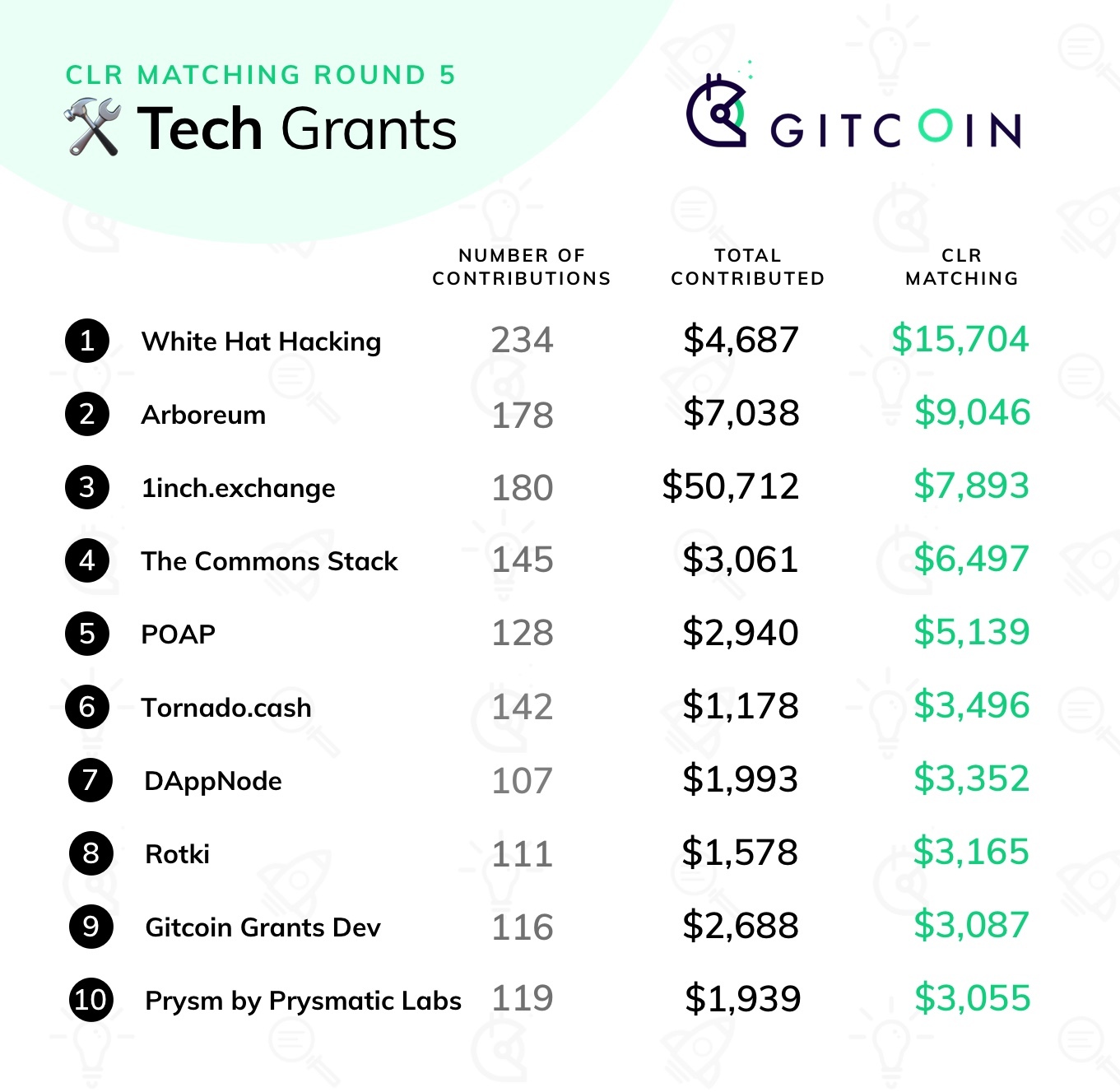

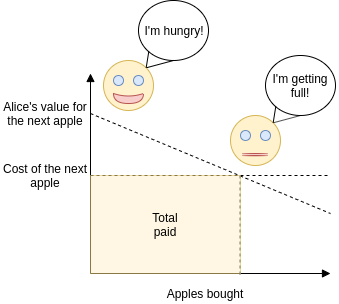

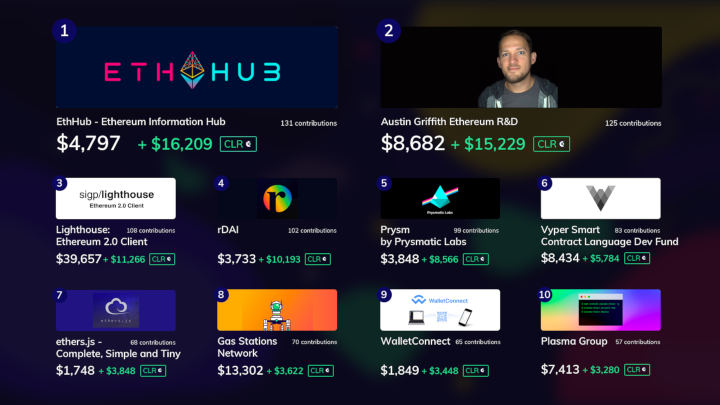

Gitcoin Grants Round 5 Retrospective

2020 Apr 30

Special thanks to Kevin Owocki and Frank Chen for help and review

Round 5 of Gitcoin Grants has just finished, with $250,000 of matching split between tech, media, and the new (non-Ethereum-centric) category of "public health". In general, it seems like the mechanism and the community are settling down into a regular rhythm. People know what it means to contribute, people know what to expect, and the results emerge in a relatively predictable pattern - even if which specific grants get the most funds is not so easy to predict.

Stability of income

So let's go straight into the analysis. One important property worth looking at is stability of income across rounds: do projects that do well in round N also tend to do well in round N+1? Stability of income is very important if we want to support an ecosystem of "quadratic freelancers": we want people to feel comfortable relying on their income knowing that it will not completely disappear the next round. On the other hand, it would be harmful if some recipients became completely entrenched, with no opportunity for new projects to come in and compete for the pot, so there is a need for a balance.

On the media side, we do see some balance between stability and dynamism. Week in Ethereum had the highest total amount received in both the previous round and the current round. EthHub and Bankless are also near the top in both the current round and the previous round. On the other hand, Antiprosynthesis, the (beloved? notorious? famous?) Twitter info-warrior, has decreased from $13,813 to $5,350, while Chris Blec's YouTube channel has increased from $5,851 to $12,803.
So some churn, but also some continuity between rounds.

On the tech side, we see much more churn in the winners, with a less clear relationship between income last round and income this round. Last round, the winner was Tornado Cash, claiming $30,783; this round, they are down to $8,154. This round, the three roughly-even winners are Samczsun ($4,631 contributions + $15,704 match = $20,335 total), Arboreum ($16,084 contributions + $9,046 match = $25,128 total) and 1inch.exchange ($58,566 contributions + $7,893 match = $66,459 total), in the latter case the bulk coming from one contribution. In the previous round, those three winners were not even in the top ten, and in some cases not even part of Gitcoin Grants at all.

These numbers show us two things. First, large parts of the Gitcoin community seem to be in the mindset of treating grants not as a question of "how much do you deserve for your last two months of work?", but rather as a one-off reward for years of contributions in the past. This was one of the strongest rebuttals that I received to my criticism of Antiprosynthesis receiving $13,813 in the last round: that the people who contributed to that award did not see it as two months' salary, but rather as a reward for years of dedication and work for the Ethereum ecosystem. In the next round, contributors were content that the debt was sufficiently repaid, and so they moved on to give a similar gift of appreciation and gratitude to Chris Blec.

That said, not everyone contributes in this way. For example, Prysm got $7,966 last round and $8,033 this round, and Week in Ethereum is consistently well-rewarded ($16,727 previous, $12,195 current), and EthHub saw less stability but still kept half its income ($13,515 previous, $6,705 current) even amid a 20% drop to the matching pool size as some funds were redirected to public health.
So there definitely are some contributors that are getting almost a reasonable monthly salary from Gitcoin Grants (yes, even these amounts are all serious underpayment, but remember that the pool of funds Gitcoin Grants has to distribute in the first place is quite small, so there's no allocation that would not seriously underpay most people; the hope is that in the future we will find ways to make the matching pot grow bigger).

Why didn't more people use recurring contributions?

One feature that was tested this round to try to improve stability was recurring contributions: users could choose to split their contribution among multiple rounds. However, the feature was not used often: out of over 8,000 total contributions, only 120 actually made recurring contributions. I can think of three possible explanations for this:

1. People just don't want to give recurring contributions; they genuinely prefer to freshly rethink who they are supporting every round.
2. People would be willing to give more recurring contributions, but there is some kind of "market failure" stopping them; that is, it's collectively optimal for everyone to give more recurring contributions, but it's not in any individual contributor's interest to be the first to do so.
3. There's some UI inconveniences or other "incidental" obstacles preventing recurring contributions.

In a recent call with the Gitcoin team, hypothesis (3) was mentioned frequently. A specific issue was that people were worried about making recurring contributions because they were concerned whether or not the money that they lock up for a recurring contribution would be safe. Improving the payment system and notification workflow may help with this.
Another option is to move away from explicit "streaming" and instead simply have the UI provide an option for repeating the last round's contributions and making edits from there.

Hypothesis (1) also should be taken seriously; there's genuine value in preventing ossification and allowing space for new entrants. But I want to zoom in particularly on hypothesis (2), the coordination failure hypothesis.

My explanation of hypothesis (2) starts, interestingly enough, with a defense of (1): why ossification is genuinely a risk. Suppose that there are two projects, A and B, and suppose that they are equal quality. But A already has an established base of contributors; B does not (we'll say for illustration it only has a few existing contributors). Here's how much matching you are contributing by participating in each project:

[Figure: matching contributed by participating in each project. Panels: "Contributing to A", "Contributing to B".]

Clearly, you have more impact by supporting A, and so A gets even more contributors and B gets fewer; the rich get richer. Even if project B was somewhat better, the greater impact from supporting A could still create a lock-in that reinforces A's position. The current everyone-starts-from-zero-in-each-round mechanism greatly limits this type of entrenchment, because, well, everyone's matching gets reset and starts from zero.

However, a very similar effect also is the cause behind the market failure preventing stable recurring contributions, and the every-round-reset actually exacerbates it. Look at the same picture above, except instead of thinking of A and B as two different projects, think of them as the same project in the current round and in the next round.

We simplify the model as follows. An individual has two choices: contribute $10 in the current round, or contribute $5 in the current round and $5 in the next round.
If the matchings in the two rounds were equal, then the latter option would actually be more favorable: because the matching is proportional to the square root of the donation size, the former might give you eg. a $200 match now, but the latter would give you $141 in the current round + $141 in the next round = $282. But if you see a large mass of people contributing in the current round, and you expect much fewer people to contribute in the second round, then the choice is not $200 versus $141 + $141, it might be $200 versus $141 + $5. And so you're better off joining the current round's frenzy. We can mathematically analyze the equilibrium.

So there is a substantial region within which the bad equilibrium of everyone concentrating is sticky: if more than about 3/4 of contributors are expected to concentrate, it seems in your interest to also concentrate. A mathematically astute reader may note that there is always some intermediate strategy that involves splitting but at a ratio different from 50/50, which you can prove performs better than either full concentrating or the even split, but here we get back to hypothesis (3) above: the UI doesn't offer such a complex menu of choices, it just offers the choice of a one-time contribution or a recurring contribution, so people pick one or the other.

How might we fix this? One option is to add a bit of continuity to matching ratios: when computing pairwise matches, match against not just the current round's contributors but, say, 1/3 of the previous round's contributors as well. This makes some philosophical sense: the objective of quadratic funding is to subsidize contributions to projects that are detected to be public goods because multiple people have contributed to them, and contributions in the previous round are certainly also evidence of a project's value, so why not reuse those?
So here, moving away from everyone-starts-from-zero toward this partial carryover of matching ratios would mitigate the round concentration effect - but, of course, it would exacerbate the risk of entrenchment. Hence, some experimentation and balance may be in order. A broader philosophical question is, is there really a deep inherent tradeoff between risk of entrenchment and stability of income, or is there some way we could get both?

Responses to negative contributions

This round also introduced negative contributions, a feature proposed in my review of the previous round. But as with recurring contributions, very few people made negative contributions, to the point where their impact on the results was negligible. Also, there was active opposition to negative contributions.

Source: honestly I have no idea, someone else sent it to me and they forgot where they found it. Sorry :(

The main source of opposition was basically what I predicted in the previous round. Adding a mechanism that allows people to penalize others, even if deservedly so, can have tricky and easily harmful social consequences. Some people even opposed the negative contribution mechanism to the point where they took care to give positive contributions to everyone who received a negative contribution.

How do we respond? To me it seems clear that, in the long run, some mechanism of filtering out bad projects, and ideally compensating for overexcitement into good projects, will have to exist. It doesn't necessarily need to be integrated as a symmetric part of the QF, but there does need to be a filter of some form. And this mechanism, whatever form it will take, invariably opens up the possibility of the same social dynamics. So there is a challenge that will have to be solved no matter how we do it.

One approach would be to hide more information: instead of just hiding who made a negative contribution, outright hide the fact that a negative contribution was made.
Many opponents of negative contributions explicitly indicated that they would be okay (or at least more okay) with such a model. And indeed (see the next section), this is a direction we will have to go anyway. But it would come at a cost - effectively hiding negative contributions would mean not giving as much real-time feedback into what projects got how much funds.

Stepping up the fight against collusion

This round saw much larger-scale attempts at collusion. It does seem clear that, at current scales, stronger protections against manipulation are going to be required. The first thing that can be done is adding a stronger identity verification layer than Github accounts; this is something that the Gitcoin team is already working on. There is definitely a complex tradeoff between security and inclusiveness to be worked through, but it is not especially difficult to implement a first version. And if the identity problem is solved to a reasonable extent, that will likely be enough to prevent collusion at current scales. But in the longer term, we are going to need protection not just against manipulating the system by making many fake accounts, but also against collusion via bribes (explicit and implicit).

MACI is the solution that I proposed (and Barry Whitehat and co are implementing) to solve this problem. Essentially, MACI is a cryptographic construction that allows for contributions to projects to happen on-chain in a privacy-preserving, encrypted form, that allows anyone to cryptographically verify that the mechanism is being implemented correctly, but prevents participants from being able to prove to a third party that they made any particular contribution. Unprovability means that if someone tries to bribe others to contribute to their project, the bribe recipients would have no way to prove that they actually contributed to that project, making the bribe unenforceable.
Benign "collusion" in the form of friends and family supporting each other would still happen, as people would not easily lie to each other at such small scales, but any broader collusion would be very difficult to maintain.

However, we do need to think through some of the second-order consequences that integrating MACI would introduce. The biggest blessing, and curse, of using MACI is that contributions become hidden. Identities necessarily become hidden, but even the exact timing of contributions would need to be hidden to prevent deanonymization through timing (to prove that you contributed, make the total amount jump up between 17:40 and 17:42 today). Instead, for example, totals could be provided and updated once per day. Note that as a corollary negative contributions would be hidden as well; they would only appear if they exceeded all positive contributions for an entire day (and if even that is not desired then the mechanism for when balances are updated could be tweaked to further hide downward changes).

The challenge with hiding contributions is that we lose the "social proof" motivator for contributing: if contributions are unprovable you can't as easily publicly brag about a contribution you made. My best proposal for solving this is for the mechanism to publish one extra number: the total amount that a particular participant contributed (counting only projects that have received at least 10 contributors to prevent inflating one's number by self-dealing). Individuals would then have a generic "proof-of-generosity" that they contributed some specific total amount, and could publicly state (without proof) what projects it was that they supported. But this is all a significant change to the user experience that will require multiple rounds of experimentation to get right.

Conclusions

All in all, Gitcoin Grants is establishing itself as a significant pillar of the Ethereum ecosystem that more and more projects are relying on for some or all of their support.
While it has a relatively low amount of funding at present, and so inevitably underfunds almost everything it touches, we hope that over time we'll continue to see larger sources of funding for the matching pools appear. One option is MEV auctions, another is that new or existing token projects looking to do airdrops could provide the tokens to a matching pool. A third is transaction fees of various applications. With larger amounts of funding, Gitcoin Grants could serve as a more significant funding stream - though to get to that point, further iteration and work on fine-tuning the mechanism will be required. Additionally, this round saw Gitcoin Grants' first foray into applications beyond Ethereum with the health section. There is growing interest in quadratic funding from local government bodies and other non-blockchain groups, and it would be very valuable to see quadratic funding more broadly deployed in such contexts. That said, there are unique challenges there too. First, there's issues around onboarding people who do not already have cryptocurrency. Second, the Ethereum community is naturally expert in the needs of the Ethereum community, but neither it nor average people are expert in, eg. medical support for the coronavirus pandemic. We should expect quadratic funding to perform worse when the participants are not experts in the domain they're being asked to contribute to. Will non-blockchain uses of QF focus on domains where there's a clear local community that's expert in its own needs, or will people try larger-scale deployments soon? If we do see larger-scale deployments, how will those turn out? There's still a lot of questions to be answered.

Gitcoin Grants Round 5 Retrospective Gitcoin Grants Round 5 Retrospective2020 Apr 30 See all posts Gitcoin Grants Round 5 Retrospective Special thanks to Kevin Owocki and Frank Chen for help and reviewRound 5 of Gitcoin Grants has just finished, with $250,000 of matching split between tech, media, and the new (non-Ethereum-centric) category of "public health". In general, it seems like the mechanism and the community are settling down into a regular rhythm. People know what it means to contribute, people know what to expect, and the results emerge in a relatively predictable pattern - even if which specific grants get the most funds is not so easy to predict. Stability of incomeSo let's go straight into the analysis. One important property worth looking at is stability of income across rounds: do projects that do well in round N also tend to do well in round N+1? Stability of income is very important if we want to support an ecosystem of "quadratic freelancers": we want people to feel comfortable relying on their income knowing that it will not completely disappear the next round. On the other hand, it would be harmful if some recipients became completely entrenched, with no opportunity for new projects to come in and compete for the pot, so there is a need for a balance.On the media side, we do see some balance between stability and dynamism: Week in Ethereum had the highest total amount received in both the previous round and the current round. EthHub and Bankless are also near the top in both the current round and the previous round. On the other hand, Antiprosynthesis, the (beloved? notorious? famous?) Twitter info-warrior, has decreased from $13,813 to $5,350, while Chris Blec's YouTube channel has increased from $5,851 to $12,803. 
So some churn, but also some continuity between rounds.

On the tech side, we see much more churn in the winners, with a less clear relationship between income last round and income this round: Last round, the winner was Tornado Cash, claiming $30,783; this round, they are down to $8,154. This round, the three roughly-even winners are Samczsun ($4,631 contributions + $15,704 match = $20,335 total), Arboreum ($16,084 contributions + $9,046 match = $25,128 total) and 1inch.exchange ($58,566 contributions + $7,893 match = $66,459 total), in the latter case the bulk coming from one contribution:

In the previous round, those three winners were not even in the top ten, and in some cases not even part of Gitcoin Grants at all.

These numbers show us two things. First, large parts of the Gitcoin community seem to be in the mindset of treating grants not as a question of "how much do you deserve for your last two months of work?", but rather as a one-off reward for years of contributions in the past. This was one of the strongest rebuttals that I received to my criticism of Antiprosynthesis receiving $13,813 in the last round: that the people who contributed to that award did not see it as two months' salary, but rather as a reward for years of dedication and work for the Ethereum ecosystem. In the next round, contributors were content that the debt was sufficiently repaid, and so they moved on to give a similar gift of appreciation and gratitude to Chris Blec.

That said, not everyone contributes in this way. For example, Prysm got $7,966 last round and $8,033 this round, and Week in Ethereum is consistently well-rewarded ($16,727 previous, $12,195 current), and EthHub saw less stability but still kept half its income ($13,515 previous, $6,705 current) even amid a 20% drop to the matching pool size as some funds were redirected to public health.
So there definitely are some recipients that are getting almost a reasonable monthly salary from Gitcoin Grants (yes, even these amounts are all serious underpayment, but remember that the pool of funds Gitcoin Grants has to distribute in the first place is quite small, so there's no allocation that would not seriously underpay most people; the hope is that in the future we will find ways to make the matching pot grow bigger).

Why didn't more people use recurring contributions?

One feature that was tested this round to try to improve stability was recurring contributions: users could choose to split their contribution among multiple rounds. However, the feature was not used often: out of over 8,000 total contributions, only 120 were recurring. I can think of three possible explanations for this:

1. People just don't want to give recurring contributions; they genuinely prefer to freshly rethink who they are supporting every round.
2. People would be willing to give more recurring contributions, but there is some kind of "market failure" stopping them; that is, it's collectively optimal for everyone to give more recurring contributions, but it's not in any individual contributor's interest to be the first to do so.
3. There are some UI inconveniences or other "incidental" obstacles preventing recurring contributions.

In a recent call with the Gitcoin team, hypothesis (3) was mentioned frequently. A specific issue was that people were reluctant to make recurring contributions because they were unsure whether the money that they lock up for a recurring contribution would be safe. Improving the payment system and notification workflow may help with this.
Another option is to move away from explicit "streaming" and instead simply have the UI provide an option for repeating the last round's contributions and making edits from there.

Hypothesis (1) should also be taken seriously; there's genuine value in preventing ossification and allowing space for new entrants. But I want to zoom in particularly on hypothesis (2), the coordination failure hypothesis.

My explanation of hypothesis (2) starts, interestingly enough, with a defense of (1): why ossification is genuinely a risk. Suppose that there are two projects, A and B, and suppose that they are of equal quality. But A already has an established base of contributors; B does not (we'll say for illustration it only has a few existing contributors). Here's how much matching you are contributing by participating in each project:

[Figure: Contributing to A | Contributing to B]

Clearly, you have more impact by supporting A, and so A gets even more contributors and B gets fewer; the rich get richer. Even if project B was somewhat better, the greater impact from supporting A could still create a lock-in that reinforces A's position. The current everyone-starts-from-zero-in-each-round mechanism greatly limits this type of entrenchment, because, well, everyone's matching gets reset and starts from zero.

However, a very similar effect is also the cause of the market failure preventing stable recurring contributions, and the every-round-reset actually exacerbates it. Look at the same picture above, except instead of thinking of A and B as two different projects, think of them as the same project in the current round and in the next round.

We simplify the model as follows. An individual has two choices: contribute $10 in the current round, or contribute $5 in the current round and $5 in the next round.
If the matchings in the two rounds were equal, then the latter option would actually be more favorable: because the matching is proportional to the square root of the donation size, the former might give you e.g. a $200 match now, but the latter would give you $141 in the current round + $141 in the next round = $282. But if you see a large mass of people contributing in the current round, and you expect far fewer people to contribute in the second round, then the choice is not $200 versus $141 + $141, it might be $200 versus $141 + $5. And so you're better off joining the current round's frenzy. We can mathematically analyze the equilibrium:

So there is a substantial region within which the bad equilibrium of everyone concentrating is sticky: if more than about 3/4 of contributors are expected to concentrate, it seems in your interest to also concentrate. A mathematically astute reader may note that there is always some intermediate strategy that involves splitting but at a ratio different from 50/50, which you can prove performs better than either full concentrating or the even split, but here we get back to hypothesis (3) above: the UI doesn't offer such a complex menu of choices, it just offers the choice of a one-time contribution or a recurring contribution, so people pick one or the other.

How might we fix this? One option is to add a bit of continuity to matching ratios: when computing pairwise matches, match against not just the current round's contributors but, say, 1/3 of the previous round's contributors as well:

This makes some philosophical sense: the objective of quadratic funding is to subsidize contributions to projects that are detected to be public goods because multiple people have contributed to them, and contributions in the previous round are certainly also evidence of a project's value, so why not reuse those?
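
The concentrate-versus-split comparison can be reproduced with a small sketch. It assumes plain (unbounded) quadratic funding rather than the pairwise-bounded variant mentioned above, and the crowd sizes, the `carryover` parameter and all function names are made up for illustration; they are not Gitcoin's implementation. It also applies the carryover only to the next round, to isolate its effect on the thin round.

```python
import math


def sqrt_sum(contributions):
    """Sum of square roots of a list of contribution amounts."""
    return sum(math.sqrt(c) for c in contributions)


def marginal_match(amount, current, previous=(), carryover=0.0):
    """Extra matching unlocked by adding one contribution of `amount`.

    Assumes plain QF, where a project's match is (sum of sqrt(c_i))^2 - sum(c_i).
    Adding a contribution a then raises the match by 2 * S * sqrt(a), where S is
    the sum of square roots of the contributions it is matched against.
    `carryover` is a hypothetical weight on the previous round's contributors.
    """
    s = sqrt_sum(current) + carryover * sqrt_sum(previous)
    return 2 * s * math.sqrt(amount)


# Hypothetical crowds: ten others give $10 each now, one gives $1.25 next round.
busy_round = [10.0] * 10
thin_round = [1.25]

# Option 1: give the whole $10 in the busy current round.
concentrate = marginal_match(10, busy_round)

# Option 2a: split $5 + $5, if the next round were as busy as this one.
split_equal = 2 * marginal_match(5, busy_round)

# Option 2b: split $5 + $5, but the next round is nearly empty.
split_thin = marginal_match(5, busy_round) + marginal_match(5, thin_round)

# Option 2c: as 2b, but the next round also matches against 1/3 of this
# round's contributors (the carryover idea, applied to the next round only).
split_carry = marginal_match(5, busy_round) + marginal_match(
    5, thin_round, previous=busy_round, carryover=1 / 3
)

for label, value in [
    ("concentrate $10 now", concentrate),
    ("split, equal rounds", split_equal),
    ("split, thin next round", split_thin),
    ("split, thin next round + 1/3 carryover", split_carry),
]:
    print(f"{label}: ${value:.2f}")
```

With these made-up numbers the four cases come out to roughly $200, $283, $146 and $194: splitting beats concentrating when the rounds are equally busy, loses badly when the next round is thin, and recovers most of the gap once a third of the current round's contributors carry over.
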
Moving away from everyone-starts-from-zero toward this partial carryover of matching ratios would mitigate the round concentration effect - but, of course, it would exacerbate the risk of entrenchment. Hence, some experimentation and balance may be in order. A broader philosophical question: is there really a deep inherent tradeoff between risk of entrenchment and stability of income, or is there some way we could get both?

Responses to negative contributions

This round also introduced negative contributions, a feature proposed in my review of the previous round. But as with recurring contributions, very few people made negative contributions, to the point where their impact on the results was negligible. Also, there was active opposition to negative contributions:

Source: honestly I have no idea, someone else sent it to me and they forgot where they found it. Sorry :(

The main source of opposition was basically what I predicted in the previous round. Adding a mechanism that allows people to penalize others, even if deservedly so, can have tricky and easily harmful social consequences. Some people even opposed the negative contribution mechanism to the point where they took care to give positive contributions to everyone who received a negative contribution.

How do we respond? To me it seems clear that, in the long run, some mechanism of filtering out bad projects, and ideally compensating for overexcitement about good projects, will have to exist. It doesn't necessarily need to be integrated as a symmetric part of the QF, but there does need to be a filter of some form. And this mechanism, whatever form it will take, invariably opens up the possibility of the same social dynamics. So there is a challenge that will have to be solved no matter how we do it.

One approach would be to hide more information: instead of just hiding who made a negative contribution, outright hide the fact that a negative contribution was made.
Many opponents of negative contributions explicitly indicated that they would be okay (or at least more okay) with such a model. And indeed (see the next section), this is a direction we will have to go anyway. But it would come at a cost - effectively hiding negative contributions would mean giving less real-time feedback about which projects received how much funding.

Stepping up the fight against collusion

This round saw much larger-scale attempts at collusion:

It does seem clear that, at current scales, stronger protections against manipulation are going to be required. The first thing that can be done is adding a stronger identity verification layer than GitHub accounts; this is something that the Gitcoin team is already working on. There is definitely a complex tradeoff between security and inclusiveness to be worked through, but it is not especially difficult to implement a first version. And if the identity problem is solved to a reasonable extent, that will likely be enough to prevent collusion at current scales. But in the longer term, we are going to need protection not just against manipulating the system by making many fake accounts, but also against collusion via bribes (explicit and implicit).

MACI is the solution that I proposed (and Barry Whitehat and co are implementing) to solve this problem. Essentially, MACI is a cryptographic construction that allows contributions to projects to happen on-chain in a privacy-preserving, encrypted form, that allows anyone to cryptographically verify that the mechanism is being implemented correctly, but prevents participants from being able to prove to a third party that they made any particular contribution. Unprovability means that if someone tries to bribe others to contribute to their project, the bribe recipients would have no way to prove that they actually contributed to that project, making the bribe unenforceable.
Benign "collusion" in the form of friends and family supporting each other would still happen, as people would not easily lie to each other at such small scales, but any broader collusion would be very difficult to maintain.

However, we do need to think through some of the second-order consequences that integrating MACI would introduce. The biggest blessing, and curse, of using MACI is that contributions become hidden. Identities necessarily become hidden, but even the exact timing of contributions would need to be hidden to prevent deanonymization through timing (to prove that you contributed, make the total amount jump up between 17:40 and 17:42 today). Instead, for example, totals could be provided and updated once per day. Note that as a corollary negative contributions would be hidden as well; they would only appear if they exceeded all positive contributions for an entire day (and if even that is not desired then the mechanism for when balances are updated could be tweaked to further hide downward changes).

The challenge with hiding contributions is that we lose the "social proof" motivator for contributing: if contributions are unprovable you can't as easily publicly brag about a contribution you made. My best proposal for solving this is for the mechanism to publish one extra number: the total amount that a particular participant contributed (counting only projects that have received at least 10 contributors to prevent inflating one's number by self-dealing). Individuals would then have a generic "proof-of-generosity" that they contributed some specific total amount, and could publicly state (without proof) what projects it was that they supported. But this is all a significant change to the user experience that will require multiple rounds of experimentation to get right.

Conclusions

All in all, Gitcoin Grants is establishing itself as a significant pillar of the Ethereum ecosystem that more and more projects are relying on for some or all of their support.
While it has a relatively low amount of funding at present, and so inevitably underfunds almost everything it touches, we hope that over time we'll continue to see larger sources of funding for the matching pools appear. One option is MEV auctions, another is that new or existing token projects looking to do airdrops could provide the tokens to a matching pool. A third is transaction fees of various applications. With larger amounts of funding, Gitcoin Grants could serve as a more significant funding stream - though to get to that point, further iteration and work on fine-tuning the mechanism will be required.

Additionally, this round saw Gitcoin Grants' first foray into applications beyond Ethereum with the health section. There is growing interest in quadratic funding from local government bodies and other non-blockchain groups, and it would be very valuable to see quadratic funding more broadly deployed in such contexts. That said, there are unique challenges there too. First, there are issues around onboarding people who do not already have cryptocurrency. Second, the Ethereum community is naturally expert in the needs of the Ethereum community, but neither it nor average people are expert in, e.g., medical support for the coronavirus pandemic. We should expect quadratic funding to perform worse when the participants are not experts in the domain they're being asked to contribute to. Will non-blockchain uses of QF focus on domains where there's a clear local community that's expert in its own needs, or will people try larger-scale deployments soon? If we do see larger-scale deployments, how will those turn out? There are still a lot of questions to be answered.