Found 1087 results related to heyuan

-

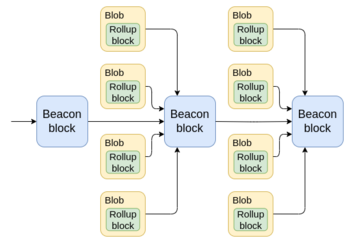

How do layer 2s really differ from execution sharding?

2024 May 23

One of the points that I made in my post two and a half years ago on "the Endgame" is that the different future development paths for a blockchain, at least technologically, look surprisingly similar. In both cases, you have a very large number of transactions onchain, and processing them requires (i) a large amount of computation, and (ii) a large amount of data bandwidth. Regular Ethereum nodes, such as the 2 TB reth archive node running on the laptop I'm using to write this article, are not powerful enough to verify such a huge amount of data and computation directly, even with heroic software engineering work and Verkle trees. Instead, in both "L1 sharding" and a rollup-centric world, ZK-SNARKs are used to verify computation, and data availability sampling (DAS) to verify data availability.

The DAS in both cases is the same. The ZK-SNARKs in both cases are the same tech, except in one case they are smart contract code and in the other case they are an enshrined feature of the protocol. In a very real technical sense, Ethereum is doing sharding, and rollups are shards. This raises a natural question: what is the difference between these two worlds?

One answer is that the consequences of code bugs are different: in a rollup world, coins get lost, and in a shard chain world, you have consensus failures. But I expect that as protocols solidify, and as formal verification technology improves, the importance of bugs will decrease. So what are the differences between the two visions that we can expect will stick into the long term?

Diversity of execution environments

One of the ideas that we briefly played around with in Ethereum in 2019 was execution environments.
Essentially, Ethereum would have different "zones" that could have different rules for how accounts work (including totally different approaches like UTXOs), how the virtual machine works, and other features. This would enable a diversity of approaches in parts of the stack where it would be difficult to achieve if Ethereum were to try to do everything by itself.

In the end, we ended up abandoning some of the more ambitious plans, and simply kept the EVM. However, Ethereum L2s (including rollups, validiums and Plasmas) arguably ended up serving the role of execution environments. Today, we generally focus on EVM-equivalent L2s, but this ignores the diversity of many alternative approaches:

- Arbitrum Stylus, which adds a second virtual machine based on WASM alongside the EVM.
- Fuel, which uses a Bitcoin-like (but more feature-complete) UTXO-based architecture.
- Aztec, which introduces a new language and programming paradigm designed around ZK-SNARK-based privacy-preserving smart contracts.

UTXO-based architecture. Source: Fuel documentation.

We could try to make the EVM into a super-VM that covers all possible paradigms, but that would lead to much less effective implementations of each of these concepts than allowing platforms like these to specialize.

Security tradeoffs: scale and speed

Ethereum L1 provides a really strong security guarantee. If some piece of data is inside a block that is finalized on L1, the entire consensus (including, in extreme situations, social consensus) works to ensure that the data will not be edited in a way that goes against the rules of the application that put that data there, that any execution triggered by the data will not be reverted, and that the data will remain accessible.

To achieve these guarantees, Ethereum L1 is willing to accept high costs. At the time of this writing, the transaction fees are relatively low: layer 2s charge less than a cent per transaction, and even the L1 is under $1 for a basic ETH transfer.
These costs may remain low in the future if technology improves fast enough that available block space grows to keep up with demand - but they may not. And even $0.01 per transaction is too high for many non-financial applications, eg. social media or gaming.

But social media and gaming do not require the same security model as L1. It's ok if someone can pay a million dollars to revert a record of them losing a chess game, or make one of your Twitter posts look like it was published three days after it actually was. And so these applications should not have to pay for the same security costs. An L2-centric approach enables this, by supporting a spectrum of data availability approaches from rollups to plasma to validiums.

Different L2 types for different use cases.

Another security tradeoff arises around the issue of passing assets from L2 to L2. In the limit (5-10 years into the future), I expect that all rollups will be ZK rollups, and hyper-efficient proof systems like Binius and Circle STARKs with lookups, plus proof aggregation layers, will make it possible for L2s to provide finalized state roots in each slot. For now, however, we have a complicated mix of optimistic rollups and ZK rollups with various proof time windows. If we had implemented execution sharding in 2021, the security model to keep shards honest would have been optimistic rollups, not ZK - and so L1 would have had to manage the systemically-complex fraud proof logic on-chain and have a week-long withdrawal period for moving assets from shard to shard. But like code bugs, I think this issue is ultimately temporary.

A third, and once again more lasting, dimension of security tradeoff is transaction speed. Ethereum has blocks every 12 seconds, and is unwilling to go much faster because that would overly centralize the network. Many L2s, however, are exploring block times of a few hundred milliseconds.
12 seconds is already not that bad: on average, a user who submits a transaction needs to wait ~6-7 seconds to get included into a block (not just 6 because of the possibility that the next block will not include them). This is comparable to what I have to wait when making a payment on my credit card. But many applications demand much higher speed, and L2s provide it.

To provide this higher speed, L2s rely on preconfirmation mechanisms: the L2's own validators digitally sign a promise to include the transaction at a particular time, and if the transaction does not get included, they can be penalized. A mechanism called StakeSure generalizes this further.

L2 preconfirmations.

Now, we could try to do all of this on layer 1. Layer 1 could incorporate a "fast pre-confirmation" and "slow final confirmation" system. It could incorporate different shards with different levels of security. However, this would add a lot of complexity to the protocol. Furthermore, doing it all on layer 1 would risk overloading the consensus, because a lot of the higher-scale or faster-throughput approaches have higher centralization risks or require stronger forms of "governance", and if done at L1, the effects of those stronger demands would spill over to the rest of the protocol. By offering these tradeoffs through layer 2s, Ethereum can mostly avoid these risks.

The benefits of layer 2s on organization and culture

Imagine that a country gets split in half, and one half becomes capitalist and the other becomes highly government-driven (unlike when this happens in reality, assume that in this thought experiment it's not the result of any kind of traumatic war; rather, one day a border magically goes up and that's it). In the capitalist part, the restaurants are all run by various combinations of decentralized ownership, chains and franchises. In the government-driven part, they are all branches of the government, like police stations. On the first day, not much would change.
People largely follow their existing habits, and what works and what doesn't work depends on technical realities like labor skill and infrastructure. A year later, however, you would expect to see large changes, because the differing structures of incentives and control lead to large changes in behavior, which affect who comes, who stays and who goes, what gets built, what gets maintained, and what gets left to rot.

Industrial organization theory covers a lot of these distinctions: it talks about the differences not just between a government-run economy and a capitalist economy, but also between an economy dominated by large franchises and an economy where eg. each supermarket is run by an independent entrepreneur. I would argue that the difference between a layer-1-centric ecosystem and a layer-2-centric ecosystem falls along similar lines.

A "core devs run everything" architecture gone very wrong.

I would phrase the key benefit to Ethereum of being a layer-2-centric ecosystem as follows:

Because Ethereum is a layer-2-centric ecosystem, you are free to go independently build a sub-ecosystem that is yours with your unique features, and is at the same time a part of a greater Ethereum.

If you're just building an Ethereum client, you're part of a greater Ethereum, and while you have some room for creativity, it's far less than what's available to L2s. And if you're building a completely independent chain, you have maximal room for creativity, but you lose the benefits like shared security and shared network effects.
Layer 2s form a happy medium.

Layer 2s do not just create a technical opportunity to experiment with new execution environments and security tradeoffs to achieve scale, flexibility and speed: they also create an incentive to do so, both for the developers to build and maintain it, and for the community to form around and support it.

The fact that each L2 is isolated also means that deploying new approaches is permissionless: there's no need to convince all the core devs that your new approach is "safe" for the rest of the chain. If your L2 fails, that's on you. Anyone can work on totally weird ideas (eg. Intmax's approach to Plasma), and even if they get completely ignored by the Ethereum core devs, they can keep building and eventually deploy. L1 features and precompiles are not like this, and even in Ethereum, what succeeds and what fails in L1 development often ends up depending on politics to a higher degree than we would like. Regardless of what theoretically could get built, the distinct incentives created by an L1-centric ecosystem and an L2-centric ecosystem end up heavily influencing what does get built in practice, with what level of quality and in what order.

What challenges does Ethereum's layer-2-centric ecosystem have?

A layer 1 + layer 2 architecture gone very wrong.

There is a key challenge to this kind of layer-2-centric approach, and it's a problem that layer-1-centric ecosystems do not have to face to nearly the same extent: coordination. In other words, while Ethereum branches out, the challenge is in preserving the fundamental property that it still all feels like "Ethereum", and has the network effects of being Ethereum rather than being N separate chains. Today, the situation is suboptimal in many ways:

- Moving tokens from one layer 2 to another often requires centralized bridge platforms, and is complicated for the average user. If you have coins on Optimism, you can't just paste someone's Arbitrum address into your wallet, and send them funds.
- Cross-chain smart contract wallet support is not great - both for personal smart contract wallets and for organizational wallets (including DAOs). If you change your key on one L2, you also need to go change your key on every other L2.
- Decentralized validation infrastructure is often lacking. Ethereum is finally starting to have decent light clients, such as Helios. However, there is no point in this if activity is all happening on layer 2s that all require their own centralized RPCs. In principle, once you have the Ethereum header chain, making light clients for L2s is not hard; in practice, there's far too little emphasis on it.

There are efforts working to improve all three. For cross-chain token exchange, the ERC-7683 standard is an emerging option, and unlike existing "centralized bridges" it does not have any enshrined central operator, token or governance. For cross-chain accounts, the approach most wallets are taking is to use cross-chain replayable messages to update keys in the short term, and keystore rollups in the longer term. Light clients for L2s are starting to emerge, eg. Beerus for Starknet. Additionally, recent improvements in user experience through next-generation wallets have already solved much more basic problems like removing the need for users to manually switch to the right network to access a dapp.

Rabby showing an integrated view of asset balances across multiple chains. In the not-so-long-ago dark old days, wallets did not do this!

But it is important to recognize that layer-2-centric ecosystems do swim against the current to some extent when trying to coordinate. Individual layer 2s don't have a natural economic incentive to build the infrastructure to coordinate: small ones don't, because they would only see a small share of the benefit of their contributions, and large ones don't, because they would benefit as much or more from strengthening their own local network effects.
If each layer 2 is separately optimizing its individual piece, and no one is thinking about how each piece fits into the broader whole, we get failures like the urbanism dystopia in the picture a few paragraphs above.

I do not claim to have magical perfect solutions to this problem. The best I can say is that the ecosystem needs to more fully recognize that cross-L2 infrastructure is a type of Ethereum infrastructure, alongside L1 clients, dev tools and programming languages, and should be valorized and funded as such. We have Protocol Guild; maybe we need Basic Infrastructure Guild.

Conclusions

"Layer 2s" and "sharding" often get described in public discourse as being two opposite strategies for how to scale a blockchain. But when you look at the underlying technology, there is a puzzle: the actual underlying approaches to scaling are exactly the same. You have some kind of data sharding. You have fraud provers or ZK-SNARK provers. You have solutions for cross-rollup or cross-shard communication. The main difference is: who is responsible for building and updating those pieces, and how much autonomy do they have?

A layer-2-centric ecosystem is sharding in a very real technical sense, but it's sharding where you can go create your own shard with your own rules. This is powerful, and enables a lot of creativity and independent innovation. But it also has key challenges, particularly around coordination. For a layer-2-centric ecosystem like Ethereum to succeed, it needs to understand those challenges, and address them head-on, in order to get as many of the benefits of layer-1-centric ecosystems as possible, and come as close as possible to having the best of both worlds.
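
As a side note on the transaction speed discussion above, the "~6-7 seconds" average inclusion wait can be checked with a small calculation. This is only a sketch under a simplified model that is not from the post: the transaction arrives at a uniformly random moment within a slot, and each block independently fails to include it with a fixed probability. The function name and the `miss_probability` parameter are hypothetical.

```python
def expected_inclusion_wait(block_time_s: float, miss_probability: float) -> float:
    """Expected seconds until inclusion under a simple geometric model.

    Assumptions (illustrative only):
      - the transaction is submitted at a uniformly random time within a slot,
        so the wait for the next block averages block_time_s / 2;
      - each block independently fails to include it with miss_probability,
        and every miss costs one additional full block time.
    """
    if not 0.0 <= miss_probability < 1.0:
        raise ValueError("miss_probability must be in [0, 1)")
    wait_for_next_block = block_time_s / 2.0
    # Mean number of misses before the first success in a geometric process.
    expected_missed_blocks = miss_probability / (1.0 - miss_probability)
    return wait_for_next_block + block_time_s * expected_missed_blocks


if __name__ == "__main__":
    for q in (0.0, 0.02, 0.05, 0.075):
        wait = expected_inclusion_wait(12.0, q)
        print(f"miss probability {q:.3f}: expected wait {wait:.2f} s")
```

Under this model, a 12-second block time with no missed inclusions gives exactly 6 seconds, and a miss probability of a few percent pushes the average into the 6-7 second range.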

-

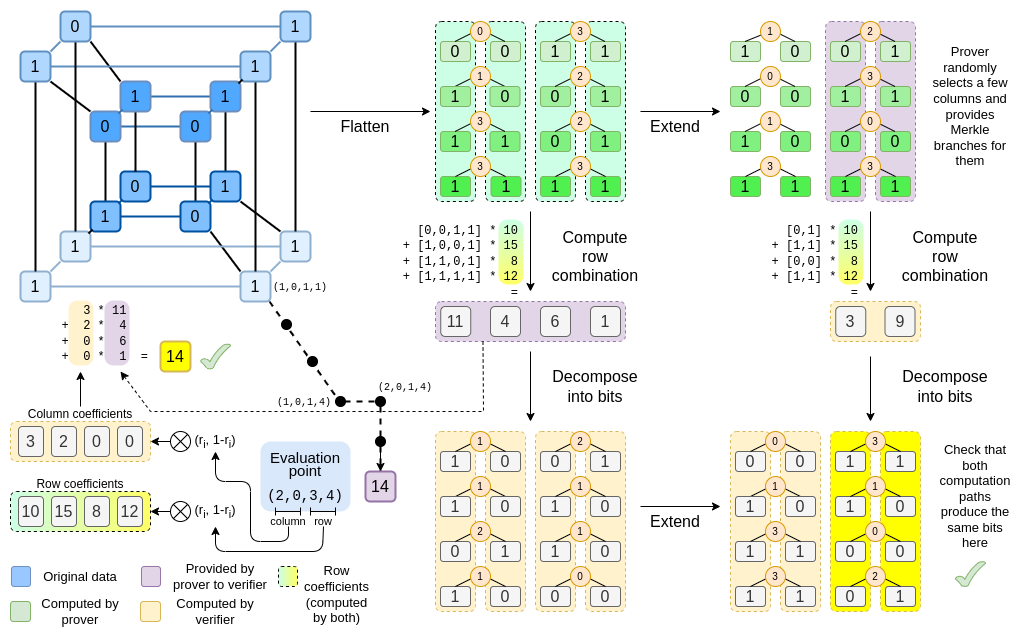

The near and mid-term future of improving the Ethereum network's permissionlessness and decentralization

2024 May 17

Special thanks to Dankrad Feist, Caspar Schwarz-Schilling and Francesco for rapid feedback and review.

I am sitting here writing this on the final day of an Ethereum developer interop in Kenya, where we made a large amount of progress implementing and ironing out technical details of important upcoming Ethereum improvements, most notably PeerDAS, the Verkle tree transition and decentralized approaches to storing history in the context of EIP-4444. From my own perspective, it feels like the pace of Ethereum development, and our capacity to ship large and important features that meaningfully improve the experience for node operators and (L1 and L2) users, is increasing.

Ethereum client teams working together to ship the Pectra devnet.

Given this greater technical capacity, one important question to be asking is: are we building toward the right goals? One prompt for thinking about this is a recent series of unhappy tweets from the long-time Geth core developer Peter Szilagyi:

These are valid concerns. They are concerns that many people in the Ethereum community have expressed. They are concerns that I have on many occasions had personally. However, I also do not think that the situation is anywhere near as hopeless as Peter's tweets imply; rather, many of the concerns are already being addressed by protocol features that are already in-progress, and many others can be addressed by very realistic tweaks to the current roadmap.

In order to see what this means in practice, let us go through the three examples that Peter provided one by one.
The goal is not to focus on Peter specifically; they are concerns that are widely shared among many community members, and it's important to address them.

MEV, and builder dependence

In the past, Ethereum blocks were created by miners, who used a relatively simple algorithm to create blocks. Users send transactions to a public p2p network often called the "mempool" (or "txpool"). Miners listen to the mempool, and accept transactions that are valid and pay fees. They include the transactions they can, and if there is not enough space, they prioritize by highest-fee-first.

This was a very simple system, and it was friendly toward decentralization: as a miner, you can just run default software, and you can get the same levels of fee revenue from a block that you could get from highly professional mining farms. Around 2020, however, people started exploiting what was called miner extractable value (MEV): revenue that could only be gained by executing complex strategies that are aware of activities happening inside of various defi protocols.

For example, consider decentralized exchanges like Uniswap. Suppose that at time T, the USD/ETH exchange rate - on centralized exchanges and on Uniswap - is $3000. At time T+11, the USD/ETH exchange rate on centralized exchanges rises to $3005. But Ethereum has not yet had its next block. At time T+12, it does. Whoever creates the block can make their first transaction be a series of Uniswap buys, buying up all of the ETH available on Uniswap at prices from $3000 to $3004. This is extra revenue, and is called MEV. Applications other than DEXes have their own analogues to this problem. The Flash Boys 2.0 paper published in 2019 goes into this in detail.
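
The Uniswap example above can be made concrete with a rough calculation. The sketch below assumes a constant-product (x * y = k) pool with no swap fee and no gas costs, and assumes the block creator buys ETH until the pool's marginal price matches the external price. The pool size, function name and parameters are illustrative assumptions, not figures from the post or from any real pool.

```python
import math


def arbitrage_constant_product(eth_reserve: float, usd_reserve: float,
                               external_price: float):
    """Trade against an x*y=k pool until its marginal price equals external_price.

    Returns (eth_bought, usd_paid, profit_usd). Swap fees and gas are ignored,
    so this is an upper bound on what a real arbitrageur would capture.
    """
    k = eth_reserve * usd_reserve               # pool invariant
    # Reserves at which usd_reserve / eth_reserve == external_price.
    new_eth = math.sqrt(k / external_price)
    new_usd = math.sqrt(k * external_price)
    eth_bought = eth_reserve - new_eth          # ETH taken out of the pool
    usd_paid = new_usd - usd_reserve            # USD put into the pool
    # Value the purchased ETH at the external (centralized exchange) price.
    profit_usd = eth_bought * external_price - usd_paid
    return eth_bought, usd_paid, profit_usd


if __name__ == "__main__":
    # Hypothetical pool: 1,000 ETH and 3,000,000 USD, so the pool price is $3000.
    eth_bought, usd_paid, profit = arbitrage_constant_product(
        1_000.0, 3_000_000.0, 3005.0
    )
    print(f"ETH bought: {eth_bought:.4f}")
    print(f"average price paid: {usd_paid / eth_bought:.2f}")
    print(f"profit (USD): {profit:.2f}")
```

The average price paid falls between the old pool price and the new external price, which is where the extra revenue comes from. The profit grows with pool depth and with the square of the price gap, so larger pools and larger moves between blocks make the first position in a block more valuable.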
A chart from the Flash Boys 2.0 paper that shows the amount of revenue capturable using the kinds of approaches described above.

The problem is that this breaks the story for why mining (or, post-2022, block proposing) can be "fair": now, large actors who have better ability to optimize these kinds of extraction algorithms can get a better return per block.

Since then there has been a debate between two strategies, which I will call MEV minimization and MEV quarantining. MEV minimization comes in two forms: (i) aggressively work on MEV-free alternatives to Uniswap (eg. Cowswap), and (ii) build in-protocol techniques, like encrypted mempools, that reduce the information available to block producers, and thus reduce the revenue that they can capture. In particular, encrypted mempools prevent strategies such as sandwich attacks, which put transactions right before and after users' trades in order to financially exploit them ("front-running").

MEV quarantining works by accepting MEV, but trying to limit its impact on staking centralization by separating the market into two kinds of actors: validators are responsible for attesting and proposing blocks, but the task of choosing the block's contents gets outsourced to specialized builders through an auction protocol. Individual stakers now no longer need to worry about optimizing defi arbitrage themselves; they simply join the auction protocol, and accept the highest bid. This is called proposer/builder separation (PBS). This approach has precedents in other industries: a major reason why restaurants are able to remain so decentralized is that they often rely on a fairly concentrated set of providers for various operations that do have large economies of scale. So far, PBS has been reasonably successful at ensuring that small validators and large validators are on a fair playing field, at least as far as MEV is concerned.
However, it creates another problem: the task of choosing which transactions get included becomes more concentrated.

My view on this has always been that MEV minimization is good and we should pursue it (I personally use Cowswap regularly!), though encrypted mempools have a lot of challenges. But MEV minimization will likely be insufficient; MEV will not go down to zero, or even near-zero. Hence, we need some kind of MEV quarantining too. This creates an interesting task: how do we make the "MEV quarantine box" as small as possible? How do we give builders the least possible power, while still keeping them capable of absorbing the role of optimizing arbitrage and other forms of MEV collecting?

If builders have the power to exclude transactions from a block entirely, there are attacks that can quite easily arise. Suppose that you have a collateralized debt position (CDP) in a defi protocol, backed by an asset whose price is rapidly dropping. You want to either bump up your collateral or exit the CDP. Malicious builders could try to collude to refuse to include your transaction, delaying it until prices drop by enough that they can forcibly liquidate your CDP. If that happens, you would have to pay a large penalty, and the builders would get a large share of it. So how can we prevent builders from excluding transactions and accomplishing these kinds of attacks?

This is where inclusion lists come in.

Source: this ethresear.ch post.

Inclusion lists allow block proposers (meaning, stakers) to choose transactions that are required to go into the block. Builders can still reorder transactions or insert their own, but they must include the proposer's transactions. Eventually, inclusion lists were modified to constrain the next block rather than the current block. In either case, they take away the builder's ability to push transactions out of the block entirely.

The above was all a deep rabbit hole of complicated background.
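The core inclusion-list rule can be sketched in a few lines. This is a simplified illustration, not the logic of any actual EIP: transactions are represented as plain strings, the function name `satisfies_inclusion_list` is hypothetical, and edge cases that real designs handle (a transaction that has become invalid, a block that is already full) are ignored.

```python
def satisfies_inclusion_list(block_txs, inclusion_list):
    """Return True if every transaction on the proposer's list appears in the block.

    Order is deliberately not checked: the builder may reorder transactions
    and add its own, but may not leave a listed transaction out.
    """
    included = set(block_txs)
    return all(tx in included for tx in inclusion_list)


# Hypothetical transaction identifiers.
inclusion_list = ["user_cdp_topup"]
honest_block = ["builder_arbitrage", "user_cdp_topup", "other_transfer"]
censoring_block = ["builder_arbitrage", "other_transfer"]

print(satisfies_inclusion_list(honest_block, inclusion_list))     # True
print(satisfies_inclusion_list(censoring_block, inclusion_list))  # False
```

Under this rule, the censoring block in the CDP scenario would simply be invalid, so colluding builders could no longer delay the user's top-up.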
But MEV is a complicated issue; even the above description misses lots of important nuances. As the old adage goes, "you may not be looking for MEV, but MEV is looking for you". Ethereum researchers are already quite aligned on the goal of "minimizing the quarantine box", reducing the harm that builders can do (eg. by excluding or delaying transactions as a way of attacking specific applications) as much as possible.

That said, I do think that we can go even further. Historically, inclusion lists have often been conceived as an "off-to-the-side special-case feature": normally, you would not think about them, but just in case malicious builders start doing crazy things, they give you a "second path". This attitude is reflected in current design decisions: in the current EIP, the gas limit of an inclusion list is around 2.1 million. But we can make a philosophical shift in how we think about inclusion lists: think of the inclusion list as being the block, and think of the builder's role as being an off-to-the-side function of adding a few transactions to collect MEV. What if it's builders that have the 2.1 million gas limit?

I think ideas in this direction - really pushing the quarantine box to be as small as possible - are really interesting, and I'm in favor of going in that direction. This is a shift from "2021-era philosophy": in 2021-era philosophy, we were more enthusiastic about the idea that, since we now have builders, we can "overload" their functionality and have them serve users in more complicated ways, eg. by supporting ERC-4337 fee markets. In this new philosophy, the transaction validation parts of ERC-4337 would have to be enshrined into the protocol. Fortunately, the ERC-4337 team is already increasingly warm about this direction.

Summary: MEV thought has already been going back in the direction of empowering block producers, including giving block producers the authority to directly ensure the inclusion of users' transactions.
Account abstraction proposals are already going back in the direction of removing reliance on centralized relayers, and even bundlers. However, there is a good argument that we are not going far enough, and I think pressure pushing the development process to go further in that direction is highly welcome.

Liquid staking

Today, solo stakers make up a relatively small percentage of all Ethereum staking, and most staking is done by various providers - some centralized operators, and others DAOs, like Lido and RocketPool.

I have done my own research - various polls [1] [2], surveys, in-person conversations, asking the question "why are you - specifically you - not solo staking today?" To me, a robust solo staking ecosystem is by far my preferred outcome for Ethereum staking, and one of the best things about Ethereum is that we actually try to support a robust solo staking ecosystem instead of just surrendering to delegation. However, we are far from that outcome. In my polls and surveys, there are a few consistent trends:

The great majority of people who are not solo staking cite their primary reason as being the 32 ETH minimum.
Out of those who cite other reasons, the most common is the technical challenge of running and maintaining a validator node.
The loss of instant availability of ETH, the security risks of "hot" private keys, and the loss of ability to simultaneously participate in defi protocols, are significant but smaller concerns.

The main reasons why people are not solo staking, according to Farcaster polls.

There are two key questions for staking research to resolve:

How do we solve these concerns?
If, despite effective solutions to most of these concerns, most people still don't want to solo stake, how do we keep the protocol stable and robust against attacks despite that fact?

Many ongoing research and development items are aimed precisely at solving these problems:

Verkle trees plus EIP-4444 allow staking nodes to function with very low hard disk requirements.
Additionally, they allow staking nodes to sync almost instantly, greatly simplifying the setup process, as well as operations such as switching from one implementation to another. They also make Ethereum light clients much more viable, by reducing the data bandwidth needed to provide proofs for every state access.
Research (eg. these proposals) into ways to allow a much larger validator set (enabling much smaller staking minimums) while at the same time reducing consensus node overhead. These ideas can be implemented as part of single slot finality. Doing this would also make light clients safer, as they would be able to verify the full set of signatures instead of relying on sync committees.
Ongoing Ethereum client optimizations keep reducing the cost and difficulty of running a validator node, despite growing history.
Research on capping penalties could potentially mitigate concerns around private key risk, and make it possible for stakers to simultaneously stake their ETH in defi protocols if that's what they wish to do.
0x01 withdrawal credentials allow stakers to set an ETH address as their withdrawal address. This makes decentralized staking pools more viable, giving them a leg up against centralized staking pools.

However, once again there is more that we could do. It is theoretically possible to allow validators to withdraw much more quickly: Casper FFG continues to be safe even if the validator set changes by a few percent every time it finalizes (ie. once per epoch). Hence, we could reduce the withdrawal period much more if we put effort into it. If we wanted to greatly reduce the minimum deposit size, we could make a hard decision to trade off in other directions, eg. if we increase the finality time by 4x, that would allow a 4x minimum deposit size decrease.
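The 4x-for-4x tradeoff can be checked with a back-of-the-envelope model. The sketch below assumes that consensus overhead is proportional to the number of signatures the network must process per second, and that every validator signs once per finality period; the function name and the total-stake figure are illustrative assumptions rather than protocol parameters.

```python
import math


def signatures_per_second(total_stake_eth, min_deposit_eth, finality_time_s):
    """Worst-case signature load if all stake is split into minimum-size validators."""
    max_validators = total_stake_eth / min_deposit_eth
    return max_validators / finality_time_s


TOTAL_STAKE_ETH = 32_000_000  # illustrative round number, not a measured value
FINALITY_TIME_S = 2 * 32 * 12  # two epochs of 32 slots at 12 seconds each

baseline = signatures_per_second(TOTAL_STAKE_ETH, 32, FINALITY_TIME_S)
traded_off = signatures_per_second(TOTAL_STAKE_ETH, 32 / 4, FINALITY_TIME_S * 4)

# Under this model the two loads are equal: the 4x smaller deposit is paid for
# by the 4x longer finality time.
print(baseline, traded_off)
```

In this model the load depends only on the product of minimum deposit and finality time, so any reduction in one can be offset by a proportional increase in the other.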
Single slot finality would later clean this up by moving beyond the "every staker participates in every epoch" model entirely.

Another important part of this whole question is the economics of staking. A key question is: do we want staking to be a relatively niche activity, or do we want everyone or almost everyone to stake all of their ETH? If everyone is staking, then what is the responsibility that we want everyone to take on? If people end up simply delegating this responsibility because they are lazy, that could end up leading to centralization. There are important and deep philosophical questions here. Incorrect answers could lead Ethereum down a path of centralization and "re-creating the traditional financial system with extra steps"; correct answers could create a shining example of a successful ecosystem with a wide and diverse set of solo stakers and highly decentralized staking pools. These are questions that touch on core Ethereum economics and values, and so we need more diverse participation here.

Hardware requirements of nodes

Many of the key questions in Ethereum decentralization end up coming down to a question that has defined blockchain politics for a decade: how accessible do we want to make running a node, and how?

Today, running a node is hard. Most people do not do it. On the laptop that I am using to write this post, I have a reth node, and it takes up 2.1 terabytes - already the result of heroic software engineering and optimization. I needed to go and buy an extra 4 TB hard drive to put into my laptop in order to store this node. We all want running a node to be easier. In my ideal world, people would be able to run nodes on their phones.

As I wrote above, EIP-4444 and Verkle trees are two key technologies that get us closer to this ideal.
If both are implemented, hardware requirements of a node could plausibly eventually decrease to less than a hundred gigabytes, and perhaps to near-zero if we eliminate the history storage responsibility (perhaps only for non-staking nodes) entirely. Type 1 ZK-EVMs would remove the need to run EVM computation yourself, as you could instead simply verify a proof that the execution was correct. In my ideal world, we stack all of these technologies together, and even Ethereum browser extension wallets (eg. Metamask, Rabby) have a built-in node that verifies these proofs, does data availability sampling, and is satisfied that the chain is correct.

The vision described above is often called "The Verge".

This is all known and understood, even by people raising the concerns about Ethereum node size. However, there is an important concern: if we are offloading the responsibility to maintain state and provide proofs, then is that not a centralization vector? Even if they can't cheat by providing invalid data, doesn't it still go against the principles of Ethereum to get too dependent on them?

One very near-term version of this concern is many people's discomfort toward EIP-4444: if regular Ethereum nodes no longer need to store old history, then who does? A common answer is: there are certainly enough big actors (eg. block explorers, exchanges, layer 2s) who have the incentive to hold that data, and compared to the 100 petabytes stored by the Wayback Machine, the Ethereum chain is tiny. So it's ridiculous to think that any history will actually be lost.

However, this argument relies on a small number of large actors. In my taxonomy of trust models, it's a 1-of-N assumption, but the N is pretty small. This has its tail risks. One thing that we could do instead is to store old history in a peer-to-peer network, where each node only stores a small percentage of the data.
This kind of network would still do enough copying to ensure robustness: there would be thousands of copies of each piece of data, and in the future we could use erasure coding (realistically, by putting history into EIP-4844-style blobs, which already have erasure coding built in) to increase robustness further.

Blobs have erasure coding within blobs and between blobs. The easiest way to make ultra-robust storage for all of Ethereum's history may well be to just put beacon and execution blocks into blobs. Image source: codex.storage

For a long time, this work has been on the backburner; Portal Network exists, but realistically it has not gotten the level of attention commensurate with its importance in Ethereum's future. Fortunately, there is now strong interest in, and momentum toward, putting far more resources into a minimized version of Portal that focuses on distributed storage, and accessibility, of history. This momentum should be built on, and we should make a concerted effort to implement EIP-4444 soon, paired with a robust decentralized peer-to-peer network for storing and retrieving old history.

For state and ZK-EVMs, this kind of distributed approach is harder. To build an efficient block, you simply have to have the full state. In this case, I personally favor a pragmatic approach: we define, and stick to, some level of hardware requirements needed to have a "node that does everything", which is higher than the (ideally ever-decreasing) cost of simply validating the chain, but still low enough to be affordable to hobbyists. We rely on a 1-of-N assumption, where we ensure that the N is quite large. For example, this could be a high-end consumer laptop.

ZK-EVM proving is likely to be the trickiest piece, and real-time ZK-EVM provers are likely to require considerably beefier hardware than an archive node, even with advancements like Binius, and worst-case-bounding with multidimensional gas.
We could work hard on a distributed proving network, where each node takes on the responsibility to prove eg. one percent of a block's execution, and then the block producer only needs to aggregate the hundred proofs at the end. Proof aggregation trees could help further. But if this doesn't work well, then one other compromise would be to allow the hardware requirements of proving to get higher, but make sure that a "node that does everything" can verify Ethereum blocks directly (without a proof), fast enough to effectively participate in the network.

Conclusions

I think it is actually true that 2021-era Ethereum thought became too comfortable with offloading responsibilities to a small number of large-scale actors, as long as some kind of market mechanism or zero knowledge proof system existed to force the centralized actors to behave honestly. Such systems often work well in the average case, but fail catastrophically in the worst case.

We're not doing this.

At the same time, I think it's important to emphasize that current Ethereum protocol proposals have already significantly moved away from that kind of model, and take the need for a truly decentralized network much more seriously. Ideas around stateless nodes, MEV mitigations, single-slot finality, and similar concepts, already are much further in this direction. A year ago, the idea of doing data availability sampling by piggy-backing on relays as semi-centralized nodes was seriously considered. This year, we've moved beyond the need to do such things, with surprisingly robust progress on PeerDAS.

But there is a lot that we could do to go further in this direction, on all three axes that I talked about above, as well as many other important axes. Helios has made great progress in giving Ethereum an "actual light client".
Now, we need to get it included by default in Ethereum wallets, and make RPC providers provide proofs along with their results so that they can be validated, and extend light client technology to layer 2 protocols. If Ethereum is scaling via a rollup-centric roadmap, layer 2s need to get the same security and decentralization guarantees as layer 1. In a rollup-centric world, there are many other things that we should be taking more seriously; decentralized and efficient cross-L2 bridges are one example of many. Many dapps get their logs through centralized protocols, as Ethereum's native log scanning has become too slow. We could improve on this with a dedicated decentralized sub-protocol; here is one proposal of mine for how this could be done.

There is a near-unlimited number of blockchain projects aiming for the niche of "we can be super-fast, we'll think about decentralization later". I don't think Ethereum should be one of those projects. Ethereum L1 can and certainly should be a strong base layer for layer 2 projects that do take a hyper-scale approach, using Ethereum as a backbone for decentralization and security. Even a layer-2-centric approach requires layer 1 itself to have sufficient scalability to handle a significant number of operations. But we should have deep respect for the properties that make Ethereum unique, and continue to work to maintain and improve on those properties as Ethereum scales.

The near and mid-term future of improving the Ethereum network's permissionlessness and decentralization The near and mid-term future of improving the Ethereum network's permissionlessness and decentralization2024 May 17 See all posts The near and mid-term future of improving the Ethereum network's permissionlessness and decentralization Special thanks to Dankrad Feist, Caspar Schwarz-Schilling and Francesco for rapid feedback and review.I am sitting here writing this on the final day of an Ethereum developer interop in Kenya, where we made a large amount of progress implementing and ironing out technical details of important upcoming Ethereum improvements, most notably PeerDAS, the Verkle tree transition and decentralized approaches to storing history in the context of EIP 4444. From my own perspective, it feels like the pace of Ethereum development, and our capacity to ship large and important features that meaningfully improve the experience for node operators and (L1 and L2) users, is increasing. Ethereum client teams working together to ship the Pectra devnet. Given this greater technical capacity, one important question to be asking is: are we building toward the right goals? One prompt for thinking about this is a recent series of unhappy tweets from the long-time Geth core developer Peter Szilagyi: These are valid concerns. They are concerns that many people in the Ethereum community have expressed. They are concerns that I have on many occasions had personally. However, I also do not think that the situation is anywhere near as hopeless as Peter's tweets imply; rather, many of the concerns are already being addressed by protocol features that are already in-progress, and many others can be addressed by very realistic tweaks to the current roadmap.In order to see what this means in practice, let us go through the three examples that Peter provided one by one. 
The goal is not to focus on Peter specifically; they are concerns that are widely shared among many community members, and it's important to address them.MEV, and builder dependenceIn the past, Ethereum blocks were created by miners, who used a relatively simple algorithm to create blocks. Users send transactions to a public p2p network often called the "mempool" (or "txpool"). Miners listen to the mempool, and accept transactions that are valid and pay fees. They include the transactions they can, and if there is not enough space, they prioritize by highest-fee-first.This was a very simple system, and it was friendly toward decentralization: as a miner, you can just run default software, and you can get the same levels of fee revenue from a block that you could get from highly professional mining farms. Around 2020, however, people started exploiting what was called miner extractable value (MEV): revenue that could only be gained by executing complex strategies that are aware of activities happening inside of various defi protocols.For example, consider decentralized exchanges like Uniswap. Suppose that at time T, the USD/ETH exchange rate - on centralized exchanges and on Uniswap - is $3000. At time T+11, the USD/ETH exchange rate on centralized exchanges rises to $3005. But Ethereum has not yet had its next block. At time T+12, it does. Whoever creates the block can make their first transaction be a series of Uniswap buys, buying up all of the ETH available on Uniswap at prices from $3000 to $3004. This is extra revenue, and is called MEV. Applications other than DEXes have their own analogues to this problem. The Flash Boys 2.0 paper published in 2019 goes into this in detail. 
A chart from the Flash Boys 2.0 paper that shows the amount of revenue capturable using the kinds of approaches described above.The problem is that this breaks the story for why mining (or, post-2022, block proposing) can be "fair": now, large actors who have better ability to optimize these kinds of extraction algorithms can get a better return per block.Since then there has been a debate between two strategies, which I will call MEV minimization and MEV quarantining. MEV minimization comes in two forms: (i) aggressively work on MEV-free alternatives to Uniswap (eg. Cowswap), and (ii) build in-protocol techniques, like encrypted mempools, that reduce the information available to block producers, and thus reduce the revenue that they can capture. In particular, encrypted mempools prevent strategies such as sandwich attacks, which put transactions right before and after users' trades in order to financially exploit them ("front-running").MEV quarantining works by accepting MEV, but trying to limit its impact on staking centralization by separating the market into two kinds of actors: validators are responsible for attesting and proposing blocks, but the task of choosing the block's contents gets outsourced to specialized builders through an auction protocol. Individual stakers now no longer need to worry about optimizing defi arbitrage themselves; they simply join the auction protocol, and accept the highest bid. This is called proposer/builder separation (PBS). This approach has precedents in other industries: a major reason why restaurants are able to remain so decentralized is that they often rely on a fairly concentrated set of providers for various operations that do have large economies of scale. So far, PBS has been reasonably successful at ensuring that small validators and large validators are on a fair playing field, at least as far as MEV is concerned. 
However, it creates another problem: the task of choosing which transactions get included becomes more concentrated.My view on this has always been that MEV minimization is good and we should pursue it (I personally use Cowswap regularly!) - though encrypted mempools have a lot of challenges, but MEV minimization will likely be insufficient; MEV will not go down to zero, or even near-zero. Hence, we need some kind of MEV quarantining too. This creates an interesting task: how do we make the "MEV quarantine box" as small as possible? How do we give builders the least possible power, while still keeping them capable of absorbing the role of optimizing arbitrage and other forms of MEV collecting?If builders have the power to exclude transactions from a block entirely, there are attacks that can quite easily arise. Suppose that you have a collateralized debt position (CDP) in a defi protocol, backed by an asset whose price is rapidly dropping. You want to either bump up your collateral or exit the CDP. Malicious builders could try to collude to refuse to include your transaction, delaying it until prices drop by enough that they can forcibly liquidate your CDP. If that happens, you would have to pay a large penalty, and the builders would get a large share of it. So how can we prevent builders from excluding transactions and accomplishing these kinds of attacks?This is where inclusion lists come in. Source: this ethresear.ch post. Inclusion lists allow block proposers (meaning, stakers) to choose transactions that are required to go into the block. Builders can still reorder transactions or insert their own, but they must include the proposer's transactions. Eventually, inclusion lists were modified to constrain the next block rather than the current block. In either case, they take away the builder's ability to push transactions out of the block entirely.The above was all a deep rabbit hole of complicated background. 
But MEV is a complicated issue; even the above description misses lots of important nuances. As the old adage goes, "you may not be looking for MEV, but MEV is looking for you". Ethereum researchers are already quite aligned on the goal of "minimizing the quarantine box", reducing the harm that builders can do (eg. by excluding or delaying transactions as a way of attacking specific applications) as much as possible.That said, I do think that we can go even further. Historically, inclusion lists have often been conceived as an "off-to-the-side special-case feature": normally, you would not think about them, but just in case malicious builders start doing crazy things, they give you a "second path". This attitude is reflected in current design decisions: in the current EIP, the gas limit of an inclusion list is around 2.1 million. But we can make a philosophical shift in how we think about inclusion lists: think of the inclusion list as being the block, and think of the builder's role as being an off-to-the-side function of adding a few transactions to collect MEV. What if it's builders that have the 2.1 million gas limit?I think ideas in this direction - really pushing the quarantine box to be as small as possible - are really interesting, and I'm in favor of going in that direction. This is a shift from "2021-era philosophy": in 2021-era philosophy, we were more enthusiastic about the idea that, since we now have builders, we can "overload" their functionality and have them serve users in more complicated ways, eg. by supporting ERC-4337 fee markets. In this new philosophy, the transaction validation parts of ERC-4337 would have to be enshrined into the protocol. Fortunately, the ERC-4337 team is already increasingly warm about this direction.Summary: MEV thought has already been going back in the direction of empowering block producers, including giving block producers the authority to directly ensure the inclusion of users' transactions. 
Account abstraction proposals are already going back in the direction of removing reliance on centralized relayers, and even bundlers. However, there is a good argument that we are not going far enough, and I think pressure pushing the development process to go further in that direction is highly welcome.Liquid stakingToday, solo stakers make up a relatively small percentage of all Ethereum staking, and most staking is done by various providers - some centralized operators, and others DAOs, like Lido and RocketPool. I have done my own research - various polls [1] [2], surveys, in-person conversations, asking the question "why are you - specifically you - not solo staking today?" To me, a robust solo staking ecosystem is by far my preferred outcome for Ethereum staking, and one of the best things about Ethereum is that we actually try to support a robust solo staking ecosystem instead of just surrendering to delegation. However, we are far from that outcome. In my polls and surveys, there are a few consistent trends:The great majority of people who are not solo staking cite their primary reason as being the 32 ETH minimum. Out of those who cite other reasons, the highest is technical challenge of running and maintaining a validator node. The loss of instant availability of ETH, the security risks of "hot" private keys, and the loss of ability to simultaneously participate in defi protocols, are significant but smaller concerns. The main reasons why people are not solo staking, according to Farcaster polls. There are two key questions for staking research to resolve:How do we solve these concerns? If, despite effective solutions to most of these concerns, most people still don't want to solo stake, how do we keep the protocol stable and robust against attacks despite that fact? Many ongoing research and development items are aimed precisely at solving these problems:Verkle trees plus EIP-4444 allow staking nodes to function with very low hard disk requirements. 
Additionallty, they allow staking nodes to sync almost instantly, greatly simplifying the setup process, as well as operations such as switching from one implementation to another. They also make Ethereum light clients much more viable, by reducing the data bandwidth needed to provide proofs for every state access. Research (eg. these proposals) into ways to allow a much larger valdiator set (enabling much smaller staking minimums) while at the same time reducing consensus node overhead. These ideas can be implemented as part of single slot finality. Doing this would also makes light clients safer, as they would be able to verify the full set of signatures instead of relying on sync committees). Ongoing Ethereum client optimizations keep reducing the cost and difficulty of running a validator node, despite growing history. Research on penalties capping could potentially mitigate concerns around private key risk, and make it possible for stakers to simultaneously stake their ETH in defi protocols if that's what they wish to do. 0x01 Withdrawal credentials allow stakers to set an ETH address as their withdrawal address. This makes decentralized staking pools more viable, giving them a leg up against centralized staking pools. However, once again there is more that we could do. It is theoretically possible to allow validators to withdraw much more quickly: Casper FFG continues to be safe even if the validator set changes by a few percent ever time it finalizes (ie. once per epoch). Hence, we could reduce the withdrawal period much more if we put effort into it. If we wanted to greatly reduce the minimum deposit size, we could make a hard decision to trade off in other directions, eg. if we increase the finality time by 4x, that would allow a 4x minimum deposit size decrease. 
Single slot finality would later clean this up by moving beyond the "every staker participates in every epoch" model entirely.Another important part of this whole question is the economics of staking. A key question is: do we want staking to be a relatively niche activity, or do we want everyone or almost everyone to stake all of their ETH? If everyone is staking, then what is the responsibility that we want everyone to take on? If people end up simply delegating this responsibility because they are lazy, that could end up leading to centralization. There are important and deep philosophical questions here. Incorrect answers could lead Ethereum down a path of centralization and "re-creating the traditional financial system with extra steps"; correct answers could create a shining example of a successful ecosystem with a wide and diverse set of solo stakers and highly decentralized staking pools. These are questions that touch on core Ethereum economics and values, and so we need more diverse participation here.Hardware requirements of nodesMany of the key questions in Ethereum decentralization end up coming down to a question that has defined blockchain politics for a decade: how accessible do we want to make running a node, and how?Today, running a node is hard. Most people do not do it. On the laptop that I am using to write this post, I have a reth node, and it takes up 2.1 terabytes - already the result of heroic software engineering and optimization. I needed to go and buy an extra 4 TB hard drive to put into my laptop in order to store this node. We all want running a node to be easier. In my ideal world, people would be able to run nodes on their phones.As I wrote above, EIP-4444 and Verkle trees are two key technologies that get us closer to this ideal. 
If both are implemented, hardware requirements of a node could plausibly eventually decrease to less than a hundred gigabytes, and perhaps to near-zero if we eliminate the history storage responsibility (perhaps only for non-staking nodes) entirely. Type 1 ZK-EVMs would remove the need to run EVM computation yourself, as you could instead simply verify a proof that the execution was correct. In my ideal world, we stack all of these technologies together, and even Ethereum browser extension wallets (eg. Metamask, Rabby) have a built-in node that verifies these proofs, does data availability sampling, and is satisfied that the chain is correct.

The vision described above is often called "The Verge".

This is all known and understood, even by people raising the concerns about Ethereum node size. However, there is an important concern: if we are offloading the responsibility to maintain state and provide proofs, then is that not a centralization vector? Even if they can't cheat by providing invalid data, doesn't it still go against the principles of Ethereum to get too dependent on them?

One very near-term version of this concern is many people's discomfort toward EIP-4444: if regular Ethereum nodes no longer need to store old history, then who does? A common answer is: there are certainly enough big actors (eg. block explorers, exchanges, layer 2s) who have the incentive to hold that data, and compared to the 100 petabytes stored by the Wayback Machine, the Ethereum chain is tiny. So it's ridiculous to think that any history will actually be lost.

However, this argument relies on dependence on a small number of large actors. In my taxonomy of trust models, it's a 1-of-N assumption, but the N is pretty small. This has its tail risks. One thing that we could do instead is to store old history in a peer-to-peer network, where each node only stores a small percentage of the data.
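To get a feel for why a network like this can be robust even though each node holds only a sliver of the data, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that each node independently stores a uniformly random fraction of all history chunks; the node count and the fraction are made-up numbers, and real designs such as Portal assign data differently. Probabilities are computed in log space because the raw values underflow a float.

```python
import math


def expected_copies(num_nodes: int, fraction_stored: float) -> float:
    """Expected number of nodes holding any given chunk."""
    return num_nodes * fraction_stored


def log10_prob_chunk_lost(num_nodes: int, fraction_stored: float) -> float:
    """log10 of the chance that no node holds a given chunk.

    With independent random selection this is log10((1 - f) ** N),
    computed via log1p so that it does not underflow to zero.
    """
    return num_nodes * math.log1p(-fraction_stored) / math.log(10)


# Hypothetical parameters, not measurements of any real network.
NUM_NODES = 100_000
FRACTION_STORED = 0.02  # each node keeps 2% of history

copies = expected_copies(NUM_NODES, FRACTION_STORED)
loss_all_online = log10_prob_chunk_lost(NUM_NODES, FRACTION_STORED)
# Pessimistic case: 90% of the nodes disappear at once.
loss_after_outage = log10_prob_chunk_lost(NUM_NODES // 10, FRACTION_STORED)

print(f"expected copies per chunk: {copies:.0f}")
print(f"log10 P(chunk lost), all nodes online: {loss_all_online:.1f}")
print(f"log10 P(chunk lost), 10% of nodes left: {loss_after_outage:.1f}")
```

Under these assumptions the chance of losing any particular chunk stays vanishingly small even after most nodes go offline. The independence assumption is doing real work here: correlated failures or a small set of dominant operators would weaken the result.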
This kind of network would still do enough copying to ensure robustness: there would be thousands of copies of each piece of data, and in the future we could use erasure coding (realistically, by putting history into EIP-4844-style blobs, which already have erasure coding built in) to increase robustness further.

Blobs have erasure coding within blobs and between blobs. The easiest way to make ultra-robust storage for all of Ethereum's history may well be to just put beacon and execution blocks into blobs. Image source: codex.storage

For a long time, this work has been on the backburner; Portal Network exists, but realistically it has not gotten the level of attention commensurate with its importance in Ethereum's future. Fortunately, there is now strong interest and momentum toward putting far more resources into a minimized version of Portal that focuses on distributed storage, and accessibility, of history. This momentum should be built on, and we should make a concerted effort to implement EIP-4444 soon, paired with a robust decentralized peer-to-peer network for storing and retrieving old history.

For state and ZK-EVMs, this kind of distributed approach is harder. To build an efficient block, you simply have to have the full state. In this case, I personally favor a pragmatic approach: we define, and stick to, some level of hardware requirements needed to have a "node that does everything", which is higher than the (ideally ever-decreasing) cost of simply validating the chain, but still low enough to be affordable to hobbyists. We rely on a 1-of-N assumption, where we ensure that the N is quite large. For example, this could be a high-end consumer laptop.

ZK-EVM proving is likely to be the trickiest piece, and real-time ZK-EVM provers are likely to require considerably beefier hardware than an archive node, even with advancements like Binius, and worst-case-bounding with multidimensional gas.
We could work hard on a distributed proving network, where each node takes on the responsibility to prove eg. one percent of a block's execution, and then the block producer only needs to aggregate the hundred proofs at the end. Proof aggregation trees could help further. But if this doesn't work well, then one other compromise would be to allow the hardware requirements of proving to get higher, but make sure that a "node that does everything" can verify Ethereum blocks directly (without a proof), fast enough to effectively participate in the network.

Conclusions

I think it is actually true that 2021-era Ethereum thought became too comfortable with offloading responsibilities to a small number of large-scale actors, as long as some kind of market mechanism or zero-knowledge proof system existed to force the centralized actors to behave honestly. Such systems often work well in the average case, but fail catastrophically in the worst case.

We're not doing this.

At the same time, I think it's important to emphasize that current Ethereum protocol proposals have already significantly moved away from that kind of model, and take the need for a truly decentralized network much more seriously. Ideas around stateless nodes, MEV mitigations, single-slot finality, and similar concepts, already are much further in this direction. A year ago, the idea of doing data availability sampling by piggy-backing on relays as semi-centralized nodes was seriously considered. This year, we've moved beyond the need to do such things, with surprisingly robust progress on PeerDAS.

But there is a lot that we could do to go further in this direction, on all three axes that I talked about above, as well as many other important axes. Helios has made great progress in giving Ethereum an "actual light client".
Now, we need to get it included by default in Ethereum wallets, and make RPC providers provide proofs along with their results so that they can be validated, and extend light client technology to layer 2 protocols. If Ethereum is scaling via a rollup-centric roadmap, layer 2s need to get the same security and decentralization guarantees as layer 1. In a rollup-centric world, there are many other things that we should be taking more seriously; decentralized and efficient cross-L2 bridges are one example of many. Many dapps get their logs through centralized protocols, as Ethereum's native log scanning has become too slow. We could improve on this with a dedicated decentralized sub-protocol; here is one proposal of mine for how this could be done.

There is a near-unlimited number of blockchain projects aiming for the niche of "we can be super-fast, we'll think about decentralization later". I don't think Ethereum should be one of those projects. Ethereum L1 can and certainly should be a strong base layer for layer 2 projects that do take a hyper-scale approach, using Ethereum as a backbone for decentralization and security. Even a layer-2-centric approach requires layer 1 itself to have sufficient scalability to handle a significant number of operations. But we should have deep respect for the properties that make Ethereum unique, and continue to work to maintain and improve on those properties as Ethereum scales.